Monitoring intervention fidelity of a lifestyle behavioral intervention delivered through telehealth

Introduction

The rapid growth in digital and mHealth technology over the last 20 years has led to a rise in technology-based interventions for improving health behaviors such as diet, exercise, smoking cessation and sleep. Technology-based interventions (i.e., telehealth, mHealth, eHealth, and/or digital health) offer numerous advantages over traditional clinical settings, such as convenience, cost, and the ability to tailor plans and feedback to a participant’s individual needs, making them attractive alternatives to traditional clinical interventions. Nonetheless, telehealth interventions also present challenges, such as the absence of face-to-face interaction and unforeseen difficulties in technology receipt, adoption, and intention to use the technology (1). Because of these difficulties, the factors that actually contribute to change in this type of intervention have come under considerable scrutiny (2).

Because of the myriad factors at play in a telehealth behavioral intervention, intervention fidelity monitoring is of particular importance. Intervention fidelity refers to the methodological strategies used to monitor and enhance the delivery and receipt of behavioral interventions. Monitoring fidelity is vital for ensuring internal validity and optimizing external validity of behavioral intervention trials. Inadequate monitoring of fidelity can lead to misinterpretation of trial results by inflating both Type I and Type II errors due to the introduction of unmeasured confounding variables such as differential treatment dose.

Despite the importance of fidelity in behavioral interventions in general, and in technology-based interventions specifically, few behavioral trials fully report intervention fidelity protocols or the measures by which adherence to those protocols is monitored. In a systematic review of health behavior interventions delivered through technology, Shingleton found that only 2 of 41 studies (4.9%) reported any type of treatment fidelity data (3). Another systematic review of mHealth physical activity interventions found that only 2 of 15 trials (13.3%) reported any measures pertaining to intervention fidelity (4). Furthermore, after examining 72 studies for fidelity quality, Slaughter described the fidelity documentation in health interventions across the board as “poor”, consistent with the findings of other systematic reviews on the topic (2).

The current gap regarding fidelity protocol development and implementation in the literature contributes to difficulty in replication and translation of results into practice. Thus, the purpose of this paper is to describe the intervention fidelity protocol for the 24-START study, a behavior change intervention delivered through the telephone and internet, and discuss the results of a pilot audit undertaken to determine feasibility of monitoring adherence to the protocol. The protocol was developed to address the five areas of intervention fidelity outlined by the NIH Behavior Change Consortium (NIH BCC): Design of Study, Provider Training, Delivery of Treatment, Receipt of Treatment, and Enactment of Treatment (5).

Methods

24-START study overview

The 24-week Study Targeting Adherence to Regimented Diets using Telehealth (24-START) is an ongoing randomized controlled trial of a behavioral intervention delivered via telephone and internet. The primary aim of 24-START is to examine the impact of diet composition on dietary adherence in a sample of adults with spinal cord injury over a six-month period. All participants are primarily wheelchair users and have a BMI between 25 and 55 kg/m². Once enrolled, participants are randomly assigned to either a low carbohydrate diet or a low fat diet for 24 weeks. Over the course of the six-month intervention, participants are asked to: (I) employ self-monitoring strategies by tracking food intake, exercise, and hydration daily and tracking weight weekly using a website and app; (II) engage in regular phone calls with a telehealth coach to discuss progress toward diet, exercise, and hydration goals; and (III) engage with personalized resources such as meal planning resources, recipes, and exercise videos, which are recommended by the coach and distributed through the website and mobile app. Phone calls occur weekly for the first three months and bi-weekly for the last three months. All telehealth coaches are trained in Motivational Interviewing (MI) (6) and cognitive behavioral therapy techniques. Telehealth coaches serve as a form of accountability, in addition to helping participants explore internal motivation, discuss behavior change strategies and barriers to change, and resolve any questions. The 24-START trial was approved by the Institutional Review Board (protocol number 151001005) and all participants provide written informed consent prior to enrollment. The trial is registered with the United States National Library of Medicine trial registry (clinicaltrials.gov, NCT02630524).

Intervention fidelity protocol

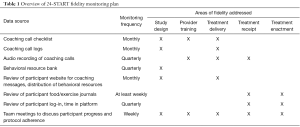

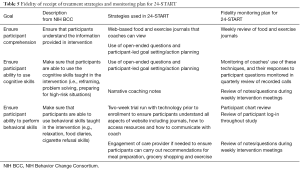

The following sections outline the five NIH BCC areas of fidelity and the corresponding protocols developed for the 24-START study. In addition to the development of intervention fidelity protocols, a fidelity monitoring plan was also developed to ensure adherence to the fidelity protocols. Because fidelity strategies are only effective if rigorously implemented, the monitoring plan is intended to ensure the fidelity strategies developed are consistently executed throughout the duration of the study. An overview of this monitoring plan can be found in Table 1.

Fidelity of study design

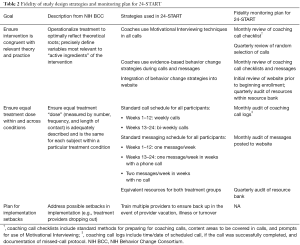

The NIH BCC defines fidelity of study design as those practices intended to ensure that a study can adequately test the stated hypotheses in relation to underlying theory and clinical processes. Specifically, the BCC outlined three goals for fidelity of study design: ensuring that interventions are congruent with relevant theory and practice, ensuring equivalent treatment dose within and across conditions, and planning for implementation setbacks (Table 2).

Ensure interventions are congruent with relevant theory and practice

Two key foundational design principles of the 24-START intervention include MI and the delivery of evidence-based behavior change strategies as defined by the behavior change taxonomy (BCT) (6,7). These techniques are essential components for promoting dietary adherence in both treatment groups in 24-START. Several strategies were developed to ensure these components were implemented as designed. For MI delivery, telehealth coaches are expected to use MI techniques in every coaching call. Two fidelity measures were designed to track use of MI in calls. First, coaches use a coaching call checklist to remind them of key aspects of MI to use in calls. These aspects include open-ended questions, listening more than speaking, using reflective and summary statements, and allowing participants to set their goals and develop action plans. New coaches complete a checklist during every call, while experienced coaches complete a checklist during every 4th call. Additionally, coaching calls are audio recorded for review of MI techniques by the coaches and investigators.

Evidence-based behavior change strategies are delivered through the website discussion board messages, coaching call sessions, and resources provided on the website. In addition to cuing coaches to use MI, the coaching call checklists also prompt coaches to review behavior change strategies and provide additional behavioral resources in each call.

Adherence to these fidelity measures is actively monitored through monthly audits of coaching call checklists and messages posted in the discussion board to assess whether MI is being implemented as planned. These audits also provide insight into whether behavior change techniques are being emphasized by the coaches. A random 10% sample of all active participant files is chosen for each audit. Recordings of coaching calls are audited quarterly: a random selection of 10% of all phone calls completed by each coach is transcribed, and auditing staff review the transcripts for MI techniques. Lastly, the resources available to participants on the website are monitored quarterly to ensure behavior change techniques are incorporated into the intervention.
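As a minimal sketch of how a 10% audit sample like the one described above could be drawn reproducibly, the following Python function selects a random subset of record identifiers. The function name, the participant identifiers, the seed, and the decision to round up to at least one record are illustrative assumptions; the sampling procedure actually used in the study is not specified in this paper.

```python
import random

def draw_audit_sample(record_ids, percent=10, seed=None):
    """Return a sorted random sample of about `percent`% of the records."""
    ids = sorted(record_ids)  # fixed order so a given seed reproduces the draw
    if not ids:
        return []
    # Assumption: round up so that every audit reviews at least one record.
    sample_size = max(1, (len(ids) * percent + 99) // 100)
    rng = random.Random(seed)
    return sorted(rng.sample(ids, sample_size))

# Hypothetical identifiers for 40 active participant files.
active_files = [f"P{n:03d}" for n in range(1, 41)]
monthly_sample = draw_audit_sample(active_files, seed=2017)
print(monthly_sample)
```

Recording the seed alongside each audit would allow a second auditor to reproduce the same draw. The same function could be applied separately to each coach's list of completed calls for the quarterly recording review.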

Ensure equivalent treatment dose within and across conditions

In 24-START, three main media make up the treatment dose: (I) telehealth coaching calls; (II) website discussion board messages from the telehealth coach; and (III) resources delivered through the website to assist with behavior change. To ensure equal dosing, the study is designed so that participants in both treatment groups receive the same number and frequency of phone calls, messages, and resources over the course of the intervention. Calls occur weekly for the first twelve weeks and bi-weekly (every other week) for the last twelve weeks. The duration of phone calls may vary from week to week depending on participant needs, but calls should generally last twenty to forty-five minutes regardless of group assignment. In addition to the regular phone calls, coaches provide a secondary contact throughout the study via the website discussion board. During weeks when a call is scheduled, coaches deliver one message; during weeks when no call is scheduled, coaches deliver two messages. Finally, participants receive equivalent resources regardless of group assignment for the first five weeks. After that, coaches have access to an equal number of group-specific resources to dispense as needed; that is, the same number of low carbohydrate specific resources and low fat specific resources is available for distribution.
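The planned per-participant dose implied by these rules can be tallied with a short Python sketch. It assumes a participant completes all 24 weeks and that the bi-weekly calls fall on every second week of the later phase (weeks 14, 16, ..., 24); the exact call weeks are an assumption, as are the function and field names.

```python
def planned_contacts(total_weeks=24, weekly_phase_weeks=12):
    """Build the planned weekly schedule of calls and discussion board messages."""
    schedule = []
    for week in range(1, total_weeks + 1):
        if week <= weekly_phase_weeks:
            has_call = True  # weekly calls in the first phase
        else:
            # Assumption: calls land on every second week of the later phase.
            has_call = (week - weekly_phase_weeks) % 2 == 0
        # One message on call weeks, two messages on weeks without a call.
        messages = 1 if has_call else 2
        schedule.append({"week": week, "call": has_call, "messages": messages})
    return schedule

schedule = planned_contacts()
total_calls = sum(1 for week in schedule if week["call"])
total_messages = sum(week["messages"] for week in schedule)
print(total_calls, total_messages)  # 18 calls, 30 messages
```

Under these assumptions the plan comes to 18 calls and 30 messages per participant, which gives auditors a fixed denominator against which delivered contacts in the call logs can be compared.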

Adherence to treatment dosing is monitored by monthly reviews of a 10% random sample of coaching call logs, which contain records of when the phone calls and messages were delivered. Quarterly audits for resource equity are performed to ensure that both treatment groups have the same number and topics of resources to assist with dietary adherence and behavior change.

Plan for implementation setbacks

Planning for study design implementation setbacks allows investigators to remedy any deviations from the original study design. Because the telehealth coaches are the main mechanism by which the intervention is delivered, the primary fidelity strategy developed for 24-START is to train multiple telehealth coaches on the protocol to mitigate any potential implementation setbacks that may arise from telehealth coach transition, sick leave, or vacation.

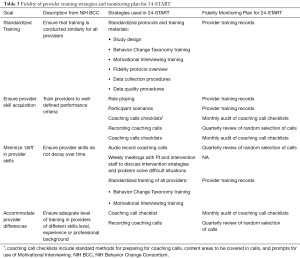

Provider training

The NIH BCC defines fidelity in provider training as ensuring all treatment providers have been satisfactorily trained to deliver the intervention (Table 3). Because providers are frequently the front line for delivering the study as designed, a provider fidelity protocol is essential to ensure adequate training is completed for all providers. For 24-START, the providers of the intervention are the telehealth coaches.

Standardized training

The NIH BCC suggests standardized training for all providers to boost provider training fidelity. In 24-START, all coaches receive the same training prior to delivering the intervention to participants. This training entails six components, including familiarization with the study design through studying and discussing the grant; completion of the BCT training, which takes coaches through six modules of learning and assessments regarding evidence-based behavior change strategies; MI training; shadowing other coaches through data collection procedures; and training in fidelity protocols and data quality procedures (6,7). Training records are kept to ensure each provider completes all training.

Ensure provider skill acquisition

Provider training provides the foundation for initial skill development, yet skill acquisition must be verified to ensure providers deliver the intervention as designed. The main skills needed in the 24-START study are an understanding of the dietary guidelines for each treatment group, MI techniques, and evidence-based behavior change techniques to assist participants with behavior change. Several strategies are built into 24-START to solidify skill acquisition, including role-playing and walking through potential scenarios that may arise during telehealth coaching. New coaches also observe experienced coaches utilizing MI and behavior change techniques during live phone calls. The coaching call checklists previously described also reinforce skills once training is complete by providing coaches with prompts on the content and communication styles to use in each call. Lastly, skill acquisition is monitored through periodic recordings of phone calls.

This portion of the fidelity protocol is monitored via monthly audits of coaching call checklists and quarterly reviews of a random selection of phone recordings. In the event an audit reveals a lack of skill acquisition, the PI is notified and can assist coaches with additional training as needed.

Minimize ‘drift’ in provider skills

Each participant in 24-START is engaged in the intervention for six months, with a total of 18 coaching calls over the duration of the study. Because of the length and intensity of the intervention, monitoring coaches’ skills over time is critical to ensure the intervention is implemented consistently with each participant at every coach-to-participant contact. 24-START includes several measures to prevent provider drift: self-monitoring, audio-recordings, and team collaboration. First, coaches are expected to self-monitor their skills using the coaching call checklists described above. New coaches must use the checklist for every call until they have successfully coached three participants. Experienced coaches use the checklist for every fourth call performed. Second, the quarterly review of audio-recordings acts as a barrier to drift by allowing the PI to assess provider skills throughout the data collection period. The third measure is weekly team meetings involving the coaches and PI. These meetings include built-in time to discuss any issues with coaching, learn from one another, problem solve together, and discuss MI and behavior change strategies.
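The checklist rule for new and experienced coaches can be expressed as a small decision function, sketched below in Python. How "every fourth call" is counted is not stated in the protocol; this sketch assumes a running, 1-based count of each coach's calls, and the function and parameter names are hypothetical.

```python
def checklist_required(participants_coached, call_number):
    """Decide whether a coach must complete a checklist for a given call.

    participants_coached: participants the coach has successfully coached so far
    call_number: 1-based running count of the coach's calls (assumed counting rule)
    """
    if participants_coached < 3:
        return True  # new coach: checklist on every call
    return call_number % 4 == 0  # experienced coach: every fourth call

# Hypothetical examples.
print(checklist_required(participants_coached=1, call_number=7))  # True
print(checklist_required(participants_coached=4, call_number=7))  # False
print(checklist_required(participants_coached=4, call_number=8))  # True
```

An auditor could apply the same rule to the call logs to work out how many checklists should exist for each coach before checking which ones are missing.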

Accommodate provider differences

Because differences such as professional background and previous experience can affect the way study staff implement an intervention, the NIH BCC recommends building in measures to ensure provider training is adequate and appropriate.

Within 24-START, the standardized training previously mentioned allows the specific skills required for intervention delivery to be honed and accentuated in all providers regardless of inherent differences. All telehealth coaches are also required to possess a bachelor’s degree or higher along with some background in nutrition, exercise, and behavior change techniques. Beyond initial credentialing and training, the coaching call checklists ensure coaches deliver the active ingredients of the intervention rather than extraneous elements from past training or personal experiences. The coaching call recordings and subsequent auditing provide greater insight into any provider differences that should be accounted for when considering participant outcomes.

Delivery of treatment

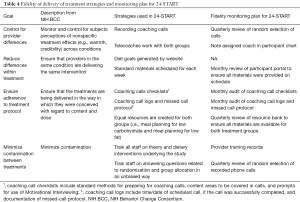

Fidelity of treatment delivery refers to ensuring the intervention is delivered as intended (Table 4). The NIH BCC outlines four goals for fidelity of treatment delivery: control for provider differences, reduce differences within treatment groups, ensure adherence to treatment protocols, and minimize contamination between conditions.

Control for provider differences

Differences between coaches have the potential to influence participant behavior. Work style, credibility, or personality differences may inadvertently affect participant treatment adherence. The primary strategy utilized in 24-START to control for participant perceptions of nonspecific treatment effects is to assign participants from both conditions to the same coach. Because coaches interact with participants from both the low carbohydrate and the low fat diet groups, variance from provider differences is distributed across both groups. Provider differences are monitored through the quarterly review of audio-recorded calls. Provider differences that may influence treatment delivery can be identified and addressed in on-going staff trainings. Finally, coach assignment will be accounted for in data analysis.

Reduce differences within treatment

Within 24-START, this area of fidelity focuses on two areas of the intervention: diet prescription and behavioral resources. Participants are assigned a diet prescription (i.e., daily calorie level, carbohydrate or fat goals) based on measured metabolic rate and random treatment assignment. The procedures for calculating treatment goals are standardized according to group assignment and all calculations are performed by the coaching platform. This reduces the potential for human error and differences within treatment groups.

All participants within the same treatment group are provided with the same resources for the first five weeks to ensure participants are equally equipped to adhere to their diet assignment. These materials include treatment-specific content that explores the benefits of that particular treatment, general information about diet and exercise, suggestions for getting started on the diet, and strategies for behavior modification to assist with adherence. Coaches encourage participants to review the resources and discuss specific resources as needed during the coaching calls. These materials are delivered digitally through the website. These strategies are monitored through monthly reviews of 10% of all participant profiles to ensure resources are provided according to the prescribed timeline.

Ensure adherence to treatment protocol

Several strategies are in place to ensure both groups receive the treatment dose and content as designed. These strategies include regular use of the coaching call checklist as previously described (during all calls for new coaches and every fourth call for experienced coaches) and coaching call logs. Call logs ensure that all participants receive an equivalent number and frequency of calls by tracking the dates and times of scheduled interactions as well as coach adherence to the missed call protocol. The checklists and call logs are audited monthly.

Minimize contamination between treatments

Because 24-START is an individual-level intervention carried out within the digital environment, the chance of participants from different treatment groups interacting is lower than in traditional clinic-based interventions. Additionally, all in-person data collection is scheduled so as to avoid contamination. Only one participant is scheduled for data collection each day, and all in-person visits are performed before randomization (baseline measures) or after completion of the study (final measures). Mid-point measures include only computer-based questionnaires and telephone interviews for dietary recalls. Upon randomization, participants are assigned to the appropriate diet group within the coaching platform. This prevents human error in the distribution of diet goals to each participant.

Given the telehealth delivery of the intervention, the primary source of contamination is coaches giving advice or resources intended for the other diet group. Coaches are expected to provide the same level of expertise to participants in both the low carbohydrate and low fat diet groups. To minimize the possibility of coaches delivering components of one treatment to the other, coaches are trained on the theory and specific dietary elements essential for adherence for both treatments. Coaches are also trained to provide unbiased coaching for participants. Standardized training and the quarterly review of recorded phone calls act as monitoring steps to detect contamination.

Receipt of treatment

Fidelity of treatment receipt refers to the steps taken to ensure participants have the ability to understand and perform treatment-related behavioral and cognitive strategies (Table 5). Participant receipt of treatment is crucial for telehealth studies. Unlike clinic-based behavioral interventions, where participants regularly engage in face-to-face contact with providers, telehealth involves minimal in-person contact, and many interactions rely on written or verbal communication in which providers may miss nonverbal cues of comprehension. In 24-START, participants interact with coaches in person only during the baseline data collection period, when coaches introduce them to the technology they will be using for the study. Therefore, monitoring participant comprehension and ability to perform assigned cognitive and behavioral tasks depends heavily on the digital tools used within the intervention.

Ensure participant comprehension

Study outcomes should ideally be interpreted under the assumption that all participants fully understood what was being asked of them during the intervention. Strategies to ensure participant comprehension of treatment protocols increase the likelihood that participant outcomes reflect the effectiveness or ineffectiveness of the treatment, rather than variation in participant comprehension. All participants enrolled in 24-START are asked to record their diet, exercise, and hydration daily in a web-based food journal viewable by the health coaches. Coaches review these journals weekly and engage in dialogue with participants regarding the contents of their journal during coaching sessions. Poor participant comprehension is often revealed in the content of the journal. If a coach suspects a lack of comprehension during a call, they can monitor the journal throughout the week and provide additional prompts on journal entries, or additional educational resources to improve understanding. Additionally, coaches are trained to use open-ended questions that elicit cues to understanding from participants. Finally, coaches encourage participants to set their own weekly goals and action plans. This allows coaches to gauge comprehension of the dietary prescription and behavioral strategies discussed during the coaching sessions. These strategies are monitored during coaches’ weekly reviews of journals, as well as during reviews of participant progress during weekly intervention meetings.

Ensure participant ability to use cognitive skills

Behavioral interventions such as 24-START encourage participants to employ cognitive skills such as problem solving, goal setting, and action planning to meet their diet, exercise, and hydration goals. The use of MI enables coaches to gauge participants’ ability to use these cognitive skills through open-ended questions and participant-led goal-setting. Indicators of inadequate cognitive skills include participants setting vague or ambiguous goals, continual failure to meet goals, resistance to goal progression, or ongoing reporting of the same barriers to goal achievement. The use of MI is monitored through the quarterly review of recorded phone calls. Detailed confidential notes taken by coaches after each phone call also serve as a fidelity strategy to ensure participant utilization of these cognitive skills. These narrative notes provide space for coaches to analyze the participant’s progress and are a valuable source of qualitative data that can be used to interpret participant outcomes.

Ensure participant ability to perform behavioral skills

Several behavioral skills are needed to successfully participate in the 24-START study. Participants are expected to engage with technology on a daily basis, participate in regular exercise, and be able to prepare meals, or have a care provider prepare meals, in accordance with their treatment condition. The online and telephone-based study design makes it challenging to confirm participants’ ability to perform all behavioral skills, since direct observation of participants’ daily behavior is outside the scope of the study design. To address this challenge, a two-week trial period is built into the intervention timeline, during which participants are asked to familiarize themselves with the web-based journals, learn how to communicate with coaches digitally, and learn how to access the resources provided via the website prior to receiving their treatment condition. After the two-week period, any questions regarding these behavioral skills are addressed with coaches. Participant ability is continually assessed throughout the study through regular reviews of participant journals and charts. It is not uncommon in the spinal cord injury population for care providers to assist with the implementation of behavioral skills; thus, coaches may also communicate with participants’ care providers, if appropriate, to assess participant ability.

Enactment of treatment

Fidelity of treatment enactment pertains to the participants’ execution of cognitive and behavioral skills during the intervention. Fidelity of treatment enactment is important to ensure that participants are regularly engaging with cognitive and behavioral skills highlighted during treatment (Table 6).

Ensure participant use of cognitive skills

As previously discussed, cognitive skills such as problem solving, goal setting, and action planning are emphasized to help participants meet their diet, exercise, and hydration goals. Use of these cognitive skills is assessed mainly through the coaching call sessions. MI techniques used by coaches naturally prompt conversation about cognitive skills, such as discussion of problem solving or action plans. The frequency of the calls permits coaches to follow up with participants in a timely manner regarding any new strategies or goals expressed in previous phone calls. Discrepancies between participants’ verbalized intentions to use cognitive skills and their actual use of those skills can be addressed as needed during the coaching sessions.

Ensure participant use of behavioral skills

Similar to monitoring cognitive skills, the regular coaching calls are one of the main strategies in place to ensure participants use behavioral skills. Furthermore, weekly reviews of participants’ web-based journals prompt coaches to monitor participant fidelity to behaviors such as self-monitoring. Blank or incomplete journals raise questions about participant adoption of behavioral skills and coaches are trained to address the journals weekly with participants. In addition to self-monitoring, participants are asked to engage with the technology provided and utilize the behavior change resources distributed. Periodic checks of participant logins to the online platforms, as well as the time spent in various sections of the platform, provide insight into participant engagement with these resources.

Fidelity monitoring pilot test

A pilot audit was conducted to test the feasibility of the intervention fidelity monitoring plan. The audit focused on three measures of fidelity: coaching call checklists, coaching call logs, and a review of the behavioral resource bank. A review of the coaching platform was also completed to determine current progress on fidelity of treatment receipt and treatment enactment. The two staff members who conducted the audit were not involved in the development of the intervention fidelity protocol or in intervention implementation. Because the pilot audit was conducted early in the study, when only a small number of participants (n=9) had been enrolled, it covered the charts of all currently enrolled participants rather than a 10% sample.

Results

The pilot audit included nine participant charts, covering a total of 104 weeks of intervention.

Coaching call checklists

Thirty-four coaching call checklists were reviewed to assess coaches’ preparation for calls, areas of behavior change covered during calls, goals and progress tracking, and use of MI techniques during calls (elements of fidelity of study design, provider training, and treatment delivery). Of the 34 calls reviewed, 5 (14.7%) were flagged as a possible fidelity protocol deviation. Three of the five were flagged due to incomplete documentation of the call, indicating that protocol may have been followed, but documentation was insufficient to confirm adherence.

Coaching call logs

Coaching call logs were reviewed to assess the completion rate of scheduled coaching calls (elements of fidelity of study design and treatment delivery). Of 84 scheduled calls, 78 (93%) were successfully completed, meaning the coach and participant completed a health coaching session. Of the six calls that were not completed, the missed call protocol was correctly followed four times, indicating that the telehealth coaches adhered to the protocol and the participant was unavailable at the scheduled time. For the two remaining calls, the documentation was not clear enough to determine whether the protocol was followed.

Coaching platform

The review of the coaching platform focused on two areas related to treatment dose: dietary prescriptions and coach-to-participant secondary contacts. All participants reviewed received dietary prescriptions as designed. A total of 103 secondary contact points were scheduled, and 77 (75%) were successfully made.

Behavioral resource bank

Auditors assessed the equity among resources available for participants in both the low carbohydrate and the low fat groups (fidelity of study design). All resources available for the intervention were found to be equal for both groups.
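As a quick consistency check, the proportions in this section can be recomputed from the raw counts reported above. The Python sketch below uses only those counts; the dictionary labels and the choice of one decimal place are illustrative.

```python
def percent(numerator, denominator):
    """Return numerator/denominator as a percentage rounded to one decimal."""
    return round(100 * numerator / denominator, 1)

# Counts taken from the pilot audit results: (numerator, denominator).
pilot_counts = {
    "checklists flagged as possible deviations": (5, 34),
    "scheduled coaching calls completed": (78, 84),
    "missed calls with protocol documented as followed": (4, 6),
    "secondary contacts made": (77, 103),
}

rates = {label: percent(n, d) for label, (n, d) in pilot_counts.items()}
for label, value in rates.items():
    print(f"{label}: {value}%")
```

Keeping the numerators and denominators together in one structure makes it straightforward to rerun the same summary at each monthly or quarterly audit.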

Discussion

Intervention fidelity is an important component of establishing the validity of any behavioral intervention, and it is particularly important for technology-based interventions given the inherent variability within and across these interventions. The purpose of this paper was to provide an overview of the intervention fidelity protocol for an on-going randomized controlled trial of a technology-based behavioral intervention for adults with spinal cord injury, and to discuss the plan for monitoring adherence to this protocol.

Monitoring plans for intervention fidelity within telehealth interventions must be developed early, and there must be flexibility to change fidelity strategies that are not working. While mapping the 24-START fidelity protocol onto the NIH BCC fidelity framework, investigators discovered areas of fidelity that had not been addressed. For example, during planning, it was discovered that no written protocol was in place to monitor primary contacts (phone calls) and secondary contacts (written messages to participants delivered via the coaching platform). The pilot audit also revealed that several areas of the protocol lacked adequate documentation. Specifically, a protocol was in place for handling missed phone calls, but there was no standard procedure for documenting it. The pilot audit indicated that the protocol was followed in four of six missed calls (67%); in the remaining two (33%), documentation was insufficient to determine how the coach handled the missed call. To address this, protocol developers revised documentation forms to clearly show adherence to the protocols.

Our fidelity monitoring plan also highlights the importance, for technology-based interventions, of including all members of the research team in fidelity protocol planning to ensure a clear understanding of the data points that will be required and the frequency with which data are needed. This includes members of the technology team, intervention staff, and scientific investigators. Our pilot audit revealed that some data collected directly from the coaching platform were in a format that could not be used for monitoring fidelity (e.g., total number of site visitors but not number of log-ins per participant). Collaboration with the technology team was imperative to fully implement the fidelity protocols and data collection tools needed. A clear understanding of how and when data will be collected from technology is especially important for health promotion interventions using publicly-available technology (wearable sensors, mobile apps, etc.), where raw usage-time data may not be available. Fidelity monitoring plans will need to consider what data are available, the format of these data, and whether proxy measures will be required.
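To illustrate the difference between an aggregate visitor total and the per-participant log-in counts needed for fidelity monitoring, the following Python sketch aggregates a hypothetical platform event export. The record format, field names, identifiers, and timestamps are all assumptions made for illustration; they do not describe the actual 24-START coaching platform.

```python
from collections import Counter

def logins_per_participant(events, enrolled_ids):
    """Count log-in events for each enrolled participant, including zeros."""
    counts = Counter(
        event["participant_id"] for event in events if event["event"] == "login"
    )
    return {pid: counts.get(pid, 0) for pid in enrolled_ids}

# Hypothetical export: field names and values are assumptions.
events = [
    {"participant_id": "P001", "event": "login", "timestamp": "2016-03-01T09:15:00"},
    {"participant_id": "P001", "event": "journal_entry", "timestamp": "2016-03-01T09:20:00"},
    {"participant_id": "P002", "event": "login", "timestamp": "2016-03-01T18:02:00"},
    {"participant_id": "P001", "event": "login", "timestamp": "2016-03-02T08:47:00"},
]
enrolled = ["P001", "P002", "P003"]

login_counts = logins_per_participant(events, enrolled)
total_logins = sum(login_counts.values())
print(login_counts, total_logins)
```

A site-wide total (here, three log-ins) would hide the fact that one enrolled participant never logged in, which is exactly the kind of engagement gap per-participant data can reveal.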

One advantage of telehealth interventions is the potential to automate fidelity strategies, which can lead to increased protocol adherence and reduced staff time for monitoring adherence. In 24-START, participant log-ins are tracked, and messages to participants are time-stamped, allowing for easier review of treatment dose. Additional automation, such as embedding coaching call checklists within the coaching platform, may further improve fidelity, but this capability was outside the budget and time constraints of the current study. We have addressed these constraints by automating some aspects of fidelity monitoring while continuing traditional manual monitoring of others. Reviewers conducting the pilot test completed the full review of all measures (web-based and paper-based) in approximately 1 hour, indicating that this combination of methods is feasible to maintain for the duration of the study.
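As an illustration of how time-stamped platform records could support automated review of treatment dose, the following minimal Python sketch tallies log-ins and coach messages per participant per week. It is not the 24-START implementation: the record layout, the event type names (`login`, `coach_message`), the participant IDs, and the function `weekly_dose` are all hypothetical and chosen only for this example.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical export rows: (participant_id, event_type, ISO timestamp).
# The layout and event names are illustrative assumptions, not the
# actual coaching platform schema.
events = [
    ("P01", "login", "2017-03-01T09:15:00"),
    ("P01", "coach_message", "2017-03-02T14:00:00"),
    ("P01", "login", "2017-03-08T18:30:00"),
    ("P02", "login", "2017-03-03T07:45:00"),
    ("P02", "coach_message", "2017-03-03T10:05:00"),
    ("P02", "coach_message", "2017-03-10T10:00:00"),
]


def weekly_dose(rows):
    """Count events per participant, keyed by (ISO year, ISO week) and event type."""
    counts = defaultdict(lambda: defaultdict(int))
    for participant_id, event_type, stamp in rows:
        iso = datetime.fromisoformat(stamp).isocalendar()
        week = (iso[0], iso[1])  # (ISO year, ISO week number)
        counts[participant_id][(week, event_type)] += 1
    # Convert nested defaultdicts to plain dicts for easier inspection.
    return {pid: dict(per_week) for pid, per_week in counts.items()}


if __name__ == "__main__":
    for pid, per_week in sorted(weekly_dose(events).items()):
        for (week, event_type), n in sorted(per_week.items()):
            print(pid, week, event_type, n)
```

A per-participant, per-week tally of this kind is the form of data the pilot audit found lacking when only site-wide visitor totals were available; an actual export would need to be agreed with the technology team.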

Consistent monitoring of fidelity throughout an intervention can provide valuable insight into whether the intervention is being carried out as intended, and will allow investigators to take necessary steps along the way to remedy any deviations from the protocol. All telehealth interventions should develop comprehensive intervention fidelity monitoring protocols to improve adherence and ultimately uphold the integrity of the study design. These protocols should be shared to aid others with protocol development and fidelity monitoring in the future.

Acknowledgements

Funding: This work was supported by the Eunice Kennedy Shriver National Institute of Child Health and Human Development [grant number K01HD079582].

Footnote

Conflicts of Interest: The authors have no conflicts of interest to declare.

Ethical Statement: The 24-START trial was approved by the Institutional Review Board of the University of Alabama at Birmingham (Protocol number 151001005), and all participants provide written informed consent prior to enrollment.

References

- Devito Dabbs A, Song MK, Hawkins R, et al. An intervention fidelity framework for technology-based behavioral interventions. Nurs Res 2011;60:340-7.

- Slaughter SE, Hill JN, Snelgrove-Clarke E. What is the extent and quality of documentation and reporting of fidelity to implementation strategies: a scoping review. Implement Sci 2015;10:129.

- Shingleton RM, Palfai TP. Technology-delivered adaptations of motivational interviewing for health-related behaviors: A systematic review of the current research. Patient Educ Couns 2016;99:17-35.

- Blackman KC, Zoellner J, Berrey LM, et al. Assessing the internal and external validity of mobile health physical activity promotion interventions: a systematic literature review using the RE-AIM framework. J Med Internet Res 2013;15:e224.

- Bellg AJ, Borrelli B, Resnick B, et al. Enhancing treatment fidelity in health behavior change studies: best practices and recommendations from the NIH Behavior Change Consortium. Health Psychol 2004;23:443-51.

- Miller W, Rollnick S. Motivational Interviewing: Helping People Change. 3rd ed. New York: The Guilford Press; 2013.

- Michie S, Wood CE, Johnston M, et al. Behaviour change techniques: the development and evaluation of a taxonomic method for reporting and describing behaviour change interventions (a suite of five studies involving consensus methods, randomised controlled trials and analysis of qualitative data). Health Technol Assess 2015;19:1-188.

Cite this article as: Sineath A, Lambert L, Verga C, Wagstaff M, Wingo BC. Monitoring intervention fidelity of a lifestyle behavioral intervention delivered through telehealth. mHealth 2017;3:35.