Evaluating and improving recruitment and retention in an mHealth clinical trial: an example of iterating methods during a trial

Introduction

Recruiting and retaining participants in clinical trials is of utmost importance to preserve the validity and reliability of research results, yet it remains a challenge for most researchers. Estimates indicate that only half of randomized controlled trials successfully recruit their proposed sample size, and only half of those do so on time (1). Investigations of effective recruitment and retention strategies are typically a byproduct of ongoing trials (2,3), but a few studies have specifically evaluated improvement strategies. The consensus based on current evidence is that effective recruitment and retention often require a structured, multifactor, and multilayered approach to reach a diverse audience (1,4,5). In fact, one review identified 137 strategies to recruit and retain participants, ranging from sophisticated marketing to simple appointment reminders (2). Barriers to both recruitment and retention include not just individual variables, such as lack of motivation to return, but also programmatic variables, such as the infrastructure and resources available to devote to such activities (6).

Some researchers have used social media, smartphone and web apps, and text messages as tools to improve recruitment and retention, and such web and mobile methods have demonstrated greater reach and speed (7-9). Because most people carry a smartphone, participants reached through mobile technologies are accessible at almost any time, and some researchers have noted improvement in reaching hard-to-reach or at-risk populations (8,10). Health measurement and intervention delivered through mobile platforms are attractive most notably for their scalability and cost, but they also present novel opportunities in the mHealth clinical trial space. For example, apps that collect intensive longitudinal data make continuous monitoring of participant engagement possible. The speed with which web and mobile apps can be modified also allows rapid testing and iteration to optimize a desired outcome (11). Despite these opportunities, there is a paucity of research reporting on, much less attempting, the optimization of recruitment and retention in mHealth clinical trials.

However, mobile and remote methods also raise novel concerns, such as decreased commitment to engagement during a longitudinal trial, the potential for fake or duplicate participants, and the potential for decreased efficacy, all of which threaten the validity of research findings (9,12-14). These recruitment and retention threats are greater when all study procedures are delivered remotely. While remote delivery may be more convenient for both participants and study staff, it can make both enrollment and withdrawal far too easy, decreasing the commitment participants feel to the clinical trial. Thus, an examination of the factors associated with recruitment and retention in clinical trials that use web and mobile strategies is warranted.

The purpose of the present work is to describe an evaluation and iteration of recruitment and retention methods during an mHealth clinical trial, with the goal of shortening the recruitment period and improving retention of randomized participants. To our knowledge, this is the first study to report a systematic evaluation of methods during an mHealth trial, and the first to iterate methods during the trial and evaluate the effects of those modifications on the recruitment and retention of participants.

The NUYou study

NUYou (clinicaltrials.gov #NCT02496728) was a cluster randomized longitudinal trial with a primary aim of testing a remotely delivered mHealth intervention to preserve and promote cardiovascular health (CVH), compared with an mHealth intervention targeting other, non-CVH behaviors. The study was approved by the Northwestern University Institutional Review Board (STU00200663). The recruitment goal for NUYou was 500 incoming freshmen; of the 1,985 students in the incoming class, 150 enrolled in the trial.

NUYou recruitment

The initial recruitment tactics for the NUYou research study during the Fall of 2015 were as follows. During the summer, a letter and brochure about the study were included in the packet sent by the university to incoming freshmen. A banner was hung along the traditional entrance to campus, and flyers were hung in dorm entrances, classroom buildings, libraries, and the student center. A colorful email was sent to all freshmen in late August, late September, and mid-November. Posts were made in Northwestern’s Facebook group for incoming students. Recruitment tables were set up at an opening week event for club sports, outside dorm areas and dining halls, and in the student center. Further recruitment efforts later in the quarter included placing postcards in every freshman mailbox; contacting dorm advisors, peer advisors, and professors teaching freshman seminars; and placing advertisements in the university newspaper, on monitors in the student center, and on all tables in all dining halls.

Recruitment materials directed students to a study website that linked to an online screening survey. Eligible students were sent an email with an informational video and a link to the study consent. Once a student completed informed consent, another email was sent with instructions for accessing an online baseline survey. Once the survey was completed, participants were sent a link to an online scheduling site to schedule an in-person health assessment. Reminders were sent by email or phone call if participants had consented but did not complete the study survey or schedule a health assessment.
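The Phase I enrollment sequence and its reminder rule can be sketched as an ordered list of steps. This is a minimal illustration, not the study's software: the step labels, the `Participant` record, and the helper functions are hypothetical, and the sketch assumes steps are completed in the order described above.

```python
from dataclasses import dataclass, field
from typing import Optional, Set

# Hypothetical labels for the Phase I (cohort 1) enrollment steps, in order.
STEPS = ["screened_eligible", "consented", "baseline_survey_done", "assessment_scheduled"]


@dataclass
class Participant:
    pid: str
    completed: Set[str] = field(default_factory=set)  # names of finished steps


def next_step(p: Participant) -> Optional[str]:
    """Return the first step not yet completed, or None if enrollment is finished."""
    for step in STEPS:
        if step not in p.completed:
            return step
    return None


def needs_reminder(p: Participant) -> bool:
    """Reminder rule from the text: the participant has consented, but the
    baseline survey or health-assessment scheduling is still outstanding."""
    return "consented" in p.completed and next_step(p) is not None
```

Each extra step between consent and scheduling is another point where `needs_reminder` can fire, which is one way to see why consolidating the steps might reduce attrition.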

Participants were asked to attend an in-person session to have height, weight, blood pressure, cholesterol, glucose, and carbon monoxide levels measured. Additionally, participants were asked to download, and learn how to use, a smartphone application customized to their randomization group. Participants were asked to attend another in-person health assessment at the beginning of their sophomore and junior years. To incentivize use of the app, participants were asked about their four target health behaviors each week and were sent a university logo item (swag) for each month in which they answered every question. To incentivize longer-term health behaviors, participants were told that they would receive one raffle ticket for each health behavior they kept at an ideal level, to be entered into a drawing for $150 in their sophomore year and $250 in their junior year.

The NUYou study was cluster randomized by dormitory. Students living in dorms randomized to the CVH intervention received an app that allowed them to monitor four health behaviors: diet, physical activity, weight, and smoking. Students living in dorms randomized to the Whole Health (WH) intervention received an app that allowed them to monitor four non-CVH behaviors: hydration, sun protection, safe sex, and safe transportation. Lastly, each group was invited to a separate secret Facebook group, where a daily post about one or more of the assigned behaviors was presented and participants could comment on, discuss, like, and share content about their target health behaviors.
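Cluster randomization assigns whole dormitories, rather than individual students, to an arm. The paper does not describe its allocation procedure, so the following is only a generic sketch: the function names, the seed, and the round-robin balancing are assumptions.

```python
import random
from typing import Dict, Iterable, Sequence


def cluster_randomize(dorms: Iterable[str],
                      arms: Sequence[str] = ("CVH", "WH"),
                      seed: int = 2015) -> Dict[str, str]:
    """Randomly assign each dormitory (cluster) to one arm.

    Dorms are shuffled with a seeded generator, so the allocation can be
    reproduced and audited, and are then dealt to the arms in turn, which
    keeps the number of dorms per arm within one of each other.
    """
    rng = random.Random(seed)
    order = list(dorms)
    rng.shuffle(order)
    return {dorm: arms[i % len(arms)] for i, dorm in enumerate(order)}


def arm_for_student(dorm: str, allocation: Dict[str, str]) -> str:
    """Every resident inherits the arm assigned to their dorm."""
    return allocation[dorm]
```

Balancing the number of dorms per arm does not balance the number of students per arm; a trial with dorms of very different sizes might instead stratify or match clusters by size.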

Interim recruitment and retention results

Although the project proposed to recruit 500 of the 1,985 incoming freshmen during the fall quarter, the study had to cut off recruitment in December 2015 at only 150 participants. In January and February of 2016, recruitment, app use, and completion of weekly behavior queries were examined. In both months, 44 of 150 participants (29%) had used the app during the month. During the same months, 15% and 20%, respectively, had answered all four weekly behavior queries needed to receive the swag incentive. Thus, a plan to develop and evaluate recruitment and retention methods in two phases was initiated.

Phase I

Methods

Implementation of a retention protocol

Beginning in March of 2016, a retention protocol was implemented based on a participant’s app use. After 1 week of no app use, participants were sent a text. If app nonuse continued, a second text was sent the following week. Continued nonuse resulted in a phone call the third week, an email the fourth week and a postcard the fifth week. Other retention tactics included email greetings for birthdays, holidays, and significant NU events.
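The escalation schedule above maps naturally onto a lookup from consecutive weeks of nonuse to an outreach channel. This is a minimal sketch, assuming a daily log of app opens and a check run once per week; the function names are hypothetical, and because the text does not say what happens after the fifth week, the sketch simply returns no further contact.

```python
from typing import Dict, Optional, Sequence

# Phase I ladder (from March 2016): week of consecutive nonuse -> contact channel.
PHASE1_LADDER: Dict[int, str] = {
    1: "text message",
    2: "text message",
    3: "phone call",
    4: "email",
    5: "postcard",
}


def weeks_of_nonuse(daily_use: Sequence[bool]) -> int:
    """Whole weeks of consecutive nonuse at the end of a daily log (True = app opened)."""
    idle_days = 0
    for used in reversed(daily_use):
        if used:
            break
        idle_days += 1
    return idle_days // 7


def outreach_due(daily_use: Sequence[bool],
                 ladder: Dict[int, str] = PHASE1_LADDER) -> Optional[str]:
    """Contact to make at this weekly check, or None (recent use, or ladder exhausted)."""
    return ladder.get(weeks_of_nonuse(daily_use))
```

Passing the ladder as a parameter lets a revised ordering of channels be swapped in without touching the nonuse logic.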

Recruitment surveys

All Northwestern University freshmen who had not filled out the screening survey for the NUYou research study (group A), and those who had been screened but not randomized (group B), were sent an email asking them to complete an online consent and a survey in REDCap (15) about what they remembered of the recruitment efforts for the NUYou study, what interested them about the study, and why they decided not to participate. Two reminder emails were sent a week apart. Participants were first asked whether they remembered seeing each of the recruitment tactics used; an image of each tactic was included with the corresponding question. Multiple-choice questions asked how participants heard about the study, what they remembered about the study, what deterred them from joining, what interested them in the study, and what would have motivated them to sign up. Participants in group B, who had filled out the screener and been sent a consent form and video describing the study requirements, were additionally asked which requirements deterred them from continuing with the study. Group B was also asked about their perceptions of accessing the consent and health survey. Participants who signed the consent and filled out the health questionnaire, but did not continue further, were asked their opinion of the health questionnaire and of the follow-up contact from study staff. Open-ended questions asked about the health questionnaire and what would have made participation more appealing and easier. Participants were incentivized to complete the survey with a swag item of their choice.

Usability surveys

All participants enrolled in the NUYou study were sent an email asking them to complete an online consent and a survey in REDCap (15) about the usability and usefulness of the smartphone application. Participants were asked about their satisfaction with the app's function and design, its motivating value, its effectiveness, its perceived usefulness, and their perceived improvement in health behaviors. Additionally, participants were asked to rate possible features they would be interested in having incorporated in a future update of the app. Participants were incentivized to complete the survey with a swag item of their choice.

Analysis

Survey results were visualized using REDCap’s reporting functions and used to determine changes to be made for the remainder of the trial.

Results

Retention protocol implementation

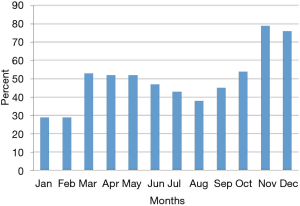

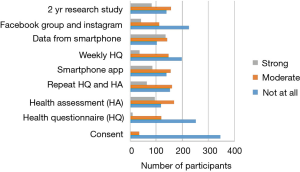

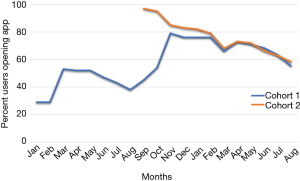

Efforts implemented in March 2016 resulted in an increase in the proportion of participants using the app from 29% to 53% (Figure 1).

Recruitment

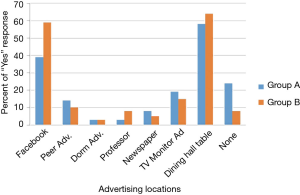

A total of 366 of the 1,683 possible participants in group A, and 39 of the 133 possible participants in group B, provided informed consent and answered the survey. More participants remembered hearing about the study through dining hall advertisements than through any other advertising method, and Facebook was the second most remembered advertising tactic (Figure 2).

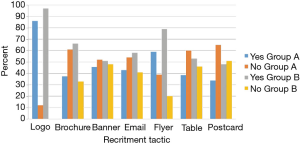

The NUYou logo was remembered by an average of 87% of survey respondents across both groups (Figure 3). The flyers posted around campus were also well remembered, with recall rates of 59% for group A and 79% for group B. The brochure mailed to all incoming freshmen was remembered by only 30% of freshmen who did not fill out the web screener, but by 66% of those who did. Emails, postcards, and the banner (despite its being hung for only 1 week) were also somewhat memorable. Newspaper and student center monitor advertisements were not memorable, despite their high cost.

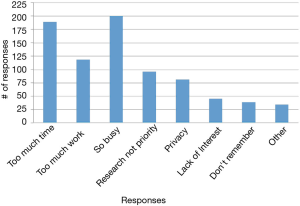

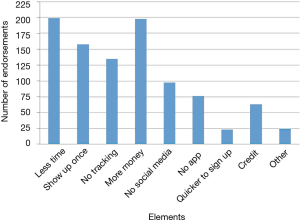

The reasons students chose most frequently for not signing up were “too busy,” “too much work,” and “study took too much time” (Figure 4). In the open-ended questions about what kept participants from continuing with the study or what would have made participation easier, a “blood draw” was a recurring deterrent, even though the procedure was actually a fingerstick. Other moderate to strong deterrents were data collection from the phone, using a smartphone app, the in-person health assessment, and the length of the study (Figure 5). Students indicated that less commitment, less time required, and more money would have motivated them to join the study (Figure 6).

Usability

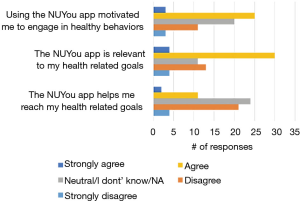

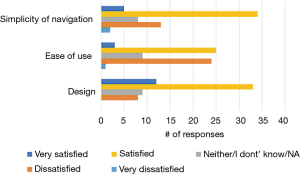

Among the 150 NUYou participants, 65 completed the usability survey (38/77 in CVH and 27/71 in WH). Results regarding satisfaction with features were mixed. Although most respondents indicated that the app was relevant to their health goals (52%), fewer than half (43%) indicated that it was motivating, and only 21% agreed that the app helped them reach their health goals (Figure 7). However, participants had positive impressions of the app's usability. Most were satisfied or very satisfied with the design and the simplicity of navigating the app, and participants were generally neutral or satisfied with its ease of use (Figure 8). Some participants (n=34) endorsed wanting a gamification element added to the smartphone application. When asked what features they would like to see added, participants requested in free-text responses other health tracking features, features related to stress, connection or integration with a wearable device and, most notably, a water counter.

Phase II

Methods

Changes to recruitment

In year 2 of the NUYou research study, a second cohort of participants (cohort 2) was recruited. Recruitment strategies were trimmed to focus effort on the most memorable strategies. Flyers went up on all residence hall floors during the welcome weekend for freshmen. Advertisements on dining hall tables were placed in the second week and remained there for 1 month. Recruitment table efforts were concentrated in the first 2 weeks. Emails were sent in the second week of the quarter, again in October, and twice in November. Postcards were mailed in the second week. The description of study components in recruitment materials was simplified (e.g., “fingerstick” and “blood” were replaced with “glucose and cholesterol tests”). Furthermore, study enrollment steps were consolidated so that a participant could receive an email, click through to the website, complete the consent and baseline survey, and schedule an appointment in one continuous sequence. Before returning for their follow-up health assessments, cohort 1 participants were also emailed a summary of changes to the study, along with links to the study survey and scheduling software.

Changes to the smartphone application

For the Fall of 2016, changes were made to the study application based on the usability survey results. An in-app game was added for the dual purpose of providing a fun activity and a crude measure of cognitive performance, something of interest to students. To provide more useful features for the WH group in particular, a self-monitoring feature for hydration was added. Because participants expressed concern about their emotions, additional weekly questions and daily self-monitoring features were added for stress and happiness levels. Rather than yes/no answers, responses were made on a slider with five levels. Finally, all participants were given feedback about their behaviors, stress, happiness, and game performance in a consolidated graph to help them see connections between health behaviors, emotions, and cognitive performance.

Changes to incentive structure

Participants indicated that many had forgotten, or were not motivated by, the long-term raffle incentive structure of the study. Thus, two significant changes were made. To both incentivize attendance at the in-person session and facilitate easier tracking of at least one health behavior, all participants were given a wireless tracking device. The CVH group was given a Fitbit Zip (Fitbit, Inc., San Diego, CA, USA) to track physical activity, and the WH group was given a HydraCoach (HydraCoach, Irvine, CA, USA) to track the amount of water consumed.

To incentivize greater use of the app and the secret Facebook groups, a loss aversion incentive structure was implemented (16). The app was modified to include a page showing a bank in which each participant started with $110. Participants lose $0.10 for each day they do not open the app, $0.10 for each day they do not track any behavior in the app, and $0.70 for each week they do not post in the secret Facebook group. The app page keeps a running tally of each non-action and the corresponding money lost, as well as the remaining balance. At the next annual in-person visit, the participant receives the amount left in the bank.
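The bank arithmetic can be made concrete with a small calculator. This is a sketch under stated assumptions, not the app's code: it works in integer cents to avoid rounding error, treats the daily and weekly penalties as independent, and clamps the balance at zero, which the text does not specify. The function name is hypothetical.

```python
from typing import Iterable, Tuple

START_CENTS = 11_000  # $110.00 opening balance
NO_OPEN_CENTS = 10    # $0.10 per day the app is not opened
NO_TRACK_CENTS = 10   # $0.10 per day no behavior is tracked
NO_POST_CENTS = 70    # $0.70 per week without a secret-group post


def remaining_cents(days: Iterable[Tuple[bool, bool]],
                    weeks_posted: Iterable[bool]) -> int:
    """days: one (opened_app, tracked_behavior) pair per day.
    weeks_posted: one flag per week (True = posted in the Facebook group).
    Returns the balance, in cents, to be paid at the next annual visit."""
    loss = 0
    for opened, tracked in days:
        if not opened:
            loss += NO_OPEN_CENTS
        if not tracked:
            loss += NO_TRACK_CENTS
    loss += NO_POST_CENTS * sum(1 for posted in weeks_posted if not posted)
    return max(START_CENTS - loss, 0)  # assumption: the balance cannot go negative
```

For example, a participant who opens the app and tracks a behavior every day but skips posting for 10 weeks keeps 11,000 - 700 = 10,300 cents ($103.00). Over an assumed 365-day, 52-week interval, the worst case loses 365 × $0.20 + 52 × $0.70 = $109.40, leaving $0.60, so the clamp would only matter for longer intervals.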

Changes to the retention protocol

Use of the mobile app, as well as adherence to answering weekly questions, was tracked. After 1 week of no app use, all participants were sent a text message. If app nonuse continued, an email was sent the following week. A phone call was attempted in the third week of nonuse, a Facebook message in the fourth week, and a postcard in the fifth week. Participants who did not answer the weekly questions every week were sent a reminder at the beginning of the next month. General reminders to answer questions were also posted on Facebook at the beginning of each month.
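The monthly reminder rule for weekly questions, together with the share of participants answering every weekly query in a month (the criterion for the monthly swag item), can be sketched as follows. The data layout, a per-participant list of weekly flags for one month, and the function names are hypothetical.

```python
from typing import Dict, List


def to_remind(weekly_answers: Dict[str, List[bool]]) -> List[str]:
    """IDs of participants who missed at least one week's questions this month;
    they receive a reminder at the start of the next month."""
    return sorted(pid for pid, weeks in weekly_answers.items() if not all(weeks))


def full_compliance(weekly_answers: Dict[str, List[bool]]) -> float:
    """Share of participants who answered the questions in every week of the month."""
    if not weekly_answers:
        return 0.0
    done = sum(1 for weeks in weekly_answers.values() if all(weeks))
    return done / len(weekly_answers)
```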

Analysis

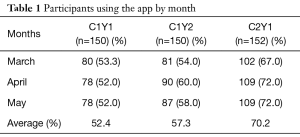

Recruitment for cohort 2 was compared with that of cohort 1 in terms of how many participants could be recruited and in what time frame. Retention was examined over time within cohort 1 and compared between cohorts. Average app use and responses to weekly behavior queries from March through May were compared between cohort 1 year 1 (C1Y1) and cohort 1 year 2 (C1Y2), and between C1Y1 and cohort 2 year 1 (C2Y1), using tests of proportions.
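A two-sided two-proportion z-test with a pooled standard error is sketched here using only the standard library. The paper does not state which variant of the test was used or how monthly proportions were aggregated, so the pooled form and the function name are assumptions, and this simple test treats observations as independent.

```python
import math
from typing import Tuple


def two_proportion_z_test(x1: int, n1: int, x2: int, n2: int) -> Tuple[float, float, float]:
    """Test H0: p1 == p2 for x1/n1 versus x2/n2.

    Returns (p1 - p2, z, two-sided P), with the standard error computed
    from the pooled proportion under the null hypothesis.
    """
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    if se == 0:
        raise ValueError("pooled proportion is 0 or 1; z is undefined")
    z = (p1 - p2) / se
    # Two-sided P = 2 * (1 - Phi(|z|)) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p1 - p2, z, p_value
```

As a check on the P-value formula, `math.erfc(abs(z) / math.sqrt(2))` gives about 0.23 for z=1.202 and about 0.0015 for z=3.176, in line with the values reported in the Results.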

Results

Recruitment for cohort 2

Recruitment materials were each given a different traceable website link. This allowed staff to track which recruitment materials were effective in bringing students to the website without having to survey participants again. A total of 1,998 freshmen entered Northwestern in the fall of 2016. Of those, 257 students read the REDCap consent form, 227 consented to the study, and 153 enrolled within a 2-month period, compared with the 3 months it took to recruit cohort 1.
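Channel-specific links can be approximated without any particular shortening service by tagging a landing URL with a source parameter and counting tags in the visit log. The study itself used bit.do short links; this sketch swaps in a query-string tag, and the base URL, the `src` parameter, and the function names are placeholders. The conversion helper is illustrated with the cohort 2 counts reported above.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple
from urllib.parse import parse_qs, urlencode, urlparse

BASE_URL = "https://example.org/nuyou"  # placeholder, not the study's real address


def tracked_link(channel: str) -> str:
    """Distinct landing URL for one recruitment material (e.g. 'email1', 'postcard')."""
    return f"{BASE_URL}?{urlencode({'src': channel})}"


def visits_by_channel(visit_urls: Iterable[str]) -> Counter:
    """Count logged landing-page visits per channel tag ('untagged' if none)."""
    counts: Counter = Counter()
    for url in visit_urls:
        tags = parse_qs(urlparse(url).query).get("src")
        counts[tags[0] if tags else "untagged"] += 1
    return counts


def step_conversion(stages: List[Tuple[str, int]]) -> Dict[str, float]:
    """Fraction of people at each funnel stage who reach the next one."""
    return {nxt: n_next / n_prev
            for (_, n_prev), (nxt, n_next) in zip(stages, stages[1:])}
```

With the cohort 2 counts, `step_conversion([("read consent", 257), ("consented", 227), ("enrolled", 153)])` gives about 0.883 for consent and 0.674 for enrollment; by the same arithmetic, 42 of the 101 visitors from the first email (about 42%) continued to the consent form.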

Emails, sent to all incoming freshmen, were the most effective recruitment tool for bringing participants to the website. The first email brought 101 potential participants to the website, and 42 of those continued to the consent form on REDCap. The mailed postcards and posted flyers were the most successful non-email recruiting tactics. Although the dining hall tabletop advertisement was one of the most memorable recruiting tools for cohort 1, it was not the most effective tool for cohort 2.

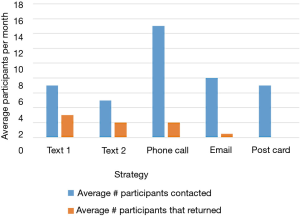

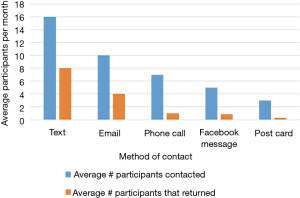

Retention

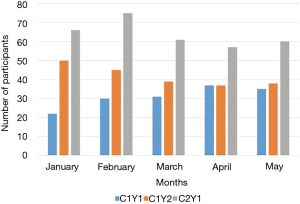

Despite fewer than 40% of participants actively using the app by the end of the summer, 123 of 150 participants (82%) in cohort 1 completed their follow-up health assessments and study survey. App use rebounded to almost 80% by November, after the follow-up assessments, when cohort 1 received the updated app (Figure 9). Figure 10 illustrates the average number of participants contacted via each retention strategy and the average number who responded by returning to app use each month. Although the average number of contacts made per month did not change from the first to the second iteration of the retention protocol, participants returned earlier and in greater numbers under the second iteration (Figure 11). As shown in Table 1, there was no significant difference in average app use between years 1 and 2 for cohort 1 (z=1.202, P=0.23). However, average app use in cohort 2 during its first year was higher than that of cohort 1 in its first year (difference of 17.8%, z=3.176, P=0.0015).


As illustrated in Figure 12, weekly behavior query responses improved for cohort 1 in year 2 compared with year 1 (z=1.988, P=0.049). Behavior query responses were also better for cohort 2 in year 1 than for cohort 1 in year 1 (difference=21.3%, z=3.97, P=0.0001).

Discussion

mHealth trials present challenges to participant recruitment and retention that are both similar to and distinct from those of traditional clinical trials. However, technology can also provide new opportunities for investigating and improving clinical trial research. In the present investigation, recruitment, use of the app, adherence to the study protocol, and retention in the trial were examined during the study and found to be below expectations. Following an evaluation, improvements were made to the retention protocol, recruitment strategies, and smartphone application during the trial. Herein we evaluated the outcomes of these changes and demonstrated quicker accrual of the same sample size, higher use of the app, and higher compliance with completing weekly questions about behavior when comparing within and between cohorts. Furthermore, fears that participants who had discontinued use of the app would not return for follow-up visits were unfounded: despite fewer than 40% of participants using the app in the month prior to the annual in-person visit, 82% of participants returned to be assessed.

While changing methods during the course of a clinical trial may be uncommon, and even frowned upon, it is important to note that none of the core methods of the intervention, the randomization structure, or the primary outcome measures were changed, preserving the ability to carry out the primary aims of the trial. Instead, by focusing on protocols and processes that are peripheral to the intervention but crucial to trial management, we were able to optimize our systems, recruit a second cohort more efficiently, and make significant improvements in engaging participants with the intervention as delivered through our smartphone application.

There are some important lessons learned from this evaluation. Regarding recruitment, we were surprised to learn that the most memorable recruitment strategy in the first year, tabletop advertisements, did not yield our highest enrollment in year 2. We surmise that the method most memorable in a recall survey is not necessarily the most successful one, as measured by the bit.do links. Thus, we conclude that it is critically important for clinical trials to create unique links for each recruitment strategy, to learn which strategies are most successful and which are most cost efficient. In our case, tabletop advertisements were costly and could have been eliminated without significant detriment.

Interpretation of recruitment materials also appeared to be a critical element in a student’s likelihood of moving through the enrollment process. Despite having students co-create these recruitment materials, we found many potential participants had misconceptions about the study components in the first year. Varying recruitment material content and wording during the development phase and directly assessing participant interpretations and recall of study elements may have prevented these misconceptions.

The study application was initially co-created and designed with students based on formative work that consisted primarily of focus groups. Although students indicated that they would use certain features of the app, app use data from the initial months of the trial indicated that this was not the case. Further surveys revealed that, although many students found the app relevant to their health goals, they did not believe it was doing much to aid behavior change. In reviewing previous focus group data and following up with students via survey, we determined that the health behaviors were not being linked to the behaviors and emotions students were acutely experiencing, such as high stress and the desire to perform well academically. Therefore, significant changes to the self-monitoring and feedback features were made to accommodate alternative motivations for engaging in health behaviors. Additionally, outreach by study staff through text messages, phone calls, emails, Facebook Messenger, and postcards resulted in an increase in app use. This suggests that, consistent with other research, human outreach may be necessary to sustain engagement in remotely delivered mHealth interventions (17).

Finally, it was clear that a long-term incentive structure, in which rewards were not salient during the year, needed to be changed to incentivize proximal behaviors such as app use and Facebook posts. The loss aversion technique, consistent with behavioral economics theory (18), may be the single most important strategy responsible for the improvement in app use. Interestingly, although the incentive for completing weekly questions was not changed, we observed an increase in weekly responses both between years within cohort 1 and between the first years of the two cohorts. This suggests a snowball effect, in which micro-incentives in one element of an mHealth study have a positive effect on adherence to other elements; this effect may warrant further investigation in future studies.

This evaluation is not without its limitations. While we did re-engage some nonadherent participants, many students did not respond to surveys, and those students may be the ones from whom information is most needed. Further, while a loss aversion incentive structure appears to be working well in this context, it could be a costly component that is not feasible in many settings. Finally, in an ideal environment, we might iterate features of the app more quickly, perform A/B testing, and find optimal features to improve use and compliance for each individual. However, cost constraints prevented this from being done on an ongoing basis in this project.

Researchers, particularly those conducting mHealth clinical trials, should strongly consider systematically tracking and evaluating recruitment and retention methods during their trials. Furthermore, given the flexibility of mobile technologies, researchers could be more flexible and open to modifying the strategies deployed during a trial, thereby reducing the threat of poor recruitment and retention.

Acknowledgements

The authors acknowledge the assistance from other study staff in conducting this study, most notably Gleb Iakovlev, JC Subida, Sean Arca, Tiara Adams and Gwen Ledford.

Funding: This work was supported by the American Heart Association Strategically Focused Research Prevention Network (#14SFRN20740001); J.R. Albert Foundation, Inc.; National Institutes of Health’s National Center for Advancing Translational Sciences (UL1TR001422).

Footnote

Conflicts of Interest: The authors have no conflicts of interest to declare.

Disclaimer: The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

References

- Fletcher B, Gheorghe A, Moore D, et al. Improving the recruitment activity of clinicians in randomised controlled trials: a systematic review. BMJ Open 2012;2:e000496.

- Bower P, Brueton V, Gamble C, et al. Interventions to improve recruitment and retention in clinical trials: a survey and workshop to assess current practice and future priorities. Trials 2014;15:399.

- Goldberg JH, Kiernan M. Innovative techniques to address retention in a behavioral weight-loss trial. Health Educ Res 2005;20:439-47.

- Fisher L, Hessler D, Naranjo D, et al. AASAP: a program to increase recruitment and retention in clinical trials. Patient Educ Couns 2012;86:372-7.

- Nicholson LM, Schwirian PM, Klein EG, et al. Recruitment and retention strategies in longitudinal clinical studies with low-income populations. Contemp Clin Trials 2011;32:353-62.

- Gul RB, Ali PA. Clinical trials: the challenge of recruitment and retention of participants. J Clin Nurs 2010;19:227-33.

- van der Kop ML, Ojakaa DI, Patel A, et al. The effect of weekly short message service communication on patient retention in care in the first year after HIV diagnosis: study protocol for a randomised controlled trial (WelTel Retain). BMJ Open 2013;3.

- Lane TS, Armin J, Gordon JS. Online Recruitment Methods for Web-Based and Mobile Health Studies: A Review of the Literature. J Med Internet Res 2015;17:e183.

- Becker S, Miron-Shatz T, Schumacher N, et al. mHealth 2.0: Experiences, Possibilities, and Perspectives. JMIR Mhealth Uhealth 2014;2:e24.

- Laws RA, Litterbach EK, Denney-Wilson EA, et al. A Comparison of Recruitment Methods for an mHealth Intervention Targeting Mothers: Lessons from the Growing Healthy Program. J Med Internet Res 2016;18:e248.

- Jacobs MA, Graham AL. Iterative development and evaluation methods of mHealth behavior change interventions. Curr Opin Psychol 2016;9:33-7.

- Dennison L, Morrison L, Conway G, et al. Opportunities and challenges for smartphone applications in supporting health behavior change: qualitative study. J Med Internet Res 2013;15:e86.

- Helander E, Kaipainen K, Korhonen I, et al. Factors related to sustained use of a free mobile app for dietary self-monitoring with photography and peer feedback: retrospective cohort study. J Med Internet Res 2014;16:e109.

- Pellegrini CA, Pfammatter AF, Conroy DE, et al. Smartphone applications to support weight loss: current perspectives. Adv Health Care Technol 2015;1:13-22.

- Harris PA, Taylor R, Thielke R, et al. Research electronic data capture (REDCap)--a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform 2009;42:377-81.

- Camerer C. Three Cheers--Psychological, Theoretical, Empirical--For Loss Aversion. J Mark Res 2005;42:129-33.

- Wojtowicz M, Day V, McGrath PJ. Predictors of participant retention in a guided online self-help program for university students: prospective cohort study. J Med Internet Res 2013;15:e96.

- Thorgeirsson T, Kawachi I. Behavioral economics: merging psychology and economics for lifestyle interventions. Am J Prev Med 2013;44:185-9.

Cite this article as: Pfammatter AF, Mitsos A, Wang S, Hood SH, Spring B. Evaluating and improving recruitment and retention in an mHealth clinical trial: an example of iterating methods during a trial. mHealth 2017;3:49.