The development, usability, and reliability of the Electronic Patient Visit Assessment (ePVA) for head and neck cancer

Introduction

Annually, more than 65,000 persons are diagnosed with head and neck cancer in the United States (US) (1). Head and neck cancers arise from the oral cavity, pharynx, larynx, nasal cavity, paranasal sinuses, and salivary glands. The treatment of head and neck cancer is determined by the specific site of disease, stage of cancer, and pathologic findings (2). In general, 30% to 40% of those diagnosed with early stage head and neck cancer receive single-modality treatment of surgery or radiation therapy, whereas 60% of those diagnosed with locally or regionally advanced disease receive combined therapy of surgery, radiation, and chemotherapy (2). The survival rate for head and neck cancer has steadily improved over the past 20 years; nearly two-thirds of patients diagnosed with head and neck cancer will live at least five years (3). At the same time, treatment has intensified, increasing the symptom burden from cancer and its treatment. During treatment, up to 50% of patients become severely symptomatic with pain, fatigue, mouth sores, and excessive oral mucus (4). Consequently, patients’ ability to perform daily activities and to interact socially is impaired, leading to treatment interruptions such as skipped treatments, reduced doses of chemotherapy or radiotherapy, or even premature termination of treatment. A retrospective study of a symptom management program for patients undergoing combined chemotherapy and radiation therapy showed that approximately 20% of participants had unexpected hospitalizations related to symptoms due to treatment toxicity and 25% had chemotherapy dose reductions (5). Long-term complications from head and neck cancer and its treatment include lymphedema, fibrosis, dysphagia, and musculoskeletal impairment (6), which can reduce patients’ ability to perform daily activities and interact socially, resulting in decreased quality of life.

An ongoing, pragmatic, clinically useful assessment is needed to evaluate patients’ symptoms and functional limitations in head and neck cancer to aid in identification of patients who are at risk for delayed or premature termination of treatment, hospitalizations, and long-term functional limitations due to late and long-term symptoms. A pragmatic assessment is defined as a simple touch screen questionnaire that patients can complete in less than 10 minutes while waiting to see their healthcare providers during clinic visits with a goal to minimize patients’ burden. To address this need for a clinically useful assessment, we developed the Electronic Patient Visit Assessment (ePVA) that enables patients to report 42 symptoms related to head and neck cancer and 17 limitations of functional status. This manuscript reports (I) the development of the ePVA, (II) the content validity of the ePVA, and (III) the usability and reliability of the ePVA. The ePVA was developed and tested using an iterative process to achieve an efficient, satisfactory experience for the end users.

Methods

Phase I: development of the ePVA

Theoretical framework

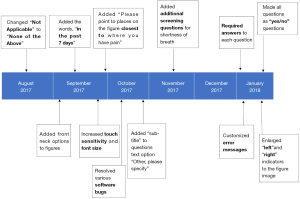

In developing the ePVA, the Theory of Unpleasant Symptoms guided the characterization of the two key concepts of the ePVA, symptoms and functional status (see Figure 1) (7). This theory conceptualizes the symptom experience as an interaction among symptoms, functional status, and influencing factors that may heighten or diminish symptoms. Symptoms were defined as a multidimensional experience of perceived indicators of abnormal biological or physiological changes (8-11). The dimensions common across all symptoms were distress, timing, intensity, and quality (7). The theory also depicts symptoms as occurring alone or in clusters of co-occurring symptoms, creating an additive or even multiplicative impact for the patient (7). Most importantly, the Theory of Unpleasant Symptoms portrays functional status as a result of the impact of symptoms. Functional status was defined as the ability to perform activities to meet basic needs, fulfill usual roles, and maintain health and well-being (12). Together, symptoms and functional status influence a person’s quality of life (13).

Rigorous systematic literature review

The first step to developing a pragmatic, clinically useful assessment is to identify valid and reliable instruments. Accordingly, we conducted a rigorous systematic literature search of Health and Psychosocial Instruments, PubMed, CINAHL, and PsycInfo databases to identify the instruments that assessed symptoms and functional status in patients with head and neck cancer (Van Cleave JH, Fu MR, Persky MS, et al., unpublished manuscript). This search identified 80 articles that described studies using 112 instruments to assess symptom and functional status in patients with head and neck cancer published between January 1, 2005 and November 1, 2015. These articles consisted primarily of longitudinal/prospective studies (37 of 80, 46%) or cross-sectional studies (24 of 80, 30%), and included participants diagnosed with head and neck cancer from all sites (50 of 80, 63%).

From this systematic review, we found that valid and reliable quality of life instruments exist, such as the European Organization for Research and Treatment of Cancer Quality of Life Questionnaire-H&N35 (14) or the Functional Assessment of Cancer Therapy-Head and Neck (15). We also found that these instruments had properties that limit their use in head and neck cancer clinical practice and research. For example, many instruments were lengthy, complicated, and intended for general cancer populations, which posed unneeded respondent burden for head and neck cancer patients. The instruments contained assumptions on associations between specific symptoms and functional status limitations (e.g., “I cannot eat because of pain”). These assumptions potentially introduced unintentional bias into outcomes. Moreover, the instruments did not fully capture the long-term symptom experience and functional status limitations after treatment for head and neck cancer.

We also investigated the use of measures from two National Institutes of Health initiatives: the Patient-Reported Outcomes Measurement Information System (PROMIS) Initiative (16) and the Patient Reported Outcomes-Common Terminology Criteria for Adverse Events (PRO-CTCAE) (17,18). These measures consist of psychometrically tested item banks. For the PROMIS item banks, we found that the measures lacked head and neck cancer-specific symptom and function items (e.g., speech, dysphagia, oral pain) (16,19). For the PRO-CTCAE, we found that the items were useful for adverse event reporting during clinical trials, but may not capture the long-term symptoms and functional status limitations experienced by patients with head and neck cancer (18). Hence, there was an evident need for a pragmatic, patient-reported assessment, easy for patients with head and neck cancer to use, for early detection of symptoms and functional status limitations in clinical practice and research.

Identification of items

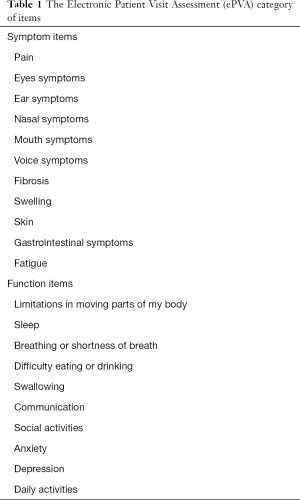

To identify questionnaire items for the ePVA, the study team reviewed the 112 instruments extracted during the literature review to identify key symptoms and functional status items in head and neck cancer. Study team members independently coded questionnaires to identify items, then met to discuss their findings and resolve differences. From this work, 149 symptom and function status codes were identified, then collapsed into 23 categories. From these 23 categories, nominal questionnaire items using Yes/No responses were developed. The goal of this approach was twofold: (I) to identify the specific symptoms and functional status deficits that patients are experiencing; and (II) to generate a numerical sum of patient-reported symptoms and functional status limitations that is representative of the person’s health status (20,21). With the development of questionnaire items, the next step was to assess content validity of the ePVA.

Phase II: content validity testing

We conducted quantitative and qualitative content validity testing. Quantitative content validity testing refers to the assessment of the “degree to which a scale has an appropriate sample of items to represent the construct of interest” (p. 459) (22). The quantitative content validity test consisted of surveys in which ten expert providers or researchers rated the relevance of each of the ePVA questionnaire items. Expert providers were defined as physicians, nurse practitioners, nurses, physical therapists, and speech therapists who had provided direct care for three or more years for patients with head and neck cancer. Expert researchers were defined as persons who have published qualitative or quantitative research studies about head and neck cancer. These providers and researchers rated the relevance of assessment items from 1 (not relevant) to 4 (extremely relevant). Item-Content Validity Index (I-CVI) scores were calculated as the proportion of experts who rated an item’s relevance as 3 or 4, and were adjusted for chance agreement using a modified kappa score (22-24).
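The I-CVI and its chance-adjusted form can be illustrated with a short calculation. The sketch below assumes the commonly used modified kappa formulation, in which chance agreement is modeled as a binomial probability with each relevant/not-relevant judgment treated as equally likely; the text does not spell out the exact formula, and the ratings shown are hypothetical rather than study data.

```python
from math import comb

def item_cvi(ratings):
    """Proportion of experts rating the item 3 or 4 on the 1-4 relevance scale."""
    agree = sum(1 for r in ratings if r >= 3)
    return agree / len(ratings)

def modified_kappa(ratings):
    """I-CVI adjusted for chance agreement (assumed binomial formulation)."""
    n = len(ratings)
    agree = sum(1 for r in ratings if r >= 3)
    i_cvi = agree / n
    # Probability that exactly `agree` of n experts rate the item relevant by
    # chance, treating each judgment as a fair coin flip.
    p_chance = comb(n, agree) * 0.5 ** n
    return (i_cvi - p_chance) / (1 - p_chance)

# Hypothetical ratings from ten experts for a single item (not study data).
ratings = [4, 4, 3, 4, 3, 3, 4, 2, 4, 3]
print(f"I-CVI = {item_cvi(ratings):.2f}")
print(f"modified kappa = {modified_kappa(ratings):.3f}")
```

With ten raters the chance correction is small, so the modified kappa stays close to the raw I-CVI; the adjustment matters more when the expert panel is smaller.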

To achieve qualitative content validity, we conducted cognitive interviews with 15 expert patients between January 2017 and March 2017 to determine their interpretation of questionnaire items based on their symptom experience. Expert patients were defined as patients 18 years and older with histologically diagnosed head and neck cancer experiencing one or more symptoms or functional status limitations from the tumor and/or the cancer treatment. Participants were recruited during or after completion of chemotherapy, radiation therapy, surgery, or combination of these therapies. Because the literature indicates that gender (25), type of treatment (25,26), and length of time from treatment (27) influence the symptom experience, potential participants were identified by purposive stratified sampling based on gender, type of treatment, and length of time from treatment to capture differing perspectives of the questionnaire items (28). Ultimately, we enrolled 15 participants who were representative of the institution population, where the majority of participants were of the white race (11 of 15), male (9 of 15), and received a range of treatments (immunotherapy alone, chemotherapy alone, surgery alone, radiation therapy or combination of treatments). At the time of the interviews, nine participants were undergoing treatment, while six participants were between 2 months and 5 years from completion of treatment. The interviews consisted of face-to-face semi-structured interview questions with verbal probing to assess participants’ understanding and interpretation of the assessment items of the ePVA. All interviews were recorded, transcribed, and checked for accuracy.

The study team analyzed data from content validity testing using systematic comparison to determine the relevance of each questionnaire item for early detection of symptoms and functional status limitations of patients with head and neck cancer. Questionnaire items with I-CVI modified kappa scores >0.78 (22) or identified as relevant by one or more participants were retained. After this analysis, 21 categories of symptoms and functions remained (see Table 1). In addition, based on recommendations from expert providers, interactive figures were embedded within the ePVA. These figures provided a method for participants to identify the locations of their pain and limited mobility using the touch screen technology. Decisions about questionnaire items were recorded to ensure accurate documentation of the development process. The retained questionnaire items were then entered into a web-based platform, entitled “Touch2Care”. This platform was built using Drupal™ (29), an open source platform for housing questionnaires. After the system was built, the study team explored the system to detect platform usability problems. After the initial review by the study team, we began testing the system with end users—patients with head and neck cancer—to determine the usability and acceptance of the ePVA.

Phase III: usability and acceptance testing using an iterative process

We tested the usability and acceptance of the ePVA using an iterative process between August 2017 and December 2017. Usability evaluation assesses the extent to which a user interface meets Nielsen’s five principles for usability: learnability, efficiency, memorability, errors, and satisfaction (30). The underlying principle of usability is that performance of tasks and procedures should be structured in a logical and consistent manner for human-computer interaction to be effective (31). To evaluate acceptance, the participants answered two short surveys, The Perceived Ease of Use and Usefulness Questionnaire and the Post Study System Usability Questionnaire, to rate their satisfaction with ease of use, usefulness, and usability of the ePVA. The study participants, iterative process, and study procedures are described below.

Study participants

After approval of the study by the Institutional Review Board, we recruited participants from a pool of potential subjects that was representative of the future end users, patients with head and neck cancer. Human subjects were protected by following the guidelines set forth by the Institutional Review Board that approved this study. Potential participants received detailed information about the study, its investigational nature, the required study procedures, alternative treatments, and risks and potential benefits of the study. The Principal Investigator and researchers were available to answer potential participants’ questions.

Ultimately, we enrolled 30 participants, a number sufficient for usability (32), from an academic medical center in northeastern United States. The participants were 18 years and older with head and neck cancer who had various experiences with computers or touch screen technology. Participants were recruited before, during, or after cancer treatment (surgery, chemotherapy, radiation therapy, immunotherapy, or some combination of these treatments). Because the ePVA was in developmental stage, all participants were English speaking. All participants provided written informed consent. After signing the informed consent, participants were asked to complete the ePVA using a touch screen digital device while thinking aloud about problems that they encountered.

Iterative process

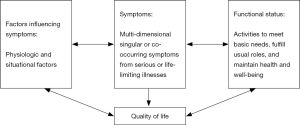

The iterative process consisted of refining the ePVA multiple times based on participants’ comments during “think aloud” sessions and narrative responses (see Figure 2). The “think aloud” method generates direct data representative of the ongoing thought processes of participants as they complete the ePVA (31,33). It is thought that the “think aloud” method ultimately results in fewer rounds of revision of the ePVA program compared to other usability testing approaches, while achieving an efficient and acceptable end user experience (31).

Participant comments were reviewed after each interview, and changes were made to the ePVA before conducting additional interviews. A variety of refinements were made—for example, during the initial think aloud sessions, participants commented on the need to clarify the wording of question items. Participants also asked for specification of the time period for reporting of symptoms. Because research supports that a one week recall period provides a sufficiently accurate representation of daily events (34), the words “in the past 7 days” were placed before each question stem. During this period, software “bugs” were identified and resolved, such as ensuring that all screens began at the top of the page. With this correction, users learned to consistently scroll down to answer each questionnaire item on the page. To tailor the questionnaire to the individual symptom experience, conditional questions were added to the survey. For example, if participants reported shortness of breath, then they would be asked additional questions to numerically rate their current breathing status and to assess whether their breathing was better, worse, or about the same over the past 7 days (35). Those participants who did not report shortness of breath would not see these additional questions. The iterative refinements also improved the participants’ ability to navigate the touch screen technology. For instance, we found that participants undergoing chemotherapy had difficulty with touch sensation. Therefore, the touch screen’s sensitivity was enhanced. Participants would also commit errors such as attempting to navigate to the next screen before completing all required questions. Thus, the program was changed to mandate that all questions were answered before participants could navigate to the next screen. Customized error messages were then implemented to inform participants of any errors. 
The goal of these refinements was to improve the ePVA’s information quality, system usability, precision assessment, and system interface to provide a satisfactory experience for the end users.
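The conditional questions and the required-answer rule described above can be sketched in a few lines. This is only an illustration of the logic, not the Touch2Care implementation: the item identifiers, the `FOLLOW_UPS` mapping, and both function names are hypothetical.

```python
# Hypothetical item identifiers; the actual ePVA item IDs are not given in the text.
FOLLOW_UPS = {
    "shortness_of_breath": [
        "breathing_rating_now",
        "breathing_change_past_7_days",
    ],
}

def questions_to_show(page_items, answers):
    """Return the page's items plus follow-ups for every item answered 'yes'."""
    shown = []
    for item in page_items:
        shown.append(item)
        if answers.get(item) == "yes":
            shown.extend(FOLLOW_UPS.get(item, []))
    return shown

def missing_answers(page_items, answers):
    """List displayed items that still lack an answer.

    An empty list means the participant may move to the next screen.
    """
    return [q for q in questions_to_show(page_items, answers) if q not in answers]

page = ["shortness_of_breath", "fatigue"]
answers = {"shortness_of_breath": "yes", "fatigue": "no"}
unanswered = missing_answers(page, answers)
if unanswered:
    print("Please answer before continuing:", ", ".join(unanswered))
```

A participant who answers "no" to shortness of breath never sees the two follow-up items, so they are not counted as missing.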

Study procedures

After finishing the ePVA, participants completed a heuristic evaluation checklist to rate the usability of the system (no usability problem, minor usability problem, major usability problem, and usability catastrophe). The participants also answered two short surveys—The Perceived Ease of Use and Usefulness Questionnaire, and the Post Study System Usability Questionnaire—to rate their satisfaction with ease of use, usefulness, and usability of the ePVA. Finally, the participants underwent short narrative interviews, answering questions such as “What do you like about the ePVA?” and “How can we improve the system?”. All patient interviews were audio recorded, transcribed, and checked for accuracy.

Phase IV: reliability and validity testing

Using data from the usability study, internal consistency of the ePVA and its subscales was assessed using the Kuder and Richardson Formula 20 reliability measure, an adaptation of Cronbach’s alpha for nominal data (36). The target goal was an internal consistency score >0.70. The convergent validity of the ePVA and its subscales was determined using correlation analysis with the European Organization for Research and Treatment of Cancer (EORTC) QLQ-C30 global QoL/health scale data that were collected as part of the usability study. The hypothesis for convergent validity was that the sum of patient-reported symptoms and functional status limitations collected with the ePVA would be negatively correlated with the EORTC QLQ-C30 global QoL/health scale score.
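For readers unfamiliar with the Kuder and Richardson Formula 20, the sketch below computes it for dichotomous (Yes = 1, No = 0) responses as KR-20 = k/(k−1) × (1 − Σ p·q / σ²), where k is the number of items, p is the proportion answering Yes to an item, q = 1 − p, and σ² is the variance of respondents’ total scores. The use of the population variance and the response matrix are assumptions for illustration; the data are not from the study.

```python
from statistics import pvariance

def kr20(responses):
    """KR-20 for a list of respondents, each a list of 0/1 item scores."""
    n_resp = len(responses)
    n_items = len(responses[0])
    # Proportion of respondents answering "yes" (1) to each item.
    p = [sum(row[j] for row in responses) / n_resp for j in range(n_items)]
    item_var_sum = sum(pj * (1 - pj) for pj in p)
    # Population variance of the total (sum) scores; an assumed convention.
    total_var = pvariance([sum(row) for row in responses])
    return (n_items / (n_items - 1)) * (1 - item_var_sum / total_var)

# Hypothetical Yes/No responses: five respondents, four items (not study data).
responses = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
]
score = kr20(responses)
print(f"KR-20 = {score:.2f}; meets >0.70 target: {score > 0.70}")
```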

Evaluation instruments

Demographic information

Each participant completed a short questionnaire regarding their demographic information, English proficiency, and computer and internet experience and use.

The Perceived Ease of Use and Usefulness Questionnaire

This instrument was used to evaluate the participants’ satisfaction with the ease of use of the ePVA (32,37,38). This questionnaire is an eight-item scale that evaluates users’ acceptance of a new information system. The theoretical basis for the use of this questionnaire for this study was that perceived ease of use and usefulness are two key determinants of whether individuals will adopt new technology. The questionnaire consisted of a Likert scale ranging from 1 (strongly agree) to 5 (strongly disagree). This questionnaire has demonstrated reliability and validity in evaluation of perceived usefulness and ease of use of information technology (37). The questionnaire has been successfully used to evaluate the usability of a patient-centered, web-and-mobile-based educational and behavioral health IT system focusing on self-care strategies for lymphedema symptom management (32). For this study, a modified version of the questionnaire was used to evaluate the ePVA. The Cronbach’s alpha for this current study’s modified version was 0.82, demonstrating acceptable reliability.

The Post Study System Usability Questionnaire

This instrument was used to assess participants’ satisfaction. The questionnaire is an 11-item scale developed at IBM to subjectively measure the user’s satisfaction with a technology system. Responses are given on a Likert scale ranging from 1 (strongly agree) to 7 (strongly disagree) (39). The questionnaire has demonstrated reliability and validity to assess user satisfaction with IBM technology (39). The questionnaire has been successfully used to assess user satisfaction with a web-and-mobile-based educational and behavioral health IT system focusing on self-care strategies for lymphedema symptom management (32). For this current study, we used a modified 11-item scale to assess three concepts of participants’ satisfaction with the ePVA: system usefulness, interface quality, and information quality. The Cronbach’s alpha for this adapted scale in the current study was 0.79, demonstrating acceptable reliability.

European Organization for Research and Treatment of Cancer (EORTC) Quality of Life Questionnaire (QLQ) general (C30) questionnaire v3.0 (40)

The EORTC QLQ-C30 is a 30-item questionnaire consisting of questions regarding symptoms, function, perceived financial difficulties, general health, and global quality of life. For this study, quality of life was measured using the global QoL/health scale of the EORTC QLQ-C30. This subscale consists of two items that ask patients to rate their overall health and overall quality of life on a scale from 1 (very poor) to 7 (excellent). These scores are transformed linearly to range from 0 to 100, with higher scores representing higher quality of life. In a study of patients with non-resectable lung cancer, the global QoL/health scale has demonstrated good internal reliability (Cronbach’s alpha =0.86–0.89) and clinical validity by discriminating between patients with good and poor performance status and weight loss (40).
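The linear transformation of the two global items can be sketched as follows. The calculation assumes the usual approach of averaging the two item ratings into a raw score and rescaling it by the item range (7 − 1 = 6); the text states only that scores are transformed linearly to 0 to 100, and the function name is hypothetical.

```python
def global_qol_score(overall_health, overall_qol):
    """Transform the two 1-7 global items to a 0-100 scale (higher = better)."""
    for item in (overall_health, overall_qol):
        if not 1 <= item <= 7:
            raise ValueError("items are rated from 1 (very poor) to 7 (excellent)")
    raw = (overall_health + overall_qol) / 2  # raw score: mean of the two items
    item_range = 7 - 1                        # highest minus lowest possible rating
    return (raw - 1) / item_range * 100

# Hypothetical ratings of 5 (overall health) and 6 (overall quality of life).
print(global_qol_score(5, 6))
```

Under this assumption, ratings of 1 and 1 map to 0, and ratings of 7 and 7 map to 100.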

Data analysis

Descriptive statistics, consisting of means and frequencies, were calculated using SAS® 9.4. Analysis of participant interviews was conducted using a directed content analysis approach, where initial coding categories were based on key concepts from previous research categories (41). Throughout all phases of coding, two study team members worked independently to evaluate each transcript. Group meetings were held to discuss findings and resolve differences. Reliability and validity during the qualitative analysis were ensured through group coding of themes, recording of meeting minutes, and memos of decisions.

Results

Participant characteristics

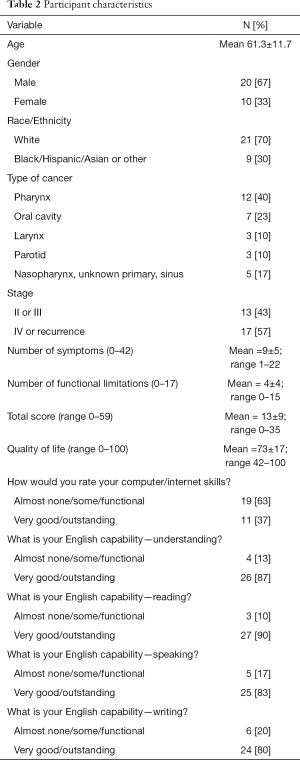

Thirty participants completed the ePVA and evaluation questionnaires (see Table 2). The participants who completed the ePVA were representative of the population of the study institution and of those diagnosed with head and neck cancer. The mean age was 61.3±11.7 years. Participants were primarily male (67%) and white (70%), had post-high school education (81%), and were diagnosed with oral cavity or pharyngeal cancer (63%). Most participants were diagnosed with stage IV or had recurrent cancer (57%). A little over half (53%) were undergoing chemotherapy, radiation therapy, or a combination of treatments at the time they completed the ePVA. The majority of the study participants reported almost none, some, or functional computer skills (63%, 19 of 30).

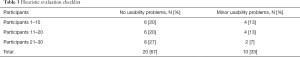

Heuristic evaluation checklist

Among the 30 participants, 10 (33%) reported minor usability problems (see Table 3). No participants reported major usability problems or usability catastrophes. The heuristic evaluation also demonstrated that the iterative process of refinement of the ePVA resulted in fewer participants reporting usability problems over the course of the study. By the last three participants, no usability problems were reported.

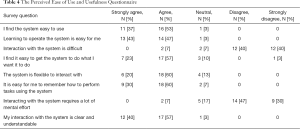

End-user acceptance evaluations

Participant responses to The Perceived Ease of Use and Usefulness Questionnaire (see Table 4) demonstrated that 90% of participants strongly agreed or agreed that the ePVA was easy to learn and easy to use (strongly agree: 37%, 11 of 30; agree: 53%, 16 of 30). Further, 97% of participants strongly agreed or agreed that their interaction with the system was clear (strongly agree: 40%, 12 of 30; agree: 57%, 17 of 30).

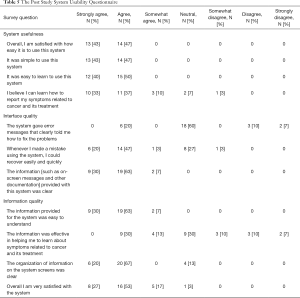

In response to the Post Study System Usability Questionnaire (see Table 5), 90% of participants agreed or strongly agreed that they were satisfied with how easy it was to use the system (strongly agree: 43%, 13 of 30; agree: 47%, 14 of 30). In addition, the participants identified that the ePVA could be used as a method to report their symptoms, where 70% of participants strongly agreed or agreed that they could learn how to use the ePVA to report their symptoms (strongly agree: 33%, 10 of 30; agree: 37%, 11 of 30).

Qualitative findings

Three main themes emerged from the qualitative data analysis. Reflecting the responses to the evaluation questionnaires, the three themes were the simplicity of the ePVA, the ease of use of the ePVA, and the potential use of the ePVA for patient advocacy for their needs (see Table 6 for representative quotes).

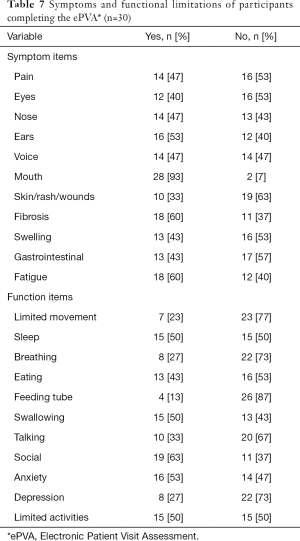

Symptoms and functional limitations

The analysis of the data generated by the ePVA found that the ePVA could capture symptoms and functional status limitations that are common in head and neck cancer (see Table 7). The most prevalent symptoms reported by participants were mouth symptoms (93%), fibrosis (60%), and fatigue (60%). The most prevalent functional status items reported by participants were anxiety (53%), difficulty sleeping (50%), swallowing (50%), and limited activities of daily living (50%).

Reliability and validity testing

The symptom and functional status subscales of the ePVA demonstrated good reliability with internal consistency measurement scores of 0.82 and 0.85, respectively. As hypothesized, the analyses found that the number of patient-reported symptoms and functional status limitations collected with the ePVA was significantly and negatively correlated with the EORTC QLQ-C30 global QoL/health scale (r=−0.55038, P<0.01), supporting the convergent validity of the ePVA (see Table 8).
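To make the direction of this correlation concrete, the sketch below computes a correlation coefficient between symptom counts and global QoL scores. It assumes Pearson’s product-moment correlation, which the text does not specify (it says only “correlation analysis”), and every value shown is invented for illustration rather than taken from the study.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)

# Hypothetical data: count of "yes" responses per participant and the
# corresponding 0-100 global QoL/health score (not study data).
symptom_counts = [2, 5, 9, 12, 15, 20]
qol_scores = [83.3, 75.0, 66.7, 50.0, 41.7, 33.3]

r = pearson_r(symptom_counts, qol_scores)
print(f"r = {r:.2f}")  # a negative r means more symptoms go with lower QoL
```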

Discussion

Patients with head and neck cancer suffer distressing symptoms that lead to poor quality of life. The findings from this study support that the ePVA is a reliable, valid measure that study participants found simple and easy to use. Further, from both surveys and interviews, participants underscored that the ePVA could serve as a useful tool to report their symptoms and functional status from head and neck cancer and its treatment.

Using the ePVA as a tool for patients to report symptoms and functional status holds great potential for early detection of and intervention for symptoms, which may not only prevent interruption or premature termination of treatment but also improve quality of life for patients with head and neck cancer. Because of the improved survival rate in the head and neck cancer population, a growing number of persons with head and neck cancer are at risk of living a lifetime with symptoms and functional limitations from the cancer and its treatment. These symptoms and functional limitations can cause disability, decrease quality of life, and lead to premature death. Yet, because of the site of the cancer, patients with head and neck cancer may encounter greater barriers to communicating their needs than individuals with other types of cancer. Consequently, symptoms and functional limitations may go undetected (42). With the rapid innovations in technology, patients can now complete web-based questionnaires at home or in the office. This information can be sent electronically to providers and used to enhance communication between providers and patients during office visits. In this manner, the ePVA can be used for early detection and intervention for advanced, escalating symptoms to improve quality of life and increase length of survival (42-44).

The simplicity and ease of use of the ePVA make it a useful tool for research in head and neck cancer and mHealth. The ePVA can be used for longitudinal studies of the symptom trajectories experienced by patients with head and neck cancer to detect the critical time points for interventions to prevent chronic advanced symptoms and long-term disability. Further, the ePVA can be used to determine the impact of symptom trajectories on function, treatment interruptions, and health resource use in patients with head and neck cancer. For mHealth studies, the ePVA can be used to evaluate how best to integrate web-based patient-reported outcomes into electronic health records (45). Further, the ePVA can be used in studies that determine patients’ preferences for how and when to share their data from web-based applications.

Strengths and limitations

This study contributes to the mHealth literature by demonstrating the successful implementation of usability and acceptance testing of the ePVA that engages patients and healthcare providers in the process of developing and testing mHealth technology. The other strengths of the study include its strong theoretical base and evidence from a rigorous comprehensive literature review. Finally, the use of an iterative process to refine a web-based patient-reported symptom and function assessment is a great strength. We believe that this iterative refinement during the usability testing provided a more efficient process in the development of the ePVA, ultimately requiring fewer patient interviews to produce an acceptable experience for patients with head and neck cancer. In addition, this approach enabled us to incorporate the patients’ needs and desires into the design of the ePVA, which will facilitate adoption of the ePVA for clinical use.

This study also has limitations. Although the study population does include some patients from the public hospital associated with the institution, this study should be considered a single-site study. Further, the majority of the study population consisted of an educated population with functional or good computer skills. Thus, the generalizability of the study findings may be limited.

Conclusions

Results from the phases of developing and testing the ePVA demonstrate that the ePVA is easy to use, is accepted by patients, and has acceptable reliability and convergent validity. Future research should use data generated by the ePVA to determine the impact of symptom trajectories on functional status, treatment interruptions and terminations, and health resource use in head and neck cancer. Future research using the ePVA should also identify longitudinal trajectories of symptoms in head and neck cancer and determine the impact of symptom trajectories on quality of life in patients with head and neck cancer.

Acknowledgments

Funding: This project was supported in part by the NYU Research Challenge Fund Program, the Hartford Change AGEnts Initiative (supported by a grant to the Gerontological Society of America from the John A. Hartford Foundation), The Palliative Care Research Cooperative Group funded by the National Institute of Nursing Research (U24NR014637), the NYU Meyers Rudin Pilot Project Award, and the NYU Goddard Award.

Footnote

Conflicts of Interest: The authors have no conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

References

- Siegel RL, Miller KD, Jemal A. Cancer statistics, 2019. CA Cancer J Clin 2019;69:7-34.

- National Comprehensive Cancer Network (NCCN). Head and Neck Cancers Version 1.2019. 2019.

- National Cancer Institute. Surveillance, epidemiology, and end results program. 2019. Available online: https://seer.cancer.gov/statfacts/html/oralcav.html

- Rosenthal DI, Mendoza TR, Fuller CD, et al. Patterns of symptom burden during radiotherapy or concurrent chemoradiotherapy for head and neck cancer: a prospective analysis using the University of Texas MD Anderson Cancer Center Symptom Inventory-Head and Neck Module. Cancer 2014;120:1975-84.

- Mason H, DeRubeis MB, Foster JC, et al. Outcomes evaluation of a weekly nurse practitioner-managed symptom management clinic for patients with head and neck cancer treated with chemoradiotherapy. Oncology Nursing Forum 2013;40:581-6.

- Murphy BA, Deng J. Advances in Supportive Care for Late Effects of Head and Neck Cancer. J Clin Oncol 2015;33:3314-21.

- Lenz ER, Pugh LC, Milligan RA, et al. The middle-range theory of unpleasant symptoms: An update. ANS Adv Nurs Sci 1997;19:14-27.

- Cleeland CS, Bennett GJ, Dantzer R, et al. Are the symptoms of cancer and cancer treatment due to a shared biologic mechanism? A cytokine-immunologic model of cancer symptoms. Cancer 2003;97:2919-25.

- Feinstein AR. Symptoms as an index of biological behaviour and prognosis in human cancer. Nature 1966;209:241-5.

- Fu MR, McDaniel RW, Rhodes VA. Measuring symptom occurrence and symptom distress: Development of the symptom experience index. J Adv Nurs 2007;59:623-34.

- Pugliano FA, Piccirillo JF, Zequeira MA, et al. Symptoms as an index of biologic behavior in head and neck cancer. Otolaryngology-Head and Neck Surgery 1999;120:380-6.

- Leidy NK. Functional status and the forward progress of merry-go-rounds: Toward a coherent analytical framework. Nurs Res 1994;43:196-202.

- Cleeland CS, Sloan JA. ASCPRO Organizing Group. Assessing the Symptoms of Cancer Using Patient-Reported Outcomes (ASCPRO): Searching for standards. J Pain Symptom Manage 2010;39:1077-85.

- Bjordal K, Hammerlid E, Ahlner-Elmqvist M, et al. Quality of life in head and neck cancer patients: Validation of the European Organization for Research and Treatment of Cancer Quality of Life Questionnaire-H&N35. J Clin Oncol 1999;17:1008-19.

- List MA, D’Antonio LL, Cella DF, et al. The Performance Status Scale for head and neck cancer patients and the Functional Assessment of Cancer Therapy - Head and Neck Scale. Cancer 1996;77:2294-301.

- Cella D, Riley W, Stone A, et al. The Patient-Reported Outcomes Measurement Information System (PROMIS) developed and tested its first wave of adult self-reported health outcome item banks: 2005-2008. J Clin Epidemiol 2010;63:1179-94.

- Bennett AV, Dueck AC, Mitchell SA, et al. Mode equivalence and acceptability of tablet computer-, interactive voice response system-, and paper-based administration of the U.S. National Cancer Institute's Patient-Reported Outcomes version of the Common Terminology Criteria for Adverse Events (PRO-CTCAE). Health Qual Life Outcomes 2016;14:24.

- National Cancer Institute. Division of Cancer Control & Population Sciences. Patient-reported outcomes version of the Common Terminology Criteria for Adverse Events (PRO-CTCAE™). 2019. Available online: https://healthcaredelivery.cancer.gov/pro-ctcae/, accessed June 8 2019.

- Northwestern University. HealthMeasures: PROMIS. 2019. Available online: http://www.healthmeasures.net/index.php?option=com_content&view=category&layout=blog&id=147&Itemid=806, accessed June 8 2019.

- Fu MR, Axelrod D, Cleland CM, et al. Symptom report in detecting breast cancer-related lymphedema. Breast Cancer (Dove Med Press) 2015;7:345-52.

- Van Cleave JH, Egleston BL, McCorkle R. Factors affecting recovery of functional status in older adults after cancer surgery. J Am Geriatr Soc 2011;59:34-43.

- Polit DF, Beck CT, Owen SV. Is the CVI an acceptable indicator of content validity? Appraisal and recommendations. Res Nurs Health 2007;30:459-67.

- Lynn MR. Determination and quantification of content validity. Nurs Res 1986;35:382-5.

- Polit DF, Beck CT. The content validity index: Are you sure you know what's being reported? Critique and recommendations. Res Nurs Health 2006;29:489-97.

- Xiao C, Hanlon A, Zhang Q, et al. Risk factors for clinician-reported symptom clusters in patients with advanced head and neck cancer in a phase 3 randomized clinical trial: RTOG 0129. Cancer 2014;120:848-54.

- Eickmeyer SM, Walczak CK, Myers KB, et al. Quality of life, shoulder range of motion, and spinal accessory nerve status in 5-year survivors of head and neck cancer. PM R 2014;6:1073-80.

- Murphy BA, Dietrich MS, Wells N, et al. Reliability and validity of the Vanderbilt Head and Neck Symptom Survey: A tool to assess symptom burden in patients treated with chemoradiation. Head Neck 2010;32:26-37.

- Sandelowski M. Sample size in qualitative research. Res Nurs Health 1995;18:179-83.

- The Drupal Association. Drupal™. 2019. Available online: https://www.drupal.org

- Nielsen Norman Group. Usability 101: Introduction to usability. Available online: https://www.nngroup.com/articles/usability-101-introduction-to-usability/, accessed June 8 2019.

- Jaspers MW, Steen T, van den Bos C, et al. The think aloud method: A guide to user interface design. Int J Med Inform 2004;73:781-95.

- Fu MR, Axelrod D, Guth AA, et al. Usability and feasibility of health IT interventions to enhance Self-Care for Lymphedema Symptom Management in breast cancer survivors. Internet Interv 2016;5:56-64.

- Fonteyn ME, Grobe SJ. Expert nurses’ clinical reasoning under uncertainty: Representation, structure, and process. Proc Annu Symp Comput Appl Med Care 1992;1992:405-9.

- Mendoza TR, Dueck AC, Bennett AV, et al. Evaluation of different recall periods for the US National Cancer Institute's PRO-CTCAE. Clin Trials 2017;14:255-63.

- Baker K, Barsamian J, Leone D, et al. Routine dyspnea assessment on unit admission. Am J Nurs 2013;113:42-9.

- Kuder GF, Richardson MW. The theory of the estimation of test reliability. Psychometrika 1937;2:151-60.

- Davis FD. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quarterly 1989;13:319-40.

- Davis FD, Bagozzi RP, Warshaw PR. User acceptance of computer technology: A comparison of two theoretical models. Management Science 1989;35:982-1003.

- Lewis JR. IBM computer usability satisfaction questionnaires: Psychometric evaluation and instructions for use. International Journal of Human-Computer Interaction 1995;7:57-78.

- Aaronson NK, Ahmedzai S, Bergman B, et al. The European Organization for Research and Treatment of Cancer QLQ-C30: A quality-of-life instrument for use in international clinical trials in oncology. J Natl Cancer Inst 1993;85:365-76.

- Hsieh HF, Shannon SE. Three approaches to qualitative content analysis. Qual Health Res 2005;15:1277-88.

- Falchook AD, Green R, Knowles ME, et al. Comparison of patient- and practitioner-reported toxic effects associated with chemoradiotherapy for head and neck cancer. JAMA Otolaryngol Head Neck Surg 2016;142:517-23.

- Basch E, Deal AM, Dueck AC, et al. Overall survival results of a trial assessing patient-reported outcomes for symptom monitoring during routine cancer treatment. JAMA 2017;318:197-8.

- Basch E, Deal AM, Kris MG, et al. Symptom monitoring with patient-reported outcomes during routine cancer treatment: A randomized controlled trial. J Clin Oncol 2016;34:557-65.

- Parikh RB, Schwartz JS, Navathe AS. Beyond genes and molecules - A precision delivery initiative for precision medicine. N Engl J Med 2017;376:1609-12.

Cite this article as: Van Cleave JH, Fu MR, Bennett AV, Persky MS, Li Z, Jacobson A, Hu KS, Most A, Concert C, Kamberi M, Mojica J, Peyser A, Riccobene A, Tran A, Persky MJ, Savitski J, Liang E, Egleston BL. The development, usability, and reliability of the Electronic Patient Visit Assessment (ePVA) for head and neck cancer. mHealth 2019;5:21.