Perceptions on using interactive voice response surveys for non-communicable disease risk factors in Uganda: a qualitative exploration

Introduction

Non-communicable diseases (NCDs) are increasing worldwide and their prevention and control need to be scaled up (1). In 2016, the highest risk of dying from NCDs was in sub-Saharan Africa (2).

According to WHO, NCDs accounted for 33% of all deaths in Uganda in 2016 (3). Uganda has prioritized establishing functional surveillance to support NCD prevention and control as one of the key intervention areas in the Health Sector Development Plan 2015/16–2019/20 (4).

WHO recommends assessing NCD risk factors through surveys that use the Stepwise Approach to Surveillance (STEPS) (5). STEPS relies on face-to-face data collection: enumerators visit respondents, administer a questionnaire, take physical measurements and collect blood samples to test parameters such as blood sugar levels. Uganda conducted a STEPS survey in 2014 (6), but because of its high cost, a similar survey would likely not be repeated for about five years. Effective surveillance of NCD risk factors would therefore need a more frequent data collection mechanism to augment STEPS data (7).

High-income countries have used interactive voice response (IVR) to screen for alcohol use and to manage alcoholism (8,9). IVR is an automated system that interacts with respondents, collects their data and directs it to the recipient. With mobile phone subscriptions in Uganda rising from 38 per 100 persons in 2010 to 58 in 2017 (10), mobile phones could be used to conduct more frequent supplementary surveys. Some studies in Uganda have already used mobile phones in patient management and in maternal and newborn care (11,12). However, there is little literature on using IVR to collect information on NCD or other public health risk factors (13). The purpose of this study was therefore to explore perceptions of using an IVR survey to collect information on NCD risk factors in Uganda.

Methods

Study area

The study was conducted between November 2016 and February 2017. Key informant interviews (KIIs) were conducted in Kampala, the capital city. Focus group discussions (FGDs) were conducted in Mukono district, which lies 18 km east of Kampala and has both rural and urban populations. Being near the capital city, Mukono district is well served by mobile phone networks, but its population shares challenges common to other areas of Uganda, such as the lack of an electricity grid in rural areas.

Study population

Key informants were purposively selected from among government officials implementing NCD programs (n=3), researchers who had conducted research on NCDs (n=5), and researchers who had used mobile phones in research (n=3). The investigators generated a list of potential key informants, who were first contacted by phone; research assistants then followed up, and appointments were made for when and where the interviews would be conducted.

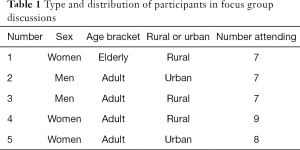

Participants for the FGDs were purposively selected by local council leaders according to the sex and age group required for each FGD. The investigators contacted different local council leaders by phone and asked each to select participants who were not closely related to one another, so that participants could discuss their opinions freely, which would not be possible among close relatives. Each FGD was homogeneous by age group (older adults only or young adults only) and by sex. Men and women were separated to encourage free sharing of information, mitigating the prevailing social and cultural tendency for some participants, especially women, to feel less free to share their views in the presence of men. Only participants aged 18 years and above who were able to provide informed verbal consent attended the FGDs. Table 1 shows the distribution of FGD participants.


Data collection

Four research assistants (two women and two men), all social science graduates with experience in qualitative data collection, were recruited. They were trained for three days, during which they were introduced to the study objectives, went through the data collection tools, did role plays and received refresher training in research ethics. Two (a man and a woman) were assigned to conduct KIIs and the other two conducted FGDs; within each pair, one acted as moderator and the other as note taker. Sessions were also audio recorded after obtaining participants' consent.

All KIIs were conducted face-to-face in English, at places where respondents felt at ease, mostly their offices or elsewhere within their organizations. Seven of the eleven KIIs lasted between 30 and 40 minutes; the shortest took 14 minutes and the longest 69 minutes. KIIs were conducted using a key informant guide approved as part of the protocol. At the close of each interview, the interviewer summarized the emerging issues and agreed on them with the key informant.

FGDs were conducted in both rural and urban areas in Luganda, the local language in Mukono district. At the start of each FGD, a short face-to-face questionnaire was used to collect participants' demographic information, such as age and sex. FGDs were held at a public place, such as a church or mosque, or at the compound of one of the members, whichever was convenient to all the participants. FGDs lasted 70–115 minutes and were conducted using guides approved as part of the protocol. At the end, the moderator and note taker summarized what they had noted to the FGD participants and sought clarification where necessary.

Data management

KIIs were transcribed verbatim from the audio recordings, supplemented by the note takers' notes so as to retain details and the interviewers' observations. FGDs were also transcribed verbatim in Luganda and later translated into English; note takers' notes and observations were likewise used in recording FGD data. Investigators conversant with the local language coded the texts and cross-checked them against the Luganda transcripts to ensure that the translations captured the participants' meaning.

Data analysis

The transcribed text was read by two researchers, who identified meaningful units. After discussion, codes were generated and later merged into categories (themes) using content analysis (14). The codes and categories were entered into QDA Miner 2.0.6 (Provalis Research, Montreal, Quebec, Canada), a qualitative data analysis software package, and used to analyse all the KIIs and FGDs; the coded data were exported to an Excel spreadsheet. Findings were extracted from the different categories and are presented with representative quotes.

Ethical considerations

The study was approved by the Johns Hopkins Bloomberg School of Public Health Institutional Review Board (USA), the Makerere University Higher Degrees Research and Ethics Committee and the Uganda National Council for Science and Technology (Ref IS 128), and conforms to the provisions of the Declaration of Helsinki (as revised in 2013). Participants were informed of the study objectives and possible risks, gave informed consent and were free to withdraw it at any time. Data were anonymised. The authors are accountable for all aspects of the work.

Results

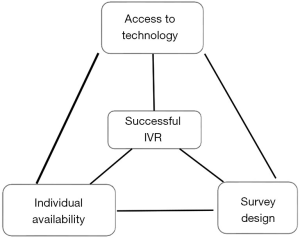

Three categories were identified: perceptions of individual capacity and availability, challenges with access to technology, and the design and implementation of the survey itself (Figure 1). These three categories (themes) form a triad: all three need to function well for an IVR survey to succeed.

Individual capacity and availability

Age

Respondents indicated that age influenced use of IVR. However, there was disagreement on whether being young would make one better able to take an IVR survey or not. Some FGD participants thought that teenagers may not be interested in taking an IVR survey on health.

- “If it is about health then the teenagers may not be interested about their health. But one who is twenty-five years old onwards would like to know about her health. She may wish to know how to take care of herself.” FGD rural woman.

Researchers thought that IVR would appeal to young people because it would preserve their privacy and because young people are likely to be knowledgeable about e-health and technology.

- “I think for young people and adolescents, this is very appealing because they spend a lot of time on phone and want to be in private. They may be uncomfortable with the face-to-face interview.” KII mobile phone researcher.

Phone literacy

Some people would be unable to participate in the IVR despite the availability of phones and networks because of lack of experience or literacy in digital technology.

- “There are people who don't know how to write and send a message. They do not understand what is written on buttons. They only know which button to press when receiving a call and when they want to call someone, they have to get another person to search for the number of the person they want to call from their phone books” FGD men urban.

Time of call

The timing of IVR calls was considered critical, because it is difficult for people to respond while they are working.

- “If you are going to send IVR during office time, or working time, people may get interrupted.” KII mobile phone researcher.

- “When a person is not busy, he can accord you enough time like thirty minutes. However when you call when he is busy, he will give you less than thirty minutes.” FGD men urban.

Some people may be called while doing manual work, and pressing the phone's buttons with soiled hands would be cumbersome.

- “If the man is digging and has soil on his hands and he is being told that; ‘choose from this, respond like this. If you choose this, press number one, if you choose that, press number two’, that person may not be able to do it because he is in the garden digging” FGD men rural.

Being averse to numbers not on one’s phone

Some FGD participants noted that some people respond only to numbers saved in their phones. Such people would hesitate to answer numbers they did not know and, if they did answer, would ask many questions.

- “Some people have phones but the contacts they respond to quickly are the saved contacts in their phones. If you call and he doesn't have that contact phone, you take some time explaining yourself. Listening to a voice from a number that does not show in one’s phone contacts may be difficult for such people” FGD men rural.

- “For a person to listen to a voice which is not common or a person one doesn't know, I assure she will just switch off.” FGD women elderly rural.

Respondents differed on whether they would return missed calls from unknown numbers. Some people were keen to return such calls, whereas others would not.

- “When I find a missed call and have some money, I buy airtime. I would like to know why that person took responsibility to call me. … I do not know that number but I try to find out why I was called.” FGD women elderly rural.

- “Now if you send pre-recorded voices, I would like to ask my colleagues here how do you receive it? Because personally if that voice comes I don't give it attention. … I first say, ‘Hello’, I hear someone talking… I just hang up because this person is wasting my time and I go ahead doing what I would be doing”. FGD men rural.

Gender issues

Participants in the FGDs, especially the women's groups, indicated that IVR calls could cause misunderstandings between partners. Some husbands may want to know who is calling their wives at night, and in some cases this could lead to domestic violence. Some researchers corroborated this concern.

- “A call at night could instigate beatings. If the voice is of a man, then it is too much.” FGD women urban.

- “I think you are aware of the situation where a lady gets a message and the husband wants to know where it comes from. It may be a risk for domestic violence.” KII mobile phone researcher.

Special needs

Researchers highlighted that IVR would exclude certain people with disabilities, such as those who are deaf or blind.

- “The other challenge is the marginalized populations. Some may not access these platforms, such as those who are deaf or blind.” KII mobile phones researcher.

Access to technology

Phone possession

Most respondents highlighted the challenges of not owning a mobile phone, being out of network coverage or having a flat phone battery. Poor network coverage would affect the rural population more than the urban population.

- “Technology is good and can make it easy for you to get to people but you should know that most people in the village don’t have phones. The network is poor. That method will work in towns.” FGD women urban.

- “Some people cannot afford buying phones so if you want to deal with youth, they are going to miss out because of lacking phones.” KII mobile phone researcher.

- “If you are going to do a survey, there may be very old people or very young ones who don’t own their own mobile phones.” KII NCD policy maker.

Reliability of electricity

Some areas are not connected to the electricity grid. People who depend on solar power may be unable to charge their phones when the solar power runs down on a rainy day, or when they cannot take their phones elsewhere to be charged.

- “For us in this area, we use solar (power). If it rains, your phone can't charge the whole day, so it's better you call the following day.” FGD women elderly rural.

Network coverage

Respondents indicated that mobile network coverage is not universal in Uganda. Some people live in places with poor network coverage. Even those who live in places with good coverage sometimes go to places with poor coverage and if called at those times, they may not be able to respond.

- “We have issues concerning unreliable networks. How do you take that into consideration especially where you move to rural settings where you find that people have to climb trees looking for network?” KII NCD researcher.

The IVR system itself

Awareness of IVR

Because IVR is a relatively new technology, respondents felt that the community may not be fully aware of what an IVR survey is. All FGDs and KIIs stressed the need to sensitize communities before the IVR survey is rolled out, so that people receiving calls are aware of the program.

- “If someone knows about it (IVR), he takes it seriously but if he doesn't, he takes it as another message he doesn't know about. If sensitisations are conducted and a person gets questions on the phone, he takes time to listen to them.” FGD women urban.

- “You need to sensitize people about these surveys before you actually start so that participants are sure that they are not talking to fraudsters and they know the importance of giving this information.” KII mobile phone researcher.

- “…they will have all sorts of funny misconceptions saying, ‘Are people trying to spy on us?’ You have to really prepare the population that there is going to be this kind of survey, we are going to use this kind of device”. KII NCD researcher.

Reducing costs of conducting research

Researchers indicated that IVR could be less costly than traditional surveys because it may eliminate some logistical expenses. It would also make it possible to interview people who are difficult to reach physically.

- “If you are using interactive voice response, you may sample more people at a reduced cost. This could improve the accuracy of the study if you get a bigger sample size. You would even be able to interview people in hard to reach areas where a vehicle couldn’t reach.” KII NCD policy maker.

Incentives

Views on incentives diverged. Some community members felt that incentives should be built into the IVR program because people taking the calls would lose time for other productive work.

- “Participants should get incentives because one could be going for work expecting some payment and is called back. Others could be in their gardens cultivating food! So I would suggest that let the participants be compensated for that time.” FGD women elderly rural.

Others opposed any incentive, arguing that respondents would also benefit from the information conveyed by the questions and that giving information should be voluntary.

- “I think there is no need for it (incentives) because you benefit from the information asked and you learn.” FGD women rural.

Others felt that incentives could be given but should neither be promised at the beginning of the interview nor be presented as a condition for completing it. NCD policy makers shared this view.

- “After responding to the survey, one could receive the incentive without being told prior that he was to receive the incentive. He shouldn’t think that he should respond to the survey so that he is given an incentive.” FGD men rural.

- “It makes sense to refund may be someone’s transport if they were called from somewhere or if they were found doing something and say well we are paying for their time but it shouldn’t be that because you are being interviewed you should be given an incentive.” KII NCD policy maker.

In addition to airtime credit or electronic cash transfers (known in Uganda as 'mobile money'), some participants suggested that incentives could be in kind, e.g., advice on how to live a healthy life or a voucher for faster service at a health facility.

- “I would think of airtime some of them would prefer mobile money. Some of them would benefit if in a survey you would say like maybe if you needed information on such and such, if you have a question regarding this disease there is a call back line which is free of charge where you will easily get the information you need without having to incur the cost.” KII NCD policy maker.

Some participants added that if IVR respondents were to receive incentives, then everybody should receive them.

- “If they are to give incentives, then everyone should be given. It shouldn’t be a few and others are forgotten. It should be general.” FGD women urban.

Monetary incentives were seen as risking biased responses and as potentially cutting into research budgets.

- “I don’t agree with the word incentive, it’s not accepted because you are going to get biases, you are going to get answers according to whether you give an incentive or not. But if you are talking of reimbursing someone’s time spent taking the interview, that’s a different matter.” KII NCD researcher.

Timing

Most respondents suggested that IVR calls be made several times during the day to reach a larger share of people, because not everyone has access to their own mobile phone at all times.

- “They should send messages three times. Let’s say some messages should be sent in the morning, some during the afternoon hours and some in the evening. This means that those who missed in the morning because the phone was somewhere being charged, will receive in the afternoon. Those who missed in the afternoon because they were having lunch or they were travelling will receive at seven o’clock, eight o’clock because they are now settled.” FGD men rural.

Some suggested that IVR calls be made at specific times communicated to respondents in advance, so that people would be ready to take the survey when the call comes.

- “You the callers should give us specific time like from 8:00 o’clock up to 11:00pm. The most important thing is having specific time that from such a time up to such a time there is a call.” FGD women rural.
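The multiple-attempt strategy that FGD participants proposed (morning, afternoon and evening calls, so that someone who missed one call catches a later one) can be expressed as a simple call schedule. The sketch below is a hypothetical illustration only: the window times (09:00, 14:00, 19:00), the function names and the availability rule are assumptions, not parameters used or tested in this study.

```python
from datetime import date, datetime, time, timedelta
from typing import Callable, List, Optional

# Hypothetical call windows reflecting the FGD suggestion of morning,
# afternoon and evening attempts; the exact hours are assumptions.
CALL_WINDOWS = [time(9, 0), time(14, 0), time(19, 0)]


def schedule_attempts(start: date, days: int = 1,
                      windows: List[time] = CALL_WINDOWS) -> List[datetime]:
    """Return one call attempt per window for each survey day, in time order."""
    attempts = []
    for offset in range(days):
        day = start + timedelta(days=offset)
        attempts.extend(datetime.combine(day, w) for w in windows)
    return attempts


def first_answered(attempts: List[datetime],
                   is_available: Callable[[datetime], bool]) -> Optional[datetime]:
    """Try attempts in order and return the first one the respondent answers."""
    for attempt in attempts:
        if is_available(attempt):
            return attempt
    return None  # respondent not reached anywhere in the schedule


# Example: a respondent whose phone is charging in the morning and who is
# travelling in the afternoon is reached only by the evening attempt.
attempts = schedule_attempts(date(2017, 1, 10))
reached = first_answered(attempts, lambda dt: dt.hour >= 19)
```

Announcing the windows in advance, as one women's FGD proposed, would simply mean publicising the `CALL_WINDOWS` values during community sensitization.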

Discussion

Perceptions of using IVR surveys to generate estimates of NCD risk factors revolve around individual factors, access to the required technology and the way the survey is conducted. At the individual level, age, phone literacy, occupation, aversion to numbers not saved in one's phone, expected cost, special needs and gender could affect response to an IVR survey. Phone possession, reliable electricity and network coverage may also determine whether a person can respond when called. Regarding the system itself, communities need to be sensitized before the IVR survey, participants need appropriate incentives, and calls should be made when respondents are not engaged in other demanding activities.

Respondents in both FGDs and KIIs indicated that age was a critical factor to consider when sending out IVR surveys. Whereas community members thought that young people may not use the IVR system, researchers were of the view that they would, since they are more familiar with mobile technology and spend a lot of time on their mobile phones. However, the cost of owning a mobile phone was noted to be prohibitive for the very young as well as the very old. In the Uganda National Population and Housing Census 2014, people aged 0–14 years made up 47.9% of the population and those aged 10–24 years 34.8% (15). Any population-based intervention needs to prioritize young people in countries where they constitute the largest proportion of the population. Unlike in Bangladesh, where a higher proportion of young people (below 30 years) owned a phone (16), studies in Uganda have shown lower phone ownership among youth. A study conducted in 2011 found that about 47% of youth living in the slums of Kampala owned and used mobile phones daily (17). A 2009 study in neighbouring Kenya also found lower phone ownership among young people and in rural areas (18). While mobile phone ownership may not be a barrier to conducting public health surveys in high-income countries (19), in low-income countries ownership may not be evenly distributed across age groups. Despite the growth in mobile phone ownership over the years, these age-related differences may take a long time to level out. It may therefore be necessary to keep re-assessing phone ownership among young people before each survey and to ensure that youth are proportionally represented, for example through quota sampling (20).
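To make the quota-sampling idea concrete, the sketch below allocates a target number of completed IVR interviews across age strata in proportion to integer weights, then accepts incoming completes until each stratum's quota is full. It is a minimal, hypothetical illustration: the stratum labels and weights are made up (they are not the census figures above, which include children under 18 who would be ineligible), and the function names are our own. The same approach applies to rural/urban strata.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple


def quota_targets(total: int, weights: Dict[str, int]) -> Dict[str, int]:
    """Split `total` interviews across strata in proportion to integer `weights`,
    using largest-remainder rounding so the quotas sum exactly to `total`."""
    denom = sum(weights.values())
    alloc = {k: total * w // denom for k, w in weights.items()}
    remainder = {k: total * w % denom for k, w in weights.items()}
    leftover = total - sum(alloc.values())
    # Give any leftover interviews to the strata with the largest remainders.
    for k in sorted(weights, key=lambda k: remainder[k], reverse=True)[:leftover]:
        alloc[k] += 1
    return alloc


def fill_quotas(completes: Iterable[Tuple[str, str]],
                targets: Dict[str, int]) -> Tuple[List[str], Counter]:
    """Accept completed interviews in arrival order until each stratum's quota is
    full; completes from full (or untargeted) strata are not counted."""
    filled: Counter = Counter()
    accepted = []
    for respondent_id, stratum in completes:
        if filled[stratum] < targets.get(stratum, 0):
            filled[stratum] += 1
            accepted.append(respondent_id)
    return accepted, filled


# Hypothetical weights (percent of the eligible adult population per age group).
targets = quota_targets(200, {"18-29": 50, "30-49": 35, "50+": 15})
```

Because older respondents might answer first while younger ones lag, monitoring `filled` during fieldwork shows which strata still need calls, which is the intuition behind actively monitoring strata.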

Rural areas were highlighted as disadvantaged: fewer people have phones, networks are unreliable and many places are not connected to the electricity grid. In Kenya, a lower proportion of people owned phones in rural than in urban areas (39% compared with 58%) (18). A study in rural Uganda found that about 63% of patients had phones (11), while a study in Nigeria found that about 25% of those who answered calls were not the phone owners (21). Network and power failures have been reported as a challenge for IVR implementation in Ethiopia (22). Nationally delivered IVR surveys need deliberate measures to ensure that a representative proportion of respondents come from rural areas, so that findings are not skewed towards urban areas.

Participants indicated that the population needs to be sensitized before the survey takes place. Community sensitization to support public health interventions has been found effective in Kenya, The Gambia and Cameroon (23-25). In Uganda, radio and word of mouth are the most common sources of information for households (15). In this context, community sensitization via radio could enhance the IVR response rate. Sensitization could also reduce the number of people who are unwilling to answer calls from unknown numbers.

There were diverse views on using incentives in IVR surveys. Some community members wanted participants to receive incentives, while others were against it. Researchers, and to a certain extent policy makers, were also against giving incentives unless they compensated respondents for their time. It was agreed that if incentives were given, they should not be in cash and should not be a prerequisite for participating in an IVR survey. From a researcher's perspective, incentives could increase participation but could also cut into research budgets and lead to biased information. The usefulness of incentives for increasing participation rates has been explored in public health interventions (26). However, the quality of evidence that incentives increase the uptake of interventions, such as in child health, has been found to be low (27). Ethical concerns about financial incentives also need to be studied (28). Whereas incentives could still play a role in certain circumstances, the focus for IVR should be on increasing the representativeness and validity of the data; if this can be achieved with smaller incentives, that would benefit both the science and research costs.

Most participants preferred IVR calls to be made multiple times to reach those who would be out of network coverage or busy with work that prevents them from answering the phone. Other studies have found that users want messages sent several times a day or at specific times so that they can access them (29,30). Whereas an SMS can still be read later if it arrives when the recipient is busy, an IVR call has to reach respondents at a time when they can answer and go through the questions.

Participants highlighted the potential for IVR to lower costs compared with traditional face-to-face methods, since it could reach populations that would normally be inaccessible. Other studies have also reported lower maintenance costs for IVR studies (31-33), although start-up costs may be high. Reduced maintenance costs would be a strong rationale for using IVR to generate estimates of the prevalence of NCD risk factors.

Some of the women's FGDs also raised concern that responding to IVR calls could lead to domestic violence. Domestic violence in Uganda has been noted to be bidirectional, from men to women as well as from women to men. In the Uganda Demographic and Health Survey 2006, 59% of ever-married women reported having ever experienced physical or sexual violence from their husband or partner, while 24% of ever-married men reported having ever experienced physical or sexual violence from their wife or partner (34). Gender differences in responding to IVR could be investigated in further research.

Face-to-face interviews can be conducted with people who are blind or deaf, whereas IVR needs special technology to address their needs. Concerns were raised that an IVR delivered in a single format could miss blind and deaf people. Technology has advanced to support such people, for example with a touch phone for the blind (35). IVR surveys need to be inclusive, and survey implementers should offer options for deaf and blind respondents.

Study limitations

This was a qualitative exploration and hence could not quantify the extent to which particular limitations or opportunities would work against or for IVR. A quantitative study of timing and incentives could establish what level of incentive would be acceptable in this community and the best timing for calls. However, including community members as well as key informants from research and NCD policy helped to triangulate community perceptions with those of researchers and policy makers. The second limitation is that the FGD participants had not taken part in an actual IVR survey; their perceptions may change once they know how it operates. However, these views are still pertinent as they can help shape initial IVR interventions. Finally, FGD participants were drawn from areas in or near Kampala, the capital city, and their views may not represent the broader Ugandan population.

Conclusions

IVR data collection is a promising mechanism because increasing numbers of people own and use mobile phones. Coupled with sensitization and well-timed calls, many people may participate. However, challenges remain: the very young and the very old, people with disabilities, those with low technology literacy and those living in rural areas may not easily participate in IVR surveys.

Acknowledgments

We are grateful to our research teams who developed the initial versions, translated survey instruments, collected data, made audio recordings and conducted field work as well as all respondents.

Funding: This work was supported by the Bloomberg Philanthropies under the Bloomberg Philanthropies Data for Health Initiative (Grant No. 119668). We thank our collaborators in the NCD component at the Centers for Disease Control and Prevention (CDC), the CDC Foundation and the World Health Organization.

References

- Luciani S, Hennis A. Commentary: setting priorities in NCD prevention and control. Cost Eff Resour Alloc 2018;16:53. [Crossref] [PubMed]

- collaborators NCDC. NCD Countdown 2030: worldwide trends in non-communicable disease mortality and progress towards Sustainable Development Goal target 3.4. Lancet 2018;392:1072-88. [Crossref] [PubMed]

- World Health Organization. Uganda, Noncommunicable Diseases (NCD) Country Profiles, retrieved March 9, 2019. Available online: https://www.who.int/nmh/countries/uga_en.pdf. 2016.

- Government of Uganda. Health Sector Development Plan 2015/16 - 2019/20. Kampala: Ministry of Health; 2015.

- Organization. WH. STEPS: A framework for surveillance. Retrieved March 9, 2019. Available online: http://www.who.int/ncd_surveillance/en/steps_framework_dec03.pdf. 2003.

- Ministry of Health. Non-Communicable Disease Risk Factor Baseline Survey: UGANDA 2014 REPORT. Kampala2014.

- Pariyo GW, Wosu AC, Gibson DG, et al. Moving the Agenda on Noncommunicable Diseases: Policy Implications of Mobile Phone Surveys in Low and Middle-Income Countries. J Med Internet Res 2017;19:e115. [Crossref] [PubMed]

- Rose GL, Badger GJ, Skelly JM, et al. A Randomized Controlled Trial of IVR-Based Alcohol Brief Intervention to Promote Patient-Provider Communication in Primary Care. J Gen Intern Med 2016;31:996-1003. [Crossref] [PubMed]

- Sinadinovic K, Wennberg P, Berman AH. Population screening of risky alcohol and drug use via Internet and Interactive Voice Response (IVR): a feasibility and psychometric study in a random sample. Drug Alcohol Depend 2011;114:55-60. [Crossref] [PubMed]

- Bank. TW. Mobile cellular subscriptions (per 100 people). Retrieved March 9, 2019, from The World Bank: Available online: http://data.worldbank.org/indicator/IT.CEL.SETS.P2. 2019.

- Kim J, Zhang W, Nyonyitono M, et al. Feasibility and acceptability of mobile phone short message service as a support for patients receiving antiretroviral therapy in rural Uganda: a cross-sectional study. J Int AIDS Soc 2015;18:20311. [Crossref] [PubMed]

- Mangwi Ayiasi R, Atuyambe LM, Kiguli J, et al. Use of mobile phone consultations during home visits by Community Health Workers for maternal and newborn care: community experiences from Masindi and Kiryandongo districts, Uganda. BMC Public Health 2015;15:560. [Crossref] [PubMed]

- Gibson DG, Pereira A, Farrenkopf BA, et al. Mobile Phone Surveys for Collecting Population-Level Estimates in Low- and Middle-Income Countries: A Literature Review. J Med Internet Res 2017;19:e139. [Crossref] [PubMed]

- Graneheim UH, Lundman B. Qualitative content analysis in nursing research: concepts, procedures and measures to achieve trustworthiness. Nurse Educ Today 2004;24:105-12. [Crossref] [PubMed]

- Uganda Bureau of Statistics. The National Population and Housing Census 2014 – Main Report. Kampala, Uganda: Government of Uganda, 2016.

- Rahman MS, Hanifi S, Khatun F, et al. Knowledge, attitudes and intention regarding mHealth in generation Y: evidence from a population based cross sectional study in Chakaria, Bangladesh. BMJ Open 2017;7:e016217.

- Swahn MH, Braunstein S, Kasirye R. Demographic and psychosocial characteristics of mobile phone ownership and usage among youth living in the slums of Kampala, Uganda. West J Emerg Med 2014;15:600-3.

- Wesolowski A, Eagle N, Noor AM, et al. Heterogeneous mobile phone ownership and usage patterns in Kenya. PLoS One 2012;7:e35319.

- Harvey EJ, Rubin LF, Smiley SL, et al. Mobile Phone Ownership Is Not a Serious Barrier to Participation in Studies: Descriptive Study. JMIR Mhealth Uhealth 2018;6:e21.

- Labrique A, Blynn E, Ahmed S, et al. Health Surveys Using Mobile Phones in Developing Countries: Automated Active Strata Monitoring and Other Statistical Considerations for Improving Precision and Reducing Biases. J Med Internet Res 2017;19:e121.

- Menson WNA, Olawepo JO, Bruno T, et al. Reliability of Self-Reported Mobile Phone Ownership in Rural North-Central Nigeria: Cross-Sectional Study. JMIR Mhealth Uhealth 2018;6:e50.

- Daftary A, Hirsch-Moverman Y, Kassie GM, et al. A Qualitative Evaluation of the Acceptability of an Interactive Voice Response System to Enhance Adherence to Isoniazid Preventive Therapy Among People Living with HIV in Ethiopia. AIDS Behav 2017;21:3057-67.

- Njomo DW, Masaku J, Mwende F, et al. Local stakeholders' perceptions of community sensitization for school-based deworming programme in Kenya. Trop Dis Travel Med Vaccines 2017;3:15.

- Dierickx S, O'Neill S, Gryseels C, et al. Community sensitization and decision-making for trial participation: A mixed-methods study from The Gambia. Dev World Bioeth 2018;18:406-19.

- Wamai RG, Ayissi CA, Oduwo GO, et al. Assessing the effectiveness of a community-based sensitization strategy in creating awareness about HPV, cervical cancer and HPV vaccine among parents in North West Cameroon. J Community Health 2012;37:917-26.

- Lindsay JA, Minard CG, Hudson S, et al. Using prize-based incentives to enhance daily interactive voice response (IVR) compliance: a feasibility study. J Subst Abuse Treat 2014;46:74-7.

- Bassani DG, Arora P, Wazny K, et al. Financial incentives and coverage of child health interventions: a systematic review and meta-analysis. BMC Public Health 2013;13 Suppl 3:S30.

- Lunze K, Paasche-Orlow MK. Financial incentives for healthy behavior: ethical safeguards for behavioral economics. Am J Prev Med 2013;44:659-65.

- Laidlaw R, Dixon D, Morse T, et al. Using participatory methods to design an mHealth intervention for a low income country, a case study in Chikwawa, Malawi. BMC Med Inform Decis Mak 2017;17:98.

- Lyzwinski LN, Caffery LJ, Bambling M, et al. Consumer perspectives on mHealth for weight loss: a review of qualitative studies. J Telemed Telecare 2018;24:290-302.

- Falconi M, Johnston S, Hogg W. A scoping review to explore the suitability of interactive voice response to conduct automated performance measurement of the patient's experience in primary care. Prim Health Care Res Dev 2016;17:209-25.

- Andersson C, Danielsson S, Silfverberg-Dymling G, et al. Evaluation of Interactive Voice Response (IVR) and postal survey in follow-up of children and adolescents discharged from psychiatric outpatient treatment: a randomized controlled trial. Springerplus 2014;3:77.

- David P, Buckworth J, Pennell ML, et al. A walking intervention for postmenopausal women using mobile phones and Interactive Voice Response. J Telemed Telecare 2012;18:20-5.

- Uganda Bureau of Statistics (UBOS) and ORC Macro. Uganda Demographic and Health Survey 2006. Calverton, Maryland, USA: UBOS and ORC Macro, 2007.

- Yong R. "VisionTouch Phone" for the Blind. Malays J Med Sci 2013;20:1-4.

Cite this article as: Rutebemberwa E, Namutundu J, Gibson DG, Labrique AB, Ali J, Pariyo GW, Hyder AA. Perceptions on using interactive voice response surveys for non-communicable disease risk factors in Uganda: a qualitative exploration. mHealth 2019;5:32.