Technology-driven methodologies to collect qualitative data among youth to inform HIV prevention and care interventions

Introduction

Technology-mediated interventions and mHealth (i.e., the use of mobile platforms for medical and public health-supported interventions) provide an opportunity to reach those who experience multiple barriers to HIV prevention services (1-5). mHealth HIV interventions are becoming increasingly popular and include the use of mobile text messaging and mobile phone applications (apps), often integrating counseling techniques through telehealth or text-based messaging (6-9). In one review, Muessig et al. [2013] identified 55 unique mobile apps that address HIV prevention or care; however, these apps were not frequently downloaded or highly rated by their users (10). Even though mobile apps for HIV intervention are a popular platform for developers, it can often be challenging to encourage app users to download these apps and use them regularly. Muessig et al. [2013] suggested that prior to building an app, developers should involve the community of interest in an iterative data collection process to obtain input from the target audience about app preferences and app evaluation (10).

Involving communities in the development of mHealth apps or online interventions can avoid a common disconnect: too often, the research environment fails to incorporate the voices of the community, potentially reducing the use and effectiveness of the intervention (11). Community-centered approaches to mHealth intervention development can therefore help bridge the potential gap between science and practice by ensuring that interventions are culturally appropriate and driven by community needs and desires. Community voices are often best included in formative research phases, during which researchers collect data from communities of interest as an integral component of developing culturally appropriate interventions (12). This helps to ensure that interventions are developed from community-identified needs (13,14). Ultimately, an iterative research process that centers a community’s needs and preferences may increase the uptake, adherence, and effectiveness of mHealth interventions for HIV prevention (10).

However, common approaches to gaining community input often rely on qualitative data gathered in person, for example through focus group discussions (FGDs) and in-depth interviews (IDIs). Focus groups are typically used to generate information on participants’ views, opinions, and values in a collective context, while IDIs (both structured and unstructured) aim to understand unique perspectives, experiences, beliefs, and motivations at the individual level (15-17). Additionally, youth advisory boards (YABs) can be seen as a special type of participatory, community-centered focus group conducted with youth on an ongoing basis (18). While these proven methodologies have numerous scientific strengths, they may be of limited use for hearing the voices of communities who already struggle with physical, economic, or cultural access to services: the same barriers that keep communities from accessing services may also keep them from taking part in face-to-face data collection. In particular, youth engagement can be limited by structural barriers (e.g., lack of transportation, inconvenient timing) and by reluctance to participate due to stigma or discomfort with group settings or in-person, one-on-one interactions (19). These factors introduce biases that limit the quality of face-to-face qualitative data, e.g., social desirability bias or selection biases created by differential likelihood of recruitment and attendance (20).

With the advancement of technology, researchers have been able to adapt in-person data collection methods to the online environment and overcome some of the limitations of traditional in-person methods (21-23). Online methods have almost exclusively applied the same techniques in a digital space, that is, conducting FGDs or IDIs online. However, digital environments offer a range of possibilities for innovative data collection techniques. In this paper, we describe a range of techniques for collecting qualitative data via online platforms to guide youth-centered interventions, and we outline their relative advantages and disadvantages compared with online and face-to-face FGDs and IDIs. We then use four case studies to illustrate the methodologies and their findings. We conclude with a series of recommendations for researchers considering online formative qualitative data collection.

Online focus groups

Focus groups are usually conducted in face-to-face group settings; however, online focus groups are increasingly used with adolescents and young adults (24). During an online focus group, a moderator poses a question in audio, written, or video format using a web-based platform (e.g., FocusGroupIt). Group members then have the opportunity to respond and interact with one another through either talking or typing their replies (25). There are two general types of online focus group methodologies: asynchronous and synchronous. In asynchronous focus groups, a moderator posts a question and participants have the opportunity to respond at their own pace such that all participants are not required to be online at the same time. Synchronous focus groups are typically conducted with a group of participants who log on for a given amount of time and communicate in real time with one another through chat rooms or, more recently, web conferencing technology (26).

Case study #1: Test Rehearsal asynchronous online focus groups

Test Rehearsal is an online intervention designed to increase HIV self-testing among young men who have sex with men (YMSM) and transgender youth (aged 14–19 years). The intervention was designed to give YMSM and transgender youth a virtual HIV self-testing experience through avatars, animations, and a play-through story design. To inform development, two online asynchronous focus groups were conducted to elicit youth perspectives on barriers and facilitators to HIV testing and on perceptions of testing in general and self-testing specifically, including participants’ perceived ability and strategies to cope with a positive diagnosis outside a clinical setting.

Between September and December 2016, ten youth (mean age: 17.7 years) were recruited through posts on LGBTQI Facebook groups and Tumblr blogs, and via emails to nationwide LGBTQI organizations and local university campus-based LGBTQI listservs, to participate in two asynchronous focus groups. Groups were conducted on a password-protected WordPress forum site designed specifically for the study. Youth were advised to choose an anonymous profile name that could not be linked to their real name or other online usernames. A moderator from the research team posted questions on the forum twice each day, in the morning and afternoon, for a total of 3 days. Participants were instructed to visit the board at least twice a day to respond to moderator questions and interact in discussions with other group members.

Participation was high: all participants responded at least once per day over the course of the 3-day group, and most (80%) responded to both daily posted questions. The groups generated important data to guide the translation of the self-testing scenario into digital content, including concrete recommendations for increasing self-testing and for ensuring that the intervention included clear and explicit steps to engage in care after a positive test. Limitations included barriers to participation (e.g., 12 youth completed screening and consent but did not participate) and low engagement of racial/ethnic minority and transgender youth (e.g., 90% identified as gay, 70% were White).
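For teams running a similar asynchronous board, participation figures like those above can be tallied from the forum’s reply log. The sketch below is a minimal illustration under stated assumptions, not the study’s analysis code: it assumes a hypothetical export with one `(participant, day, question)` tuple per reply, uses `"am"` and `"pm"` to stand for the twice-daily moderator questions, and `engagement_summary` is a name of our own choosing.

```python
from collections import defaultdict

# Hypothetical reply-log format: (participant_id, day, question) per forum reply,
# where question is "am" or "pm" for the twice-daily moderator posts.
Reply = tuple[str, int, str]
QUESTIONS = ("am", "pm")


def engagement_summary(replies: list[Reply], participants: list[str], days: int) -> dict[str, float]:
    """Return the share of enrolled participants who (a) replied at least once on
    every day of the group and (b) replied to both questions on every day."""
    if not participants:
        raise ValueError("participants must not be empty")

    # Collect the (day, question) slots each participant replied to.
    answered: dict[str, set[tuple[int, str]]] = defaultdict(set)
    for pid, day, question in replies:
        answered[pid].add((day, question))

    all_days = set(range(1, days + 1))
    every_day = both_every_day = 0
    for pid in participants:
        slots = answered[pid]  # empty set if the participant never posted
        if all_days <= {day for day, _ in slots}:
            every_day += 1
        if all((d, q) in slots for d in all_days for q in QUESTIONS):
            both_every_day += 1

    n = len(participants)
    return {"every_day": every_day / n, "both_every_day": both_every_day / n}


# Example: a 3-day group with three participants.
log = [("p1", d, q) for d in (1, 2, 3) for q in QUESTIONS]  # both questions daily
log += [("p2", d, "am") for d in (1, 2, 3)]  # morning question only
log += [("p3", 1, "am")]  # day 1 only
summary = engagement_summary(log, ["p1", "p2", "p3"], days=3)
```

In this toy log, p1 and p2 count toward the every-day share (2 of 3), while only p1 answered both questions each day (1 of 3). Enrolled participants who never posted are kept in the denominator, which matches how the percentages in the text are expressed relative to all group members.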

Case study #2: iTech youth advisory council (YAC) synchronous online focus groups

The University of North Carolina/Emory Center for Innovative Technology (iTech) is a National Institutes of Health cooperative agreement within the Adolescent Medicine Trials Network for HIV/AIDS Interventions (ATN). iTech aims to impact the HIV epidemic by conducting innovative, interdisciplinary research on technology-based interventions across the HIV prevention and care continuum for adolescents and young adults in the United States (27). To ensure sustained youth involvement in iTech protocols, iTech uses online FGD methodologies to engage YAB members from eight geographically diverse iTech sites in a monthly Youth Advisory Council (YAC) meeting held by videoconference. Youth aged 15–24 years were nominated by their local YABs to serve on the council. iTech’s YAC is a structured forum for soliciting input from youth directly affected by iTech studies on research priorities, protocol vetting, study implementation, and research challenges. YAC meetings also serve as an opportunity for education, training, and discussion of results and of strategies for disseminating research findings. Youth across the country join via ZOOM, a HIPAA-compliant, cloud-hosted videoconferencing service that allows them to call into meetings without creating a login or downloading an app; they can access ZOOM from their phones, tablets, or computers. The meetings are planned and facilitated by two iTech staff members in the same age range who have expertise in youth engagement, and staff members take notes on the content of the discussions.

YAC meetings began in June 2017 and have been held consistently to date. The YAC has provided feedback on all 11 iTech protocols. Each month, five key takeaways from the meeting are posted on the iTech website (see Figure 1). Videoconference meetings are a low-cost means of engaging youth from diverse geographic locations, enhancing the research team’s ability to gain meaningful input on an ongoing basis. YAC members have reported that the convenience of videoconference meetings allows them to stay engaged even when they are busy. Members have built rapport with one another and with iTech staff through a consistent meeting schedule, a group text chat, and time to catch up and socialize at the end of each call.

Crowdsourcing

Crowdsourcing involves a group of people responding to an open call to complete a task or answer questions. It rests on the premise that community members working together collaboratively will be more efficient and effective than any one individual (28). Over the past decade, crowdsourcing has been used increasingly within the private sector (29) and, more recently, with adolescents and young adults (30). While a number of crowdsourcing approaches exist, open contests are the most common. Contests often take place on online platforms: contributions are solicited from an audience to help solve a problem, entries are judged by a panel of experts, and finalists are celebrated with a prize and/or recognition (31). Online crowdsourcing, which can be conducted anonymously, has been used by UNAIDS to engage nearly 3,500 youth from 79 countries in decision making for HIV programming and policies, and it has been used for the adaptation, development, and implementation of numerous other health interventions, including those for MSM (32,33). A recent scoping review concluded that crowdsourcing can be an effective tool for informing the design and implementation of HIV and sexual health interventions (34).

Case study #3: Tough Talks crowdsourced online contest

Tough Talks is an online intervention focused on enabling YMSM to practice disclosing their HIV serostatus to sex partners through Artificial Intelligence (AI)-driven role-playing scenarios (35). To obtain authentic and realistic disclosure dialogues for inclusion in the intervention, we solicited online submissions from YMSM in the form of comic book dialogues. An online submission platform was developed that included eight comic book panels, each with some introductory text (story starters) to provide context for and initiate a disclosure dialogue. For example, one panel began with the following: “WTF text: You’ve hooked up with this guy a couple times, usually when you see him out. It’s very casual. You receive a text from him: ‘Hey. My friend just told me you’re poz. WTF. Why didn’t you tell me???’” Participants were advised to fill in the rest of the disclosure conversation based on their own or others’ personal experiences. A panel with no introductory text was also included to encourage original submissions. The contest ran for 8 weeks and was advertised on social media (e.g., Facebook, Grindr, Jack’d) to ensure a diverse set of entries. Eligibility criteria included: (I) age 16–29 years; (II) assigned male sex at birth; (III) identifying as a man who has sex with other men; (IV) owning a mobile device or having access to a laptop or desktop computer; and (V) being able to understand, read, and speak English. The website provided specific submission instructions, including entry eligibility (e.g., must include intelligible dialogue), and a brief tutorial on the submission process. Multiple entries were allowed and encouraged. A total of 53 submissions were received (see Figure 2 for an example). Overall, there were 16 daily winners, four weekly winners, and one grand prize winner (as judged by the study team and members of the iTech YAC). User-created dialogue and utterances from the contest were incorporated into the AI-driven disclosure conversations, ultimately creating more realistic scenarios for role-playing within the Tough Talks intervention.

Participant-empowered visual timelines

Participant-empowered data collection methods often involve producing visual representations of a social or health problem or using cues to guide the interview process (e.g., body mapping, photovoice) (36-38). Visualization activities are often accompanied by a series of prompts or guidelines from the researcher; however, the participant is empowered to create their own visual representation of the data, which allows for active engagement with the social or health problem (36). Participants control the visual representation of their narrative. Interviewers can then use the visual representation to elicit more depth, for example, by asking the participant to look closely at the image they have created and talk about patterns they see in their behavior. Visual aids can provide depth and context beyond an individual’s spoken or written words (39,40). Typically, visual aids in qualitative research have included drawings, photography, and film (36,41). Visual timelines, or life-history calendars, are a promising means of creating visual cues that aid autobiographical recall (42) and enhance the complexity and nuance of the data (36). Visual timelines are typically presented as a calendar with cues for important domains or the timing of events, so that the sequence of events over a given period can be understood visually (42).

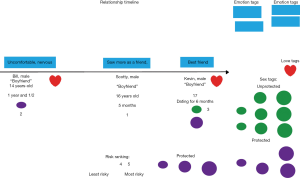

Case study #4: We Prevent visualization timelines

“We Prevent” is an iTech ATN project that seeks to develop and pilot a relationship skills-building HIV prevention intervention for YMSM and their male partners (43). The first phase of the project involved IDIs to refine the intervention content. All IDIs were conducted online through HIPAA-secure video chat software. During the IDIs, participants created a visual relationship timeline using virtual stickers to develop an overview of their recent dating and relationship history (36,43). The IDI followed a structured process whereby participants placed stickers on the timeline in response to questions about their feelings, desires, and communication. Participants added non-identifying nicknames for up to five “sexual and/or romantic partners” who were “significant or memorable,” with each participant defining “significant or memorable” for themselves. Participants were guided through their relationship history and answered questions about each relationship by applying stickers with predetermined labels to the timeline. For example, participants used “relationship tag” stickers with definitions (e.g., boyfriend, friend with benefits) and “emotion tag” stickers (e.g., trust, disrespected) for each partner. The interviewer then asked follow-up questions about why certain terms were chosen and about comparisons between relationships.

Between June and November 2018, 30 young men were recruited through social media (e.g., Facebook, Instagram) and completed online interviews. Participants ranged in age from 15 to 19 years (mean age: 17.81) and 48% identified as people of color. Most of the young men identified as gay (83%) or bisexual (13%), and 55% had been with their partner for less than six months. Participants reported no difficulties with the timeline process, and the opportunity to create a visual representation of their relationships was universally met with enthusiasm (see Figure 3 for an example). The relationship timelines were useful in facilitating conversations about relationship characteristics and challenges. Central themes emerging from the timeline process included a lack of communication or implicit assumptions that many young men held about sexual agreements (i.e., the rules for permitting sex with other partners); discussing sexual boundaries and consent; the added strain on relationships due to stigma and family rejection; and developmental changes in relationship and personal goals (e.g., future goals and expectations surrounding autonomy and independence could hinder relationship commitment). The timeline process surfaced these themes in two ways. First, participants could write issues onto the timeline and tag them to their relationships. Second, participants could reflect on the timeline they had created and talk through patterns they observed (e.g., lack of communication with partners) or consistent gaps (e.g., concerns over boundary setting in relationships). Consistent with prior studies (36), the visual timelines deepened understanding of the relationship and HIV prevention needs of YMSM while easing participant discomfort with sensitive topics. The timeline process intentionally used discreet visual cues that allowed participants to describe their relationships and sexual lives on their own terms.

Discussion

Beyond facilitating intervention delivery, technology can serve as a platform for community-centered formative data collection, mitigating some limitations of in-person methods and potentially increasing access to diverse and geographically dispersed youth. Collecting formative data online also means that data are gathered in the same digital space in which the intervention will be delivered, and from the same potential users. Each of these online qualitative methodologies offers numerous advantages. First, online FGDs and IDIs do not require finding and paying for a physical setting, saving researchers and participants time and money (44). Second, text-only online platforms, such as asynchronous FGDs, do not require hiring transcribers or staff to check transcripts for accuracy, since participants produce the transcript as they type (45). Third, given the high levels of internet use in the United States, online qualitative data collection methods have the potential to engage participants from diverse geographical regions (46). Fourth, participants may be more likely to disclose information on “sensitive topics” given the anonymity and convenience of the online platform (47). Web-conferencing software can also allow participants to turn off their camera if they feel uncomfortable with sensitive topics; they may be more comfortable speaking or writing about their experiences when they are not being observed. Advantages reported from participants’ perspective include being able to participate at a time and location most convenient to them, having more time to think about their feelings before crafting a response (in asynchronous methods), and, for some, being able to express themselves in writing (44,48). Studies have found that online qualitative methods, specifically online FGDs, yield data of comparable quality to face-to-face methods (49), produce rich data (50), and achieve high levels of group interactivity (51).

Despite these advantages, online qualitative data collection poses some notable challenges. Each of these methodologies requires internet access and a degree of computer literacy. Participants may have difficulty logging on, and some may have low technology literacy or limited access to a computer or smartphone. It may also be harder to build rapport or to follow visual cues on these platforms. For some participants, the anonymity of online methodologies may actually be a barrier to full engagement; some may prefer the easier rapport that comes from sharing a physical space with someone else. Researchers have used several strategies to overcome challenges with internet access and computer literacy, such as partnering with healthcare organizations and community agencies to provide internet access (27).

Each online method also has its own challenges. In online FGDs, specifically asynchronous focus groups, researchers may miss non-verbal cues; as a result, participants and the researcher may misunderstand one another or lose the nuances conveyed through body language, limiting the moderator’s ability to ask follow-up questions (52,53). Although prior research has found that online and in-person FGDs generate comparable data (54), other research has shown that participants may contribute shorter comments and write fewer words in online FGDs than in in-person FGDs (55). An online platform can also negatively affect group dynamics (22,25), since some participants may be less engaged and there may be more time between responses to prompts (56). Participants also have more time to carefully construct or edit their responses to a question or prompt, which may obscure the richness of an in-person discussion (46). Heated exchanges and rapid topic changes can make the discussion difficult for participants to follow (25,57). To address these concerns, it is critical that the moderator set and present ground rules to participants and closely monitor questions and responses (25,57). Online FGDs may also see more dropouts, either before or during the group, and people may sign up but not attend (58); this occurred in Test Rehearsal and has been reported previously (59,60). However, this issue is not universal with online FGDs. For example, Dubois et al. [2015] (61) successfully enrolled 80 adolescent males aged 14–18 years who identified as gay, bisexual, or queer into four asynchronous focus groups. Of the 80 participants enrolled, 75 (94%) logged in and participated at least once per day over the course of the 3-day group, and 64 (80%) responded to at least one question in all six sessions (61).

Crowdsourcing contests share the limitations of other online modalities. Most notably, participants need relatively high literacy levels and access to computers, phones, or tablets with internet capability to enter their materials in the contest. Organizers must also verify that contest responses come from the specific demographic groups of interest (30), which may be challenging when contestants do not want to disclose sensitive information about themselves (e.g., HIV status). It can also be difficult to identify which unique users accessed contest materials across different sites (30). For example, a person who visits Twitter and Instagram under different usernames would be counted as two unique users rather than one. Some projects have hosted in-person events for submitting entries and/or accepted entries by mail (33). Thus, there may be a need to allow a combination of modalities for contest entries, as well as to identify software that can recognize unique users across different social media sites to accurately capture user engagement.
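One way to approximate unique entrants across platforms, assuming the contest entry form asks every entrant for a contact email alongside their platform username, is to link entries on a normalized, hashed email. The sketch below is hypothetical: the field names and functions are ours, not part of any specific contest platform. A hashed email is a pseudonymous key rather than true anonymization, and this approach will still overcount whenever one person submits with different email addresses.

```python
import hashlib

# Hypothetical entry format: each contest entry records the platform it came
# from, the username on that platform, and a self-reported contact email.
Entry = dict[str, str]


def contact_key(email: str) -> str:
    """Pseudonymous linking key: SHA-256 of the trimmed, lower-cased email.
    Treating addresses case-insensitively is an assumption of this sketch."""
    normalized = email.strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def count_platform_accounts(entries: list[Entry]) -> int:
    """Naive count: each (platform, username) pair is treated as a new person."""
    return len({(e["platform"], e["username"]) for e in entries})


def count_unique_entrants(entries: list[Entry]) -> int:
    """Linked count: entries sharing a normalized email are treated as one person."""
    return len({contact_key(e["email"]) for e in entries})


entries = [
    {"platform": "twitter", "username": "jo_writes", "email": "Jo@Example.org"},
    {"platform": "instagram", "username": "jo.b", "email": " jo@example.org "},
    {"platform": "web", "username": "kk", "email": "kk@example.org"},
]
print(count_platform_accounts(entries))  # 3
print(count_unique_entrants(entries))  # 2
```

Storing only the hash alongside submissions lets the team count returning entrants without keeping raw email addresses next to potentially sensitive contest content.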

The use of visualization timelines in online IDIs has the potential to produce rich data and insights into a participant’s life, as was seen in We Prevent. IDIs also offer more opportunity to build rapport than other online modalities such as FGDs and crowdsourcing. However, visualization timelines are more time-intensive, as they require the researcher to walk through the prompts and attend carefully to participants’ stories with follow-up prompts (36). The researcher needs to be thoroughly trained in the protocol to remain flexible with participants who may have difficulty describing their life narrative. Prior research has shown that some participants go through the timeline systematically, whereas others narrate their stories in a non-linear manner and add further complexity (36). Although this is a strength of the modality, the researcher must allow for these deviations and help participants describe their experiences in their own way. It is also critical that visual timelines be discreet when addressing sensitive topics such as sex, to protect participants’ privacy. Predefined labels (e.g., specific emotions or relationships) may bias participants; therefore, the researcher needs to ensure that participants feel empowered to use their own words and needs to probe for what those words mean to them (36). Finally, although this is not unique to visualization timelines, the method relies on recall and on the researcher actively engaging with participants, both of which may bias participants’ responses.

Considerations for conducting online data collection with youth

In the following section, we provide practical advice for researchers considering online methods to gather qualitative data from youth to inform intervention development. We frame these recommendations as questions that researchers can ask themselves to decide whether online methods are appropriate and which to use and, once methods are chosen, how to maximize their success.

What is the research question to be addressed?

Where appropriate, online technologies can expand and enhance how qualitative research is undertaken with youth. However, it is critical that the research question determine the data collection modality, and not the other way around. It is easy to get excited by the novelty of online methodologies, but the decision to collect qualitative data online, and the choice of method, should be driven by the intent and desired content of the data to be collected.

Does data collection become intervention?

Although perhaps not specific to online methodologies, it is important for researchers to consider whether participation in study activities may itself constitute an intervention. For example, in the We Prevent study described above, participants created visual timelines of their relationships; their critical reflection on these timelines may have acted as an intervention, prompting them to make changes in their relationships. Similarly, Ybarra et al. [2014] (24) found that the majority of the 75 sexual minority male adolescents who participated in an asynchronous FGD study reported changes in attitudes or views about sex, plans for future behavior change, and/or a reduced sense of isolation (24). On a practical note, participants involved in the online formative phase should not be included in testing of the eventual intervention, to avoid contamination effects.

What technology platform should be used to collect formative data?

It is critical to ensure that platforms used to engage and communicate with youth are secure, easy to use, and functional even in low-bandwidth settings. Protecting the privacy and confidentiality of participants must also be a key factor in the choice of platform. Once a platform is selected, it is equally important that participants understand the potential for confidentiality breaches and are informed of ways to avoid them (e.g., ensuring they have access to a private space when using a webcam, reminding them to log out of the online focus group platform after posting).

Should the entire research process occur online?

Some researchers have adopted hybrid models in which online recruitment is augmented by in-person events to create greater buy-in and build initial rapport, thereby minimizing the potential for dropouts, poor attendance, and limited engagement. For example, Zhang et al. [2019] conducted an innovation contest to help design a sexual health campaign, employing both in-person and online engagement strategies. A total of 96 image submissions from 76 participants were received over the 43 days that the contest was open, and participants were twice as likely to have learned about the contest through an in-person event as through social media (62). Some in-person or even telephone confirmation can also help screen out fraudulent participants.

Who will be conducting/moderating the data collection activities?

Although this question is also relevant to in-person qualitative inquiry, researchers conducting activities online need an additional set of skills. First, moderators must themselves be adept at using the chosen technology platform, including troubleshooting any issues that arise for participants. Second, for activities using videoconferencing, it is critical to employ moderators who can build rapport and quickly put participants at ease. Finally, moderators must devote their full attention to participants and keep distractions (e.g., answering a text message) and disturbances (e.g., other research staff entering the room) to a minimum.

Conclusions

Innovations in online technologies offer researchers viable, cost-effective, and valuable means of collecting formative data. The examples in this paper demonstrate the acceptability and feasibility of using a range of community-centered online qualitative methodologies with adolescents and youth to inform HIV prevention interventions. Each of these modalities offers unique advantages for research, particularly when working with youth in geographically dispersed areas and eliciting youth perspectives on sensitive topics. These methodological innovations have the potential to better position HIV prevention interventions to meet the unique needs of youth.

Acknowledgments

We gratefully thank all of the adolescents and young adults who participated in these studies; study staff members; and colleagues for their valuable contributions to the implementation of these projects. We also thank Dr. Sonia Lee for her support of this work.

Funding: This work was supported by Eunice Kennedy Shriver National Institute of Child Health and Human Development grant (U19HD089881).

Footnote

Provenance and Peer Review: This article was commissioned by the Guest Editor (Lisa Hightow-Weidman) for the series “Technology-based Interventions in HIV Prevention and Care Continuum among American Youth” published in mHealth. The article has undergone external peer review.

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at http://dx.doi.org/10.21037/mhealth-2020-5). The series “Technology-based Interventions in HIV Prevention and Care Continuum among American Youth” was commissioned by the editorial office without any funding or sponsorship. LHW reports grants from NICHD, during the conduct of the study. The authors have no other conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

- Boulos MNK, Wheeler S, Tavares C, et al. How smartphones are changing the face of mobile and participatory healthcare: an overview, with example from eCAALYX. Biomed Eng Online 2011;10:24.

- Arya M, Kumar D, Patel S, et al. Mitigating HIV health disparities: the promise of mobile health for a patient-initiated solution. Am J Public Health 2014;104:2251-5.

- Fox S, Duggan M. Mobile Health 2012: Half of Smartphone owners use their devices to get health information and one-fifth of Smartphone owners have health apps. Pew Internet & American Life Project, California Healthcare Foundation 2012. Available online: https://www.pewresearch.org/internet/2012/11/08/mobile-health-2012/

- Terry M. Medical apps for smartphones. Telemedicine and e-Health 2010;16:17-22.

- Ybarra ML, Bull SS. Current trends in Internet- and cell phone-based HIV prevention and intervention programs. Curr HIV/AIDS Rep 2007;4:201-7.

- Catalani C, Philbrick W, Fraser H, et al. mHealth for HIV treatment & prevention: a systematic review of the literature. Open AIDS J 2013;7:17.

- Cornelius JB, Cato M, St Lawrence J, et al. Development and pretesting multimedia HIV-prevention text messages for mobile cell phone delivery. J Assoc Nurses AIDS Care 2011;22:407.

- Cornelius JB, Cato MG, Toth JL, et al. Following the trail of an HIV-prevention Web site enhanced for mobile cell phone text messaging delivery. J Assoc Nurses AIDS Care 2012;23:255.

- Cornelius JB, Lawrence JSS, Howard JC, et al. Adolescents’ perceptions of a mobile cell phone text messaging-enhanced intervention and development of a mobile cell phone-based HIV prevention intervention. J Spec Pediatr Nurs 2012;17:61.

- Muessig KE, Pike EC, LeGrand S, et al. Mobile phone applications for the care and prevention of HIV and other sexually transmitted diseases: a review. J Med Internet Res 2013;15:e1.

- Kelly JA, Somlai AM, DiFranceisco WJ, et al. Bridging the gap between the science and service of HIV prevention: transferring effective research-based HIV prevention interventions to community AIDS service providers. Am J Public Health 2000;90:1082.

- Higgins DL, O'Reilly K, Tashima N, et al. Using formative research to lay the foundation for community level HIV prevention efforts: an example from the AIDS Community Demonstration Projects. Public Health Rep 1996;111:28-35.

- Fisher PA, Ball TJ. Balancing empiricism and local cultural knowledge in the design of prevention research. J Urban Health 2005;82:iii44-55.

- Wandersman A, Florin P. Community interventions and effective prevention. Am Psychol 2003;58:441.

- Gill P, Baillie J. Interviews and focus groups in qualitative research: an update for the digital age. Br Dent J 2018.

- Gill P, Stewart K, Treasure E, et al. Methods of data collection in qualitative research: interviews and focus groups. Br Dent J 2008;204:291-5.

- Halcomb EJ, Gholizadeh L, DiGiacomo M, et al. Literature review: considerations in undertaking focus group research with culturally and linguistically diverse groups. J Clin Nurs 2007;16:1000-11.

- Krueger RA, King JA. Involving Community Members in Focus Groups. Thousand Oaks, CA, USA: Sage Publications, 1998.

- Gulliver A, Griffiths KM, Christensen H. Perceived barriers and facilitators to mental health help-seeking in young people: a systematic review. BMC Psychiatry 2010;10:113.

- Cantrell J, Hair EC, Smith A, et al. Recruiting and retaining youth and young adults: challenges and opportunities in survey research for tobacco control. Tob Control 2018;27:147-54.

- Thomas C, Wootten A, Robinson P. The experiences of gay and bisexual men diagnosed with prostate cancer: Results from an online focus group. Eur J Cancer Care (Engl) 2013;22:522-9.

- Mann C, Stewart F. Internet communication and qualitative research: A handbook for researching online. London, UK: Sage, 2000.

- Fox FE, Morris M, Rumsey N. Doing synchronous online focus groups with young people: Methodological reflections. Qual Health Res 2007;17:539-47.

- Ybarra ML, DuBois LZ, Parsons JT, et al. Online focus groups as an HIV prevention program for gay, bisexual, and queer adolescent males. AIDS Educ Prev 2014;26:554-64.

- Stewart K, Williams M. Researching online populations: the use of online focus groups for social research. Qual Res 2005;5:395-416.

- Han J, Torok M, Gale N, et al. Use of Web Conferencing Technology for Conducting Online Focus Groups Among Young People With Lived Experience of Suicidal Thoughts: Mixed Methods Research. JMIR Ment Health 2019;6:e14191.

- Hightow-Weidman LB, Muessig K, Rosenberg E, et al. University of North Carolina/Emory Center for Innovative Technology (iTech) for Addressing the HIV Epidemic Among Adolescents and Young Adults in the United States: Protocol and Rationale for Center Development. JMIR Res Protoc 2018;7:e10365.

- Chawla S, Hartline JD, Sivan B. Optimal crowdsourcing contests. Games Econ Behav 2019;113:80-96.

- Bayus BL. Crowdsourcing New Product Ideas over Time: An Analysis of the Dell IdeaStorm Community. Manage Sci 2012;59.

- Mathews A, Farley S, Blumberg M, et al. HIV cure research community engagement in North Carolina: a mixed-methods evaluation of a crowdsourcing contest. J Virus Erad 2017;3:223-8.

- Ong JJ, Bilardi JE, Tucker JD. Wisdom of the Crowds: Crowd-Based Development of a Logo for a Conference Using a Crowdsourcing Contest. Sex Transm Dis 2017;44:630-6.

- Hildebrand M, Ahumada C, Watson S. CrowdOutAIDS: crowdsourcing youth perspectives for action. Reprod Health Matters 2013;21:57-68.

- Tang W, Wei C, Cao B, et al. Crowdsourcing to expand HIV testing among men who have sex with men in China: A closed cohort stepped wedge cluster randomized controlled trial. PLoS Med 2018;15:e1002645.

- Tang W, Ritchwood TD, Wu D, et al. Crowdsourcing to Improve HIV and Sexual Health Outcomes: a Scoping Review. Curr HIV/AIDS Rep 2019;16:270-8.

- Muessig KE, Knudtson KA, Soni K, et al. "I didn't tell you sooner because I didn't know how to handle it myself." Developing a virtual reality program to support HIV-status disclosure decisions. Digit Cult Educ 2018;10:22-48.

- Goldenberg T, Finneran C, Andes KL, et al. Using participant-empowered visual relationship timelines in a qualitative study of sexual behaviour. Glob Public Health 2016;11:699-718.

- Lys C, Gesink D, Strike C, et al. Body mapping as a youth sexual health intervention and data collection tool. Qual Health Res 2018;28:1185-98.

- Plunkett R, Leipert BD, Ray SL. Unspoken phenomena: Using the photovoice method to enrich phenomenological inquiry. Nurs Inq 2013;20:156-64.

- Bagnoli A. Beyond the standard interview: The use of graphic elicitation and arts-based methods. Qual Res 2009;9:547-70.

- Namey EE, Guest G, Mitchell ML. Collecting qualitative data: a field manual for applied research. California, USA: Sage, 2012.

- Bourey C, Stephenson R, Bartel D, et al. Pile sorting innovations: Exploring gender norms, power and equity in sub-Saharan Africa. Glob Public Health 2012;7:995-1008.

- Belli RF. The structure of autobiographical memory and the event history calendar: Potential improvements in the quality of retrospective reports in surveys. Memory 1998;6:383-406.

- Gamarel KE, Darbes LA, Hightow-Weidman L, et al. The development and testing of a relationship skills intervention to improve HIV prevention uptake among young gay, bisexual, and other men who have sex with men and their primary partners (we prevent): Protocol for a randomized controlled trial. JMIR Res Protoc 2019;8:e10370.

- Reid DJ, Reid FJM. Online focus groups: An in-depth comparison of computer-mediated and conventional focus group discussions. Int J Mark Res 2005;47:131-62.

- Walston JT, Lissitz RW. Computer-mediated focus groups. Evaluation Review 2000;24:457-83.

- Reisner SL, Randazzo RK, White Hughto JM, et al. Sensitive health topics with underserved patient populations: Methodological considerations for online focus group discussions. Qual Health Res 2018;28:1658-73.

- Prescott TL, Phillips II G, DuBois LZ, et al. Reaching adolescent gay, bisexual, and queer men online: development and refinement of a national recruitment strategy. J Med Internet Res 2016;18:e200.

- Zwaanswijk M, van Dulmen S. Advantages of asynchronous online focus groups and face-to-face focus groups as perceived by child, adolescent and adult participants: a survey study. BMC Res Notes 2014;7:756.

- van Eeden-Moorefield B, Proulx CM, Pasley K. A comparison of internet and face-to-face (FTF) qualitative methods in studying the relationships of gay men. J GLBT Fam Stud 2008;4:181-204.

- Abrams KM, Wang Z, Song YJ, et al. Data richness trade-offs between face-to-face, online audiovisual, and online text-only focus groups. Soc Sci Comp Rev 2015;33:80-96.

- Kite J, Phongsavan P. Insights for conducting real-time focus groups online using a web conferencing service. F1000Res 2017;6:122.

- Denscombe M. The good research guide. Maidenhead, England, UK: Open University Press, 2003.

- Mann C, Stewart F. Internet interviewing. Handbook of Interview Research: Context and Method 2002;29:603-27.

- Woodyatt CR, Finneran CA, Stephenson R. In-person versus online focus group discussions: A comparative analysis of data quality. Qual Health Res 2016;26:741-9.

- Schneider SJ, Kerwin J, Frechtling J, et al. Characteristics of the discussion in online and face-to-face focus groups. Soc Sci Comp Rev 2002;20:31-42.

- Matthews J, Cramer EP. Using technology to enhance qualitative research with hidden populations. Qual Rep 2008;13:301-15.

- Graffigna G, Bosio AC. The influence of setting on findings produced in qualitative health research: A comparison between face-to-face and online discussion groups about HIV/AIDS. Int J Qual Methods 2006;5:55-76.

- Tuttas CA. Lessons learned using web conference technology for online focus group interviews. Qual Health Res 2015;25:122-33.

- Cantrell MA, Lupinacci P. Methodological issues in online data collection. J Adv Nurs 2007;60:544-9.

- Boydell N, Fergie G, McDaid L, et al. Avoiding pitfalls and realising opportunities: Reflecting on issues of sampling and recruitment for online focus groups. Int J Qual Methods 2014;13:206-23.

- DuBois LZ, Macapagal KR, Rivera Z, et al. To have sex or not to have sex? An online focus group study of sexual decision making among sexually experienced and inexperienced gay and bisexual adolescent men. Arch Sex Behav 2015;44:2027-40.

- Zhang Y, Tang S, Li K, et al. Quantitative evaluation of an innovation contest to enhance a sexual health campaign in China. BMC Infect Dis 2019;19:112.

Cite this article as: Gamarel KE, Stephenson R, Hightow-Weidman L. Technology-driven methodologies to collect qualitative data among youth to inform HIV prevention and care interventions. mHealth 2021;7:34.