Evaluating study procedure training methods for a remote daily diary study of sexual minority women

Introduction

Ecological momentary assessment (EMA) has emerged as a methodology that allows researchers to examine thoughts, feelings, and behaviors in real time. The term EMA is used to describe a range of assessment methods that involve collecting data one or more times throughout the day over a specified time period (typically days or weeks). As mobile technology has advanced, self-report EMA data collection is often accomplished using small electronic devices, such as smartphones. The benefits of EMA are discussed at length elsewhere (1-5), but in brief, EMA has several advantages over cross-sectional and traditional longitudinal designs: it minimizes recall bias in self-report assessments, provides data to examine both between- and within-person differences and temporal associations, and maximizes the ecological validity of the data being collected by allowing researchers to remotely assess behaviors as well as internal and physiological states as they naturally occur in daily life (1,6).

An additional logistical advantage of EMA methods is that research can be conducted remotely, with participants never having to come into a research office. Remote assessment can be particularly useful when assessing the daily life of hard-to-reach populations, as gathering adequately sized samples requires a large recruitment radius, making it nearly impossible to meet face-to-face with all participants. One such population that poses recruitment challenges is sexual minority individuals (e.g., lesbian, gay, bisexual), who, in the U.S., are marginalized as well as geographically dispersed (7). Although being able to remotely assess such populations is a notable advantage of EMA, these studies are often complex and finding ways to engage potential participants from afar can be challenging.

Researchers face a variety of obstacles when attempting to recruit and engage hard-to-reach populations, including obtaining high-quality samples of relatively small populations (8). The use of EMA methods adds an extra layer of difficulty to this process given that EMA may require participants to use familiar devices in new ways (e.g., accessing email/text messages regularly to complete surveys, using an app to answer surveys) or even to use devices that may be new to them entirely (e.g., smartphones, wearable accelerometers). Prior research suggests that beyond the equipment itself, participants may also experience uncertainty about how to answer survey prompts (9). The novelty of the EMA equipment and procedures in addition to the responsibility of engaging in study procedures each day can be burdensome for participants (9). It can also be challenging for researchers to appropriately engage participants to ensure sufficiently high compliance with the study protocol (10,11). To increase study compliance and data quality, EMA best practices suggest that it is essential to train participants on all EMA procedures, including how to adequately complete and manage the prompts (9,10,12). This is most commonly done in person to allow researchers to explain the procedures directly. However, when assessing hard-to-reach populations, it is nearly impossible to provide in-person training if participants are geographically dispersed.

One way in which EMA researchers might address the issue of training remote samples is by creating training videos to stand in lieu of in-person training sessions. The use of video as a substitute for face-to-face training has become increasingly common in education and industry, allowing individuals more flexibility in their often-busy schedules (13). Results from the online education literature suggest there are no differences in comprehension between students who receive instruction via video versus in person (13). In addition, past research suggests the use of video instructions is useful in teaching skills, such as implementing behavior-analytic techniques often used in autism interventions (14) and increasing patient compliance in completing preparation tasks prior to medical procedures (15). Research has also found that compared to written materials, video instructions increased participant performance in software training tasks (16), suggesting that video may be an appropriate mode to instruct participants on using new technology.

Given the promising findings of previous research using video instructions, the primary aim of the present study was to experimentally examine whether adding videos to more commonly used written recruitment materials would improve study consent, reduce study dropout, and improve survey completion rates for a remote study that collected daily data during a two-week period from sexual minority women living across the U.S. As part of a larger study (17), an experimental design feature was built in such that participants were assigned to either receive written materials only or receive the same written materials plus the information displayed in video format. A secondary aim of the present study was to examine whether time spent reviewing the online video materials was associated with the aforementioned outcomes, as this may indicate if engagement with the videos led to better outcomes.

We present this article in accordance with the MDAR checklist (available at http://dx.doi.org/10.21037/mhealth-20-116).

Methods

Participants and recruitment

As part of a larger study, 376 young sexual minority women (188 female same-sex couples) were recruited by a market research firm that specializes in recruiting LGBTQ (lesbian, gay, bisexual, transgender, queer or questioning) individuals. All participants met the following criteria: age 18–35, identifies as cisgender female, in a romantic relationship with a woman for at least 3 months, sees partner in person at least once a week, and able to respond to daily surveys between 6 am and 12 pm. Both members of the couple had to be willing to participate to be included. As the larger study focused on relationship factors and alcohol use, in order for a couple to be eligible for the study, at least one participant in each couple had to report being only or mostly attracted to women, had to drink at least three days in the past two weeks, and have at least one binge drinking episode (i.e., 4 or more drinks in one sitting) in the past two weeks. In addition, participants were ineligible if their partner did not consent (i.e., both partners had to consent) or if their partner did not complete the baseline survey (i.e., both partners had to complete baseline prior to being eligible for the daily surveys). In order to participate in the study, all participants had to provide informed consent.

Figure 1 shows a flow diagram of participants as they moved through the study. There was some complexity in the enrollment process in that participant eligibility was contingent not only on their own responses, but also their partners’ responses. For example, as shown in Figure 1, there were two separate phases when participants were withdrawn from the study by the researchers: first, 17 participants who consented to participate were withdrawn because their partners did not consent, and second, one additional participant was withdrawn by the researchers after consenting and completing the baseline survey because her partner did not complete the baseline survey. There were also three different times in the study when participants dropped out: prior to completing the baseline survey, prior to beginning the daily surveys, or during the daily surveys.

Demographic data are only available for participants who were eligible to participate and completed the baseline survey. In total, 326 women provided demographic information, with a mean age of 27.57 years (SD =3.65, range, 19–35). Most (88.3%, n=288) identified as non-Latina. Participants predominately identified as White/Caucasian (71.5%, n=233), with a minority identifying as multiracial (10.4%, n=34), Black/African American (8.6%, n=28), Asian/Asian American (5.8%, n=19), American Indian/Alaska Native (0.6%, n=2), or another race (3.1%, n=10).

Written and video materials

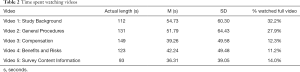

Written and video materials were developed to explain the purpose of the study, the process for completing the baseline and daily surveys, and the risks and benefits of participation. The introductory materials also included a statement about the researchers’ commitment to being inclusive and sensitive, and noted that some questions may not be ideally phrased (e.g., terminology regarding sexual minority individuals in some well-validated questionnaires may be dated). The written materials contained the same information as the videos, and both conditions included information similar to what would be presented to participants as part of the screening/recruitment process and informed consent process of an in-person study that involved EMA methods. The written content that was presented to all participants is available as part of the larger study protocol (17). Based on the written materials, five videos were professionally developed. Each video showed one or more members of the research team in an office setting describing the relevant study-related material. Each video was brief, ranging from 1 minute and 33 seconds to 2 minutes and 29 seconds (total video time: 10 minutes 8 seconds; average video time: 2 minutes 2 seconds). The five video topics were: (I) background on the study; (II) general study procedures; (III) compensation information; (IV) benefits and risks; (V) survey content information.

To deliver the written and video materials, two separate online surveys were developed, one for the written-only content and one for the written + video content. The content was presented on five separate “pages” and participants were required to progress through them in order. For the written-only condition, participants only saw the written information regarding the study. For the written + video condition, participants saw the video and could scroll down on the page to view the text. The video on each page began automatically and participants could pause or stop the video at any time. They also were able to progress to the next page without finishing the video (although they were not explicitly told this was an option or encouraged to do so). The survey automatically tracked the time participants spent on each page (i.e., reviewing videos and/or written materials). We did not have a video-only condition (without any written materials) because most EMA studies provide written materials during in-person studies, so it would be unusual to provide only verbal (or video) instructions.

Procedures

The study procedure was reviewed by the Old Dominion University Institutional Review Board (#839097) and it conforms to the provisions of the Helsinki Declaration (as revised in 2013). To evaluate the effectiveness of the videos, couples were randomized in blocks of six to either the written + video group (videos plus corresponding written materials) or written-only group (only received information in the written format). Couples were randomized, as opposed to individuals, to reduce the possibility of contamination between partners. Participants were blind to condition but the researchers were not, as they had to email the appropriate instructions to participants. Once a couple expressed interest in the study and was determined to be eligible, each person received a separate email from research staff informing them of their eligibility and reminding them that informed consent was needed from both partners to participate. This email also contained the link to either the written + video group or written-only group online survey. The survey for the written + video group included the five videos with the corresponding written materials below each video, while the survey for the written-only group contained only the text information.
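The block randomization of couples can be illustrated with a minimal sketch. The source states only that couples were randomized in blocks of six; the even three-per-condition split within each block, the function name, and the couple identifiers below are assumptions made for illustration, not the study's actual procedure.

```python
import random


def randomize_couples(couple_ids, block_size=6, seed=None):
    """Assign couples to conditions using permuted blocks.

    Assumption: each full block of six holds three couples per condition.
    A final partial block simply takes the first slots of a shuffled block.
    """
    rng = random.Random(seed)
    conditions = ("written + video", "written-only")
    per_condition = block_size // len(conditions)
    assignments = {}
    for start in range(0, len(couple_ids), block_size):
        block = list(conditions) * per_condition  # balanced slots for this block
        rng.shuffle(block)
        for couple_id, condition in zip(couple_ids[start:start + block_size], block):
            assignments[couple_id] = condition
    return assignments


# Hypothetical identifiers for the 188 couples; both partners in a couple
# would receive the survey link for the couple's assigned condition.
assignments = randomize_couples([f"couple_{i:03d}" for i in range(1, 189)], seed=1)
```

Randomizing at the couple level, as in this sketch, means that partners can never be assigned to different conditions, which is the contamination concern described above.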

After reviewing the introductory information (either written or written + video), participants were presented with the informed consent document. If participants did not complete the review of information and consent within 2–3 days, they received a maximum of two reminder emails (approximately 2–3 days apart). To continue in the larger study, both partners in the couple were required to provide informed consent before being sent the baseline survey. Upon completion of the baseline surveys by both partners, participants started the 14 days of daily surveys followed by an end of study survey. All of the surveys were distributed online, delivered via email, and completed by participants remotely (i.e., no face-to-face contact with the research team). Surveys could be completed in their web browser, so no specific apps were required. Each participant could receive up to $77 for her participation ($25 USD for completing the baseline survey, $3 USD for each daily survey, and $10 USD as a bonus for completing at least 80% of the daily surveys). Additional procedural details for the larger study are reported elsewhere (17).
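The compensation schedule can be checked with a short worked example. The dollar amounts and the 80% bonus threshold come from the text above; the function name is hypothetical, and the sketch assumes the baseline survey was completed, since daily surveys were only sent after baseline.

```python
BASELINE_PAYMENT = 25  # USD for completing the baseline survey
DAILY_PAYMENT = 3      # USD for each completed daily survey
BONUS_PAYMENT = 10     # USD bonus for completing at least 80% of daily surveys
TOTAL_DAYS = 14


def total_payment(daily_completed):
    """Return total compensation in USD for a participant who completed baseline."""
    if not 0 <= daily_completed <= TOTAL_DAYS:
        raise ValueError("daily_completed must be between 0 and 14")
    # Integer comparison avoids floating-point rounding at the 80% threshold.
    earned_bonus = daily_completed * 100 >= 80 * TOTAL_DAYS
    bonus = BONUS_PAYMENT if earned_bonus else 0
    return BASELINE_PAYMENT + DAILY_PAYMENT * daily_completed + bonus


print(total_payment(14))  # 25 + 14 * 3 + 10 = 77
```

Because 80% of 14 surveys is 11.2, the bonus in this sketch is reached at 12 or more completed daily surveys.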

Measures

To examine the primary aim of the present study, the experimental conditions (written + video and written-only) were compared across protocol outcome measures, including consent rates, dropout rates, and daily diary survey completion rates. Below is a description of how each of these constructs was operationalized in this study.

Consent

Consent was determined based on whether participants decided to participate or not after reviewing the introductory materials and consent form. This was coded as 0 (no) or 1 (yes) for all individuals who received the emailed invitation with study materials and accessed the video/written materials.

Drop out

After consenting to participate, there were three different times when participants dropped out of the study, as shown in Figure 1. Participants were considered dropped out if they did not complete the baseline survey, did not complete any of the daily surveys, or if they requested to drop out during the 14 days of daily surveys. Given the low dropout rates, we created a composite variable that represents whether participants dropped out at any time during the study, which was coded as 0 (no) or 1 (yes).

Daily diary survey completion

The daily diary completion was the total number of daily surveys completed by participants. This was calculated for all individuals who consented to participate, completed baseline, and did not drop out of the study.

Video viewing characteristics

For participants assigned to the written + video condition, we calculated the time spent on the video pages, the number of videos watched in full, and whether any videos were watched in full. Although we cannot know if participants actually watched the videos, the video started automatically on each page and the time participants spent on each page was recorded. To calculate the total time spent on the videos, we summed the time spent on each of the five video pages. There were instances when participants spent a very long time on a video page (presumably they walked away from the screen and/or were doing something else after the video finished). To avoid inflating the time spent on the videos, in cases where participants spent a very long time on the page (operationalized as more than double the length of the video), the recorded time was reduced to double the length of the video (with the idea being that some participants may have wanted to watch a video again). The number of videos watched in full was calculated (with a buffer of 10 seconds, i.e., if participants stopped the video with 10 seconds or less to go, it was counted as watched in full), and ranged from 0 to 5. Finally, we were interested in whether participants watched any video in full, which was coded as 1 (yes) or 0 (no).
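The three viewing variables described above can be sketched as follows. This is an illustration under stated assumptions rather than the authors' scoring code: the function name is hypothetical, and "watched in full" is operationalized here as time on the page being within 10 seconds of the video's length, which is one reading of the buffer rule.

```python
def video_viewing_metrics(page_seconds, video_seconds, buffer_seconds=10):
    """Summarize viewing for one participant.

    page_seconds: time spent on each video page, in seconds.
    video_seconds: length of the video on each page, in seconds.
    """
    if len(page_seconds) != len(video_seconds):
        raise ValueError("one page time is needed per video")
    # Cap each page time at double the video length before summing.
    capped = [min(t, 2 * d) for t, d in zip(page_seconds, video_seconds)]
    # Assumed reading of the rule: within 10 seconds of the end counts as full.
    full = [t >= d - buffer_seconds for t, d in zip(page_seconds, video_seconds)]
    n_full = sum(full)
    return {
        "total_seconds": sum(capped),
        "videos_in_full": n_full,
        "any_in_full": 1 if n_full > 0 else 0,
    }


# Two-video illustration using the shortest (93 s) and longest (149 s) video
# lengths reported in the text; the page times are hypothetical.
print(video_viewing_metrics([400, 60], [93, 149]))
```

In this illustration the 400-second page time is capped at 186 seconds (double the 93-second video), and only the first video counts as watched in full.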

Data analytic plan

Prior to conducting analyses, we first examined whether the two groups were equivalent on available demographic and study-eligibility characteristics. Then, given that individuals (level 1) were nested within couples (level 2), all analyses were conducted as multilevel models in the HLM software (18). For each analysis, deviance statistics were compared for models with random versus fixed slopes, and the random slope was kept in the final model only if it significantly improved model fit or the slope had significant variability; this was the case for two models (time spent watching videos predicting completion rate and number of videos watched predicting completion rate). For all other analyses, the models with fixed slopes were reported. Time spent watching the videos and number of videos watched were both grand-mean centered for the multilevel analysis. The full sample was used for analyses where group assignment (written-only or written + video) predicted consent, dropout, and survey completion rate in separate models. The sample was then narrowed to only the written + video group for analyses where video watching (time spent, number of videos, or watching any videos) predicted consent, dropout, and survey completion rate in separate models.
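As a rough illustration of two steps in this plan, the sketch below grand-mean centers a predictor and compares deviance statistics for a fixed-slope versus a random-slope model. It is not the HLM procedure itself: the function names are hypothetical, and the test assumes that adding a random slope to a random-intercept model adds two parameters (a slope variance and an intercept-slope covariance), so the deviance difference is referred to a chi-square distribution with 2 degrees of freedom.

```python
import math


def grand_mean_center(values):
    """Subtract the overall sample mean from every value."""
    mean = sum(values) / len(values)
    return [v - mean for v in values]


def random_slope_deviance_test(deviance_fixed, deviance_random):
    """Chi-square difference test for adding a random slope (assumed df = 2).

    For 2 degrees of freedom, the chi-square upper-tail probability has the
    closed form exp(-x / 2), so no statistics library is needed.
    """
    difference = max(deviance_fixed - deviance_random, 0.0)
    p_value = math.exp(-difference / 2)
    return difference, p_value


# Hypothetical viewing times (seconds) and hypothetical deviance values.
centered = grand_mean_center([120.0, 300.0, 480.0])
difference, p_value = random_slope_deviance_test(1510.4, 1502.1)
keep_random_slope = p_value < 0.05
```

After grand-mean centering, the intercept is interpreted at the sample-average value of the predictor rather than at zero.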

Results

Consent, dropout, and daily survey completion

The two groups were first compared on screening survey characteristics to examine whether randomization worked. The groups were not significantly different across age, attraction, sexual identity, time in relationship, number of drinking days in the past two weeks, or highest number of drinks. The proportion of participants seeing their partner 6-7 days per week was significantly higher in the written-only group (n=161; 88.0%) than the written + video group (n=138; 75.8%). However, this variable (frequency of seeing their partner) was not significantly associated with any of the outcome variables (i.e., rates of consent, dropout, or compliance) and thus was not included as a covariate in the main analyses.

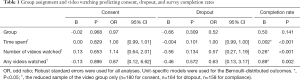

Table 1 presents the effect of group on consent rates, dropout, and daily survey completion rates. Across both experimental groups, the number of eligible participants who reviewed the written-only/written + video materials was very high with 365 of the 376 potentially eligible women (97.1%) accessing the online survey they were sent with the introductory materials. Of the 365 women who reviewed the materials, 96.7% (n=353) consented. As shown in Table 1, consent rates did not differ by experimental condition (P=0.968), with 96.7% of each group consenting to participate (i.e., 6 participants in each group did not consent).

Regarding study dropout, overall dropout was low. As shown in Figure 1, a total of 18 participants dropped out of the study (9 did not complete baseline, 5 did not begin the daily surveys, and 4 requested to drop out during the daily surveys) and 317 individuals successfully completed the study, indicating that 5.4% (18 of 335) of eligible and consented participants dropped out of the study. Six participants in the written + video group dropped out (3.6%; 6 of 164), and 12 participants in the written-only group dropped out (7.0%; 12 of 171). As shown in Table 1, there was no significant difference in dropout rates between the two groups (P=0.309).
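The participant flow reported in the text can be verified with a few lines of arithmetic. All counts below are taken from the Methods and Results; only the variable names are introduced here for illustration.

```python
recruited = 376                      # potentially eligible women (188 couples)
accessed_materials = 365             # opened the introductory survey
consented = 353                      # consented after reviewing materials
withdrawn_partner_no_consent = 17    # partner did not consent
withdrawn_partner_no_baseline = 1    # partner did not complete baseline
dropped_out = {
    "before baseline": 9,
    "before daily surveys": 5,
    "during daily surveys": 4,
}

eligible_and_consented = (
    consented - withdrawn_partner_no_consent - withdrawn_partner_no_baseline
)
total_dropped = sum(dropped_out.values())
completed_study = eligible_and_consented - total_dropped

access_rate = 100 * accessed_materials / recruited
consent_rate = 100 * consented / accessed_materials
dropout_rate = 100 * total_dropped / eligible_and_consented

print(f"Accessed materials: {access_rate:.1f}%")
print(f"Consented: {consent_rate:.1f}%")
print(f"Eligible and consented: {eligible_and_consented}")
print(f"Dropped out: {total_dropped} ({dropout_rate:.1f}%)")
print(f"Completed study: {completed_study}")
```

The denominator for the dropout rate is the 335 participants who remained eligible after the 18 researcher-initiated withdrawals, not the 353 who originally consented.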

Of the 317 participants who completed the daily portion of the survey, overall daily survey completion rates were high with participants completing a mean of 12.66 (SD =2.34) of the 14 daily surveys (90.4%). Participants who received the written-only materials completed a mean of 12.37 (SD =2.53) days of the daily surveys (88.4%) and those assigned to the written + video group completed a mean of 12.95 (SD =2.11) days of the daily surveys (92.5%); these group differences were not statistically significant as is shown in Table 1 (P=0.141).

Video viewing characteristics

A secondary aim of the study was to explore the timing data from those participants who were assigned to the written + video condition. Overall, participants spent an average of 293.97 seconds (SD =227.95) or 4 minutes and 54 seconds reviewing the videos, which is about half of the total time of all videos (10 minutes 8 seconds total). As described previously, we also created a variable that reflected whether a participant watched each video in full. Across the sample of participants in the video condition, 6.7% (n=12) watched all of the videos in full, 1.7% (n=3) watched four videos, 5.0% (n=9) watched three videos, 8.9% (n=16) watched two videos, 24.4% (n=44) watched one video, and 53.3% (n=96) did not watch any of the videos in full. Table 2 presents the actual duration of each video, mean length of time participants spent watching each video, and the percent of participants who watched each video in full. Next, we explored how time spent watching the videos was associated with our primary outcome variables. As is shown in Table 1, participants who spent more time watching the videos, watched more of the videos in full, and watched at least one video in full had higher survey completion rates than participants spending less time watching videos and watching fewer videos in full (Ps<0.002). There were no effects of any of these video watching characteristics on consent or dropout rates.

Discussion

The goal of the present study was to examine whether video presentation of information improved study consent, reduced study dropout, and/or improved daily survey completion rates among a hard-to-reach population accessed remotely. This was accomplished by embedding an experimental design into a larger study that enrolled same-sex female couples who completed a 14-day daily diary protocol. This was one of the first studies that used remote online daily diary collection methods with same-sex female couples, and thus, when planning this study, we were concerned about participant engagement and compliance with the study procedures. As a result, we developed a series of videos to explain the research goals and procedures to participants, and decided to build a design feature into the study to evaluate the utility of these video instructions. In sum, we had very high consent rates (96.7%), very low dropout rates (5.4%) and very high daily survey completion rates (90.4%) across both the written-only and written + video groups, and no significant group differences were seen on any of these three outcome measures, likely, at least in part, due to ceiling and floor effects.

Although sexual minority individuals may be considered a “hard-to-reach” population, our potential participants appeared highly motivated to take part in research, and thus our findings suggest video recruitment materials were not necessary to improve participation in this study. It is likely that our sample, recruited by a market research firm that conducted initial screening of participants prior to referring them to our research team, was already familiar with online survey completion and the incentives for doing so. Further, their membership on the market research firm’s panel reflects motivation to participate in research projects. However, it should be noted that it is likely only one partner within the couple was in the firm’s panel prior to our study, and thus, at least half of our sample may not have been familiar with participating in psychological or health research prior to enrolling in this study. Nonetheless, the current sample may not be representative of a more general sample of sexual minority women in terms of motivation, experience with research, and familiarity with online data collection. People who are less familiar with research may need the additional modality of video instructions to complete the study successfully. In addition, the present study involved completing 14 daily surveys, with participants receiving an email each morning with the survey link. Given that our participants were all young adults ages 18–35, this is likely a task with which they already had considerable familiarity. More complex study designs (such as EMA studies with multiple daily prompts or the need to self-initiate surveys, or studies requiring participants to learn how to use an app on a smartphone or other assessment devices such as accelerometers) may require more extensive instructions and training, even if the sample is highly motivated for research.

In addition to our primary outcome analyses, we also explored whether characteristics of video viewing were associated with consent rates, dropout rates, and/or daily survey completion. We found that in the written + video group, participants who spent more time viewing the videos also completed more of the daily surveys. Although this finding may indicate that viewing the videos increased participants’ interest and investment in the study, it is also possible (and probably more likely) that participants who were more compliant with the instructions to view the videos were also more compliant with completing the daily surveys due to underlying personality characteristics (e.g., conscientiousness). This finding could have two potential implications for future study designs. First, researchers could use pre-study activities (e.g., watching videos, reviewing study instructions, completing practice surveys) as a way to identify participants who may be at risk for study non-compliance. This approach has been used in previous EMA studies where researchers use a “practice phase” of the EMA portion of the study, which consists of completing the EMA protocol for several days, but these data are not used in analyses (19); for a few examples, see Chen, Cordier, and Brown (20) and two studies described in Zawadzki et al. (21). At the end of this period, participants’ compliance issues could be addressed in several ways prior to continuing data collection. For example, in the Effects of Stress on Cognitive Aging, Physiology and Emotion (ESCAPE) Project, participants completed a 2-day “practice phase” of EMA data collection and only participants who completed at least 80% of all assessments during the practice phase were eligible to continue in the study (22), which can help to improve compliance for the final sample. 
Second, an alternative to requiring a minimum level of compliance would be to use data collected during a training or practice phase to inform the level of support that a given participant may require to achieve adequate compliance. For instance, participants who are non-compliant (or less compliant) with training or practice activities could receive additional support or reminders during the study. In order to help enhance our understanding of methodological and training issues in EMA studies, researchers could use sequential, multiple assignment, randomized trials (SMARTs), whereby participants are randomly assigned to different follow-up or support conditions at various stages of the study (23). Such an approach could help inform future research in determining the optimal level of support that participants need, without allocating excessive resources to training of participants who may be compliant with more minimal support.

Limitations and future directions

There are several limitations in this study that must be acknowledged. These findings may not generalize to those less familiar with research, to age groups other than young adults, or to those participating in studies using more complex data collection procedures involving devices such as an accelerometer or an app. Further, the degree to which participating as a couple may have enhanced compliance compared to individual participation is not known. Examining the benefit of video instructions or alternate training methods across a wide variety of EMA study designs, age groups, and participant characteristics is warranted. Future research using embedded design features to increase our understanding of how to maximize quality of EMA study design and implementation is essential. Information generated from these experimental manipulations may be especially important for populations less inclined to participate in EMA studies, who are less familiar with research, and who participate in studies with more complex designs.

Conclusions

The embedded experimental design of this study permitted investigation of whether video instructions were “value-added” in terms of participant training and compliance outcomes in a 14-day daily diary study in a sample of female same-sex couples. The present sample appears to be highly motivated, and thus, compliance with the study protocol was excellent overall. Video instructions did not increase the likelihood of consent, decrease the likelihood of dropout, or lead to more completed daily surveys. It is important to replicate these findings in samples less inclined to participate in, and less familiar with, research, as well as for studies with more complex protocols (e.g., involving learning a new app or device, or participant-initiated surveys). Understanding best practices for remotely training participants is essential as researchers increasingly use complex EMA designs to collect data in real life and real time.

Acknowledgments

Funding: Research reported in this publication was supported by the National Institute on Alcohol Abuse and Alcoholism of the National Institutes of Health under Award Number R15AA020424 (PI: Robin Lewis) and the National Institute on Minority Health and Health Disparities under award number R01MD012598 (PI: Kristin Heron). The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Footnote

Reporting Checklist: The authors have completed the MDAR checklist. Available at http://dx.doi.org/10.21037/mhealth-20-116

Data Sharing Statement: Available at http://dx.doi.org/10.21037/mhealth-20-116

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at http://dx.doi.org/10.21037/mhealth-20-116). Kristin E. Heron serves as an unpaid editorial board member of mHealth from February 2019 to January 2021. The other authors have no conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. The study procedure was reviewed by the Old Dominion University Institutional Review Board (#839097) and it conforms to the provisions of the Helsinki Declaration (as revised in 2013). Informed consent was taken from all participants.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

1. Smyth JM, Wonderlich SA, Sliwinski MJ, et al. Ecological momentary assessment of affect, stress, and binge-purge behaviors: day of week and time of day effects in the natural environment. Int J Eat Disord 2009;42:429-36.

2. Hamaker EL. Why researchers should think “within-person”: A paradigmatic rationale. In: Mehl MR, Conner TS, editors. Handbook of research methods for studying daily life. New York: The Guilford Press, 2012:43-61.

3. Reis HT. Why researchers should think “real-world”: A conceptual rationale. In: Mehl MR, Conner TS, editors. Handbook of research methods for studying daily life. New York: The Guilford Press, 2012:3-21.

4. Schwarz N. Retrospective and concurrent self-reports: The rationale for real-time data capture. In: Stone AA, Shiffman S, et al., editors. The science of real-time data capture: Self-reports in health research. New York: Oxford University Press, 2005:11-26.

5. Schwarz N. Why researchers should think “real-time”: A cognitive rationale. In: Mehl MR, Conner TS, editors. Handbook of research methods for studying daily life. New York: The Guilford Press, 2012:22-42.

6. Smyth JM, Stone AA. Ecological momentary assessment research in behavioral medicine. J Happiness Stud 2003;4:35-52.

7. Jackson CL, Agenor M, Johnson DA, et al. Sexual orientation identity disparities in health behaviors, outcomes, and services among men and women in the United States: A cross-sectional study. BMC Public Health 2016;16:807.

8. National Institutes of Health. The health of lesbian, gay, bisexual, and transgender people: Building a foundation for better understanding. Washington, DC: National Academies Press, 2011.

9. Burke LE, Shiffman S, Music E, et al. Ecological momentary assessment in behavioral research: addressing technological and human participant challenges. J Med Internet Res 2017;19:e77.

10. Conner TS, Lehman BJ. Getting started: Launching a study in daily life. In: Mehl MR, Conner TS, editors. Handbook of research methods for studying daily life. New York: The Guilford Press, 2012:89-107.

11. Kubiak T, Krog K. Computerized sampling of experiences and behavior. In: Mehl MR, Conner TS, editors. Handbook of research methods for studying daily life. New York: The Guilford Press, 2012:124-43.

12. Heron KE, Everhart RS, McHale S, et al. Using Ecological Momentary Assessment (EMA) methods with youth: A systematic review and recommendations. J Pediatr Psychol 2017;42:1087-107.

13. Sun AQ, Chen X. Online education and its effective practice: A research review. J Info Tech Educ Res 2016;15:157-90.

14. Hansard C, Kazemi E. Evaluation of video self‐instruction for implementing paired‐stimulus preference assessments. J Appl Behav Anal 2018;51:675-80.

15. Prakash SR, Verma S, McGowan J, et al. Improving the quality of colonoscopy bowel preparation using an educational video. Can J Gastroenterol 2013;27:696-700.

16. van der Meij H, van der Meij J. A comparison of paper-based and video tutorials for software learning. Comput Educ 2014;78:150-9.

17. Heron KE, Lewis RJ, Shappie AT, et al. Rationale and design of a remote web-based daily diary study examining sexual minority stress, relationship factors, and alcohol use in same-sex female couples across the United States: Study protocol of project relate. JMIR Res Protoc 2019;8:e11718.

18. Raudenbush SW, Bryk AS, Cheong YF, et al. HLM 7 for Windows. Lincolnwood, IL: Scientific Software International, Inc., 2011.

19. Stone AA, Shiffman S. Capturing momentary, self-report data: A proposal for reporting guidelines. Ann Behav Med 2002;24:236-43.

20. Chen YW, Cordier R, Brown N. A preliminary study on the reliability and validity of using experience sampling method in children with autism spectrum disorders. Dev Neurorehabil 2015;18:383-9.

21. Zawadzki MJ, Scott SB, Almeida DM, et al. Understanding stress reports in daily life: A coordinated analysis of factors associated with the frequency of reporting stress. J Behav Med 2019;42:545-60.

22. Scott SB, Graham-Engeland JE, Engeland CG, et al. The effects of stress on cognitive aging, physiology, and emotion (ESCAPE) project. BMC Psychiatry 2015;15:146.

23. Lei H, Nahum-Shani I, Lynch K, et al. A “SMART” design for building individualized treatment sequences. Annu Rev Clin Psychol 2012;8:21-48.

Cite this article as: Heron KE, Braitman AL, Dawson CA, MacIntyre RI, Howard LM, Lewis RJ. Evaluating study procedure training methods for a remote daily diary study of sexual minority women. mHealth 2021;7:46.